After more than two decades in cybersecurity, I’ve learned to recognize the patterns. A breakthrough technology emerges. Developers dive in. Business leaders push for faster adoption. And security? We're usually a few steps behind, scrambling to catch up.

It happened with cloud, when teams rushed to lift-and-shift workloads to AWS, Azure and GCP before controls were in place. It happened again with containers and DevOps, where microservices and CI/CD pipelines reshaped infrastructure before security had a seat at the table.

Now it’s happening with AI.

Developers are building applications with foundation models and retrieval-augmented generation using tools like Amazon Bedrock, Azure OpenAI and Hugging Face. They’re wiring up vector databases, ingesting sensitive data and deploying AI agents that can adapt, reason and generate outputs autonomously, often without the awareness or oversight of security teams.

This time, though, the risks feel different. AI systems don’t just process data; they absorb it, adapt to it, and can expose it in ways we're only beginning to understand. The attack surface isn't just wider; it’s evolving in real time.

The challenge is clear: traditional cloud and application security tools weren’t built for this. They can’t see or understand AI-specific threats such as prompt injection, model poisoning or inference abuse.

But we don’t have to stay in reactive mode.

Cortex Cloud AI Security Posture Management gives security teams the visibility and control to get ahead of AI risk — without slowing down innovation. Built natively for AI-powered applications, it maps data flows, monitors AI-specific threats, and helps organizations implement meaningful governance across the AI lifecycle.

In this post, I’ll walk through how security teams can use AI-SPM to go from blind spots to proactive control — starting with discovery and ending with continuous, AI-native detection and response.

The AI Security Visibility Gap

Traditional cloud and application security tools were built for a different era, one where applications had predictable architectures and data flows. AI applications fundamentally break these assumptions.

Consider the modern AI application stack: Training datasets feed into foundation models, which connect to vector databases for retrieval-augmented generation (RAG), expose endpoints through microservices, and integrate with inference datasets to personalize responses. Each component introduces unique security risks that traditional security tools weren’t designed to detect.

The Three Critical AI Security Domains

AI security spans three interconnected domains that traditional security approaches handle poorly:

1. Data Security Across the AI Pipeline

Training datasets and inference data often contain some of the most sensitive information in your organization. When a financial institution fine-tunes a model on customer transaction data, or a healthcare company builds RAG systems using patient records, the security implications extend far beyond traditional data loss prevention.

2. AI Risk Identification

AI deployments introduce new misconfiguration risks that traditional security tools can't detect. Models may be deployed without proper guardrails, training datasets could be publicly writable (creating data poisoning risks), or unvetted models from repositories like Hugging Face might be running in production environments.
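To make these three misconfiguration classes concrete, here is a minimal rule-based sketch in Python. It assumes a simple in-memory inventory; the `AIAsset` record, its field names and the rule wording are all hypothetical illustrations, not Cortex Cloud's schema or detection logic.

```python
from dataclasses import dataclass

# Hypothetical inventory record; these fields are illustrative, not a product schema.
@dataclass
class AIAsset:
    name: str
    kind: str                      # "model" or "dataset"
    environment: str = "dev"       # "dev", "test" or "prod"
    guardrails_enabled: bool = True
    publicly_writable: bool = False
    source: str = "internal"       # e.g. "internal" or "huggingface"
    vetted: bool = True

def find_misconfigurations(assets):
    """Apply three example posture rules and return (asset name, finding) pairs."""
    findings = []
    for a in assets:
        is_prod_model = a.kind == "model" and a.environment == "prod"
        if is_prod_model and not a.guardrails_enabled:
            findings.append((a.name, "production model without guardrails"))
        if a.kind == "dataset" and a.publicly_writable:
            findings.append((a.name, "publicly writable training dataset (poisoning risk)"))
        if is_prod_model and a.source != "internal" and not a.vetted:
            findings.append((a.name, "unvetted third-party model in production"))
    return findings

inventory = [
    AIAsset("support-bot", "model", "prod", guardrails_enabled=False),
    AIAsset("train-set-a", "dataset", publicly_writable=True),
    AIAsset("hf-summarizer", "model", "prod", source="huggingface", vetted=False),
    AIAsset("internal-ranker", "model", "prod"),
]

for name, issue in find_misconfigurations(inventory):
    print(f"{name}: {issue}")
```

Each rule is deliberately a plain predicate over the inventory, so new AI-specific checks (excessive endpoint permissions, for instance) can be added without touching the others.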

Traditional security tools also miss AI-specific risks like excessive permissions granted to model endpoints or misconfigurations that expose AI infrastructure. The challenge isn't just knowing where models come from, but ensuring they're properly configured with appropriate security controls.

3. AI-Aware Real-Time Detection and Response

AI applications require continuous monitoring for security events that could indicate compromise or policy violations. Traditional security tools see AI workloads as generic services and miss critical events such as administrators disabling model guardrails, unauthorized changes to AI configurations or suspicious access patterns to AI resources. Detecting these events requires real-time capabilities that understand AI-specific behaviors and can immediately alert security teams when governance controls are being bypassed.

When these events are detected, automated remediation can help maintain security posture by reverting unauthorized changes or temporarily restricting access until a proper review is completed.
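The "revert unauthorized changes" idea boils down to comparing live configuration against an approved baseline. The sketch below shows that comparison in Python under loudly stated assumptions: the configuration keys (`guardrail_id`, `content_filter_strength`, `prompt_logging`) and the `change_approved` flag are hypothetical, and a real system would read and write settings through the cloud provider's APIs rather than dictionaries.

```python
# Hypothetical configuration keys and values; a real system would read and write
# these through the cloud provider's APIs rather than plain dictionaries.
APPROVED_BASELINE = {
    "guardrail_id": "gr-approved-01",
    "content_filter_strength": "HIGH",
    "prompt_logging": True,
}

def plan_reversion(current, baseline):
    """Return the baseline settings that the current configuration has drifted from."""
    return {key: value for key, value in baseline.items() if current.get(key) != value}

def remediate(current, baseline, change_approved):
    """Revert unapproved drift; leave reviewed changes untouched.

    Returns the resulting configuration and the settings that were restored.
    """
    if change_approved:
        return dict(current), {}
    fixes = plan_reversion(current, baseline)
    return {**current, **fixes}, fixes

# An administrator removed the guardrail and weakened filtering without review.
current = {"guardrail_id": None, "content_filter_strength": "LOW", "prompt_logging": True}
restored, fixes = remediate(current, APPROVED_BASELINE, change_approved=False)
print(fixes)
```

Returning the list of restored settings alongside the new configuration gives the security team an audit trail of exactly what was reverted.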

Why Traditional Security Falls Short

Organizations typically discover their AI security gaps through reactive incidents rather than proactive assessment. Security teams scramble to trace which models accessed sensitive data after a breach, or struggle to demonstrate AI compliance during audits.

The fundamental problem: Traditional security tools can't understand AI-specific risks or answer critical questions like:

- Which training datasets contain regulated data?

- Are production models running without proper guardrails?

- How many unvetted models from public repositories are active in production?

- Which AI applications have access to crown jewel datastores?

Each question requires AI-native visibility that spans the entire application lifecycle.

Introducing Cortex Cloud AI-SPM

Cortex Cloud AI-SPM provides a comprehensive solution designed specifically for AI-powered applications.

The platform understands AI architectures at a fundamental level. It recognizes the difference between training datasets and inference datasets, maps data flows through RAG pipelines, and identifies AI-specific attack vectors. Most importantly, it prioritizes risks based on actual business impact rather than generating yet another flood of generic alerts.

For security teams, this means moving from reactive investigation to proactive AI governance. For CISOs, it delivers the visibility needed to enable AI innovation while maintaining enterprise security standards.

Implementing AI Security Posture Management: A Practical Walkthrough

Let's examine how a security team would implement comprehensive AI security using Cortex Cloud AI-SPM.

Phase 1: Discover Your AI Estate

The first step addresses the fundamental visibility gap. Many organizations are shocked to discover the scope of their AI footprint once they implement systematic discovery.

AI Asset Inventory

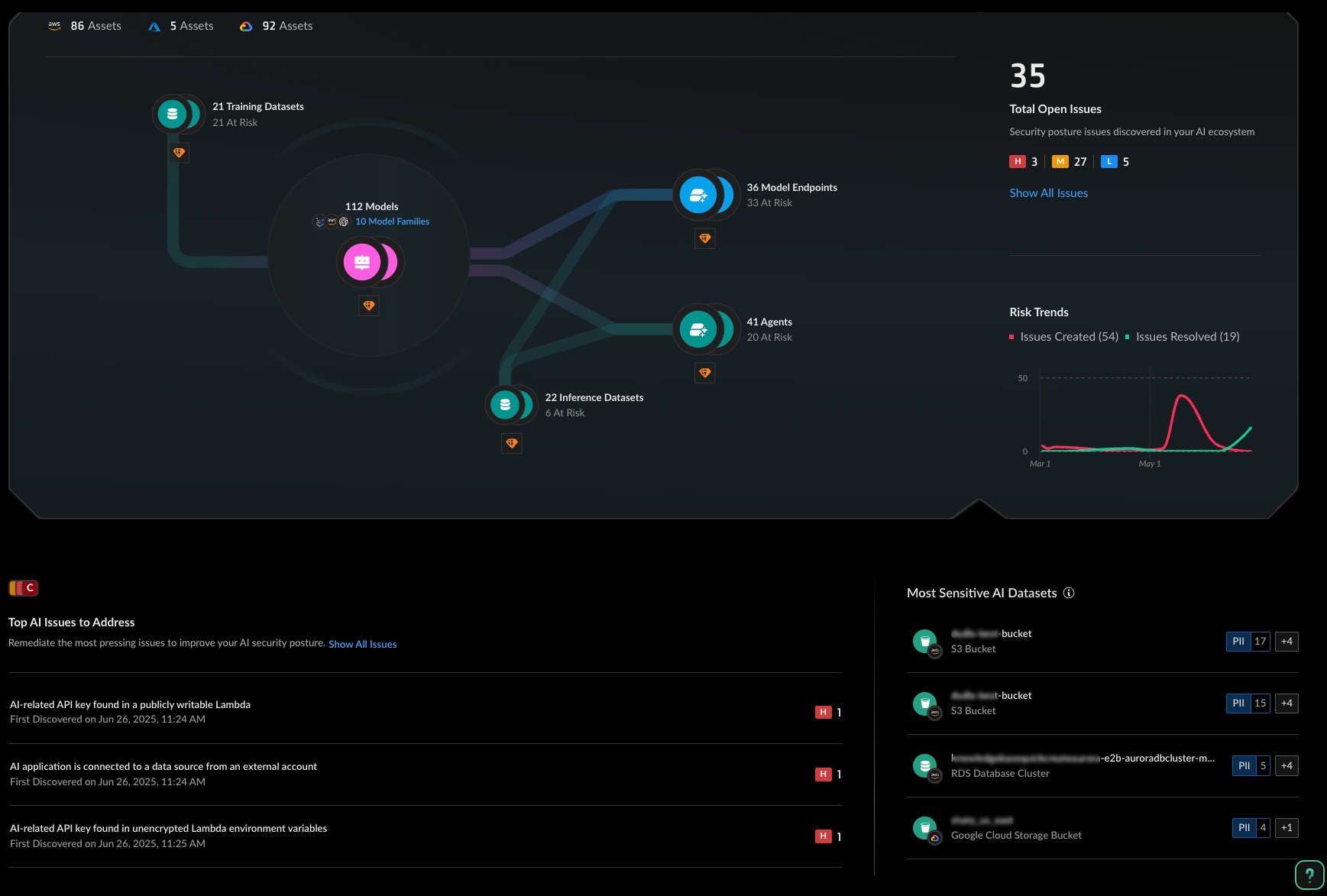

The security team begins with comprehensive AI asset discovery across its multicloud environment using the AI Security dashboard.

Cortex Cloud AI-SPM automatically identifies all AI components, their relationships and the top-level risks related to each component.

Automated discovery helps reveal shadow AI that manual audits miss — models deployed by development teams in test environments that inadvertently access production data, for example.
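At its core, shadow AI detection compares what discovery finds with what the security team knows about. The Python sketch below illustrates that comparison; the model names, data store names and the "registered" set are hypothetical inputs, and the two finding labels are illustrative rather than the platform's actual categories.

```python
# Hypothetical inputs: what automated discovery found versus what the security
# team has registered, plus the data stores known to hold production data.
discovered = {
    "fraud-scorer": {"env": "prod", "data_sources": ["prod-transactions"]},
    "chat-proto": {"env": "test", "data_sources": ["prod-customer-db"]},
    "doc-search": {"env": "test", "data_sources": ["test-fixtures"]},
}
registered = {"fraud-scorer"}
production_data = {"prod-transactions", "prod-customer-db"}

def find_shadow_ai(discovered, registered, production_data):
    """Map each unregistered model to a finding, escalating non-prod models that read prod data."""
    report = {}
    for name, info in discovered.items():
        if name in registered:
            continue
        reads_prod = bool(production_data.intersection(info["data_sources"]))
        if reads_prod and info["env"] != "prod":
            report[name] = "non-production model reading production data"
        else:
            report[name] = "unregistered model"
    return report

print(find_shadow_ai(discovered, registered, production_data))
```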

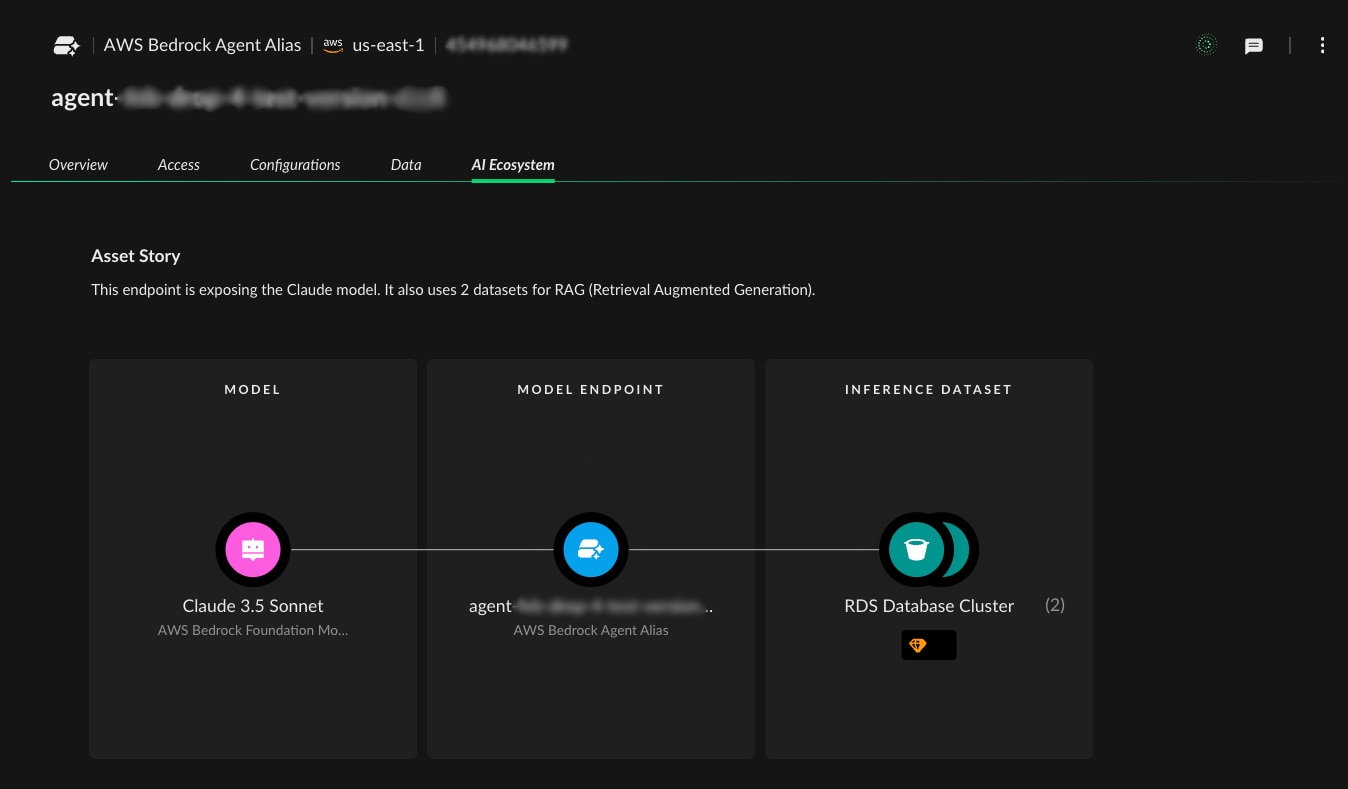

AI Ecosystem Mapping

Beyond simple asset lists, the platform maps relationships between AI components. The security team can trace data lineage from training datasets through models to applications, understanding how sensitive data flows through the AI pipeline.
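Data lineage across an AI pipeline is naturally a directed graph, and "where does this dataset end up?" becomes a reachability question. Here is a minimal breadth-first search sketch in Python; the component names and edges in `FLOWS` are hypothetical, standing in for whatever relationships a discovery tool actually records.

```python
from collections import deque

# Hypothetical edges: each key feeds data into the listed downstream components.
FLOWS = {
    "patient-records-dataset": ["clinical-finetune-model"],
    "clinical-finetune-model": ["triage-endpoint"],
    "kb-vector-store": ["triage-endpoint"],
    "triage-endpoint": ["patient-portal-app"],
}

def downstream(start, flows):
    """Breadth-first search returning every component reachable from `start`, nearest first."""
    seen, queue, order = set(), deque([start]), []
    while queue:
        node = queue.popleft()
        for nxt in flows.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order

print(downstream("patient-records-dataset", FLOWS))
```

Breadth-first order lists the closest consumers first, which matches how an analyst usually reads lineage: dataset, then model, then endpoint, then application.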

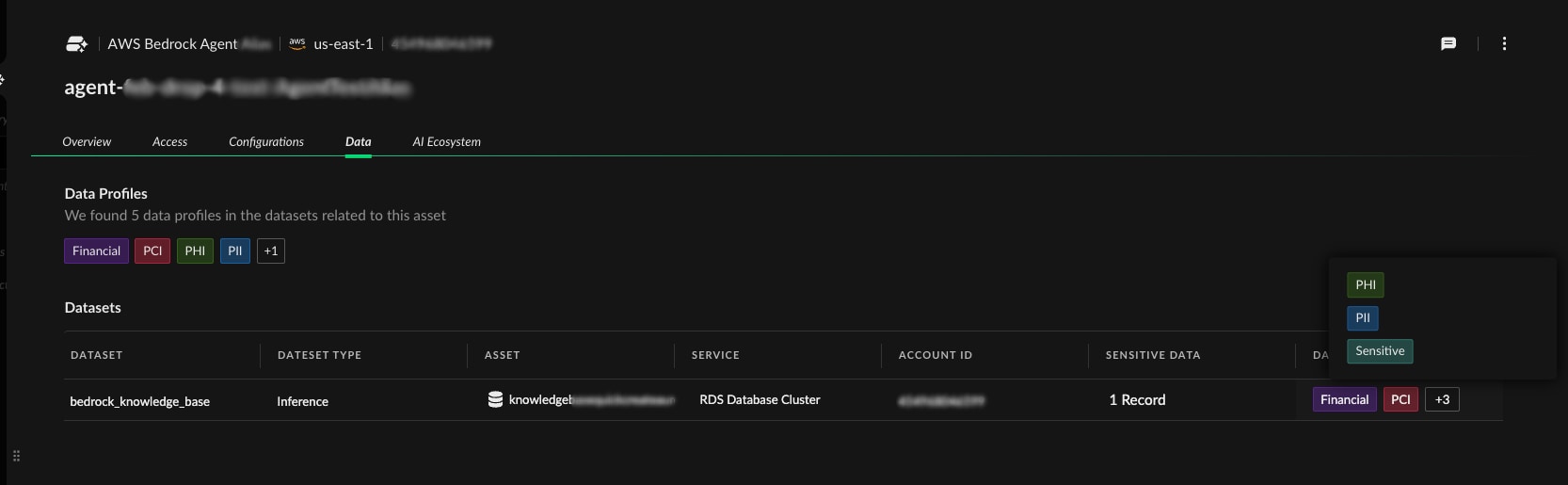

For each asset, they gain deep contextual information:

- Which cloud provider and region hosts the resource

- What data classification levels are involved (PII, PHI, PCI)

- Which identities have access permissions

- How the asset connects to broader business applications

Phase 2: Risk Assessment and Prioritization

With complete visibility established, the security team moves to intelligent risk assessment. Rather than treating all findings equally, Cortex Cloud AI-SPM prioritizes risks based on actual threat potential and business impact.

AI-Specific Risk Detection & Analysis

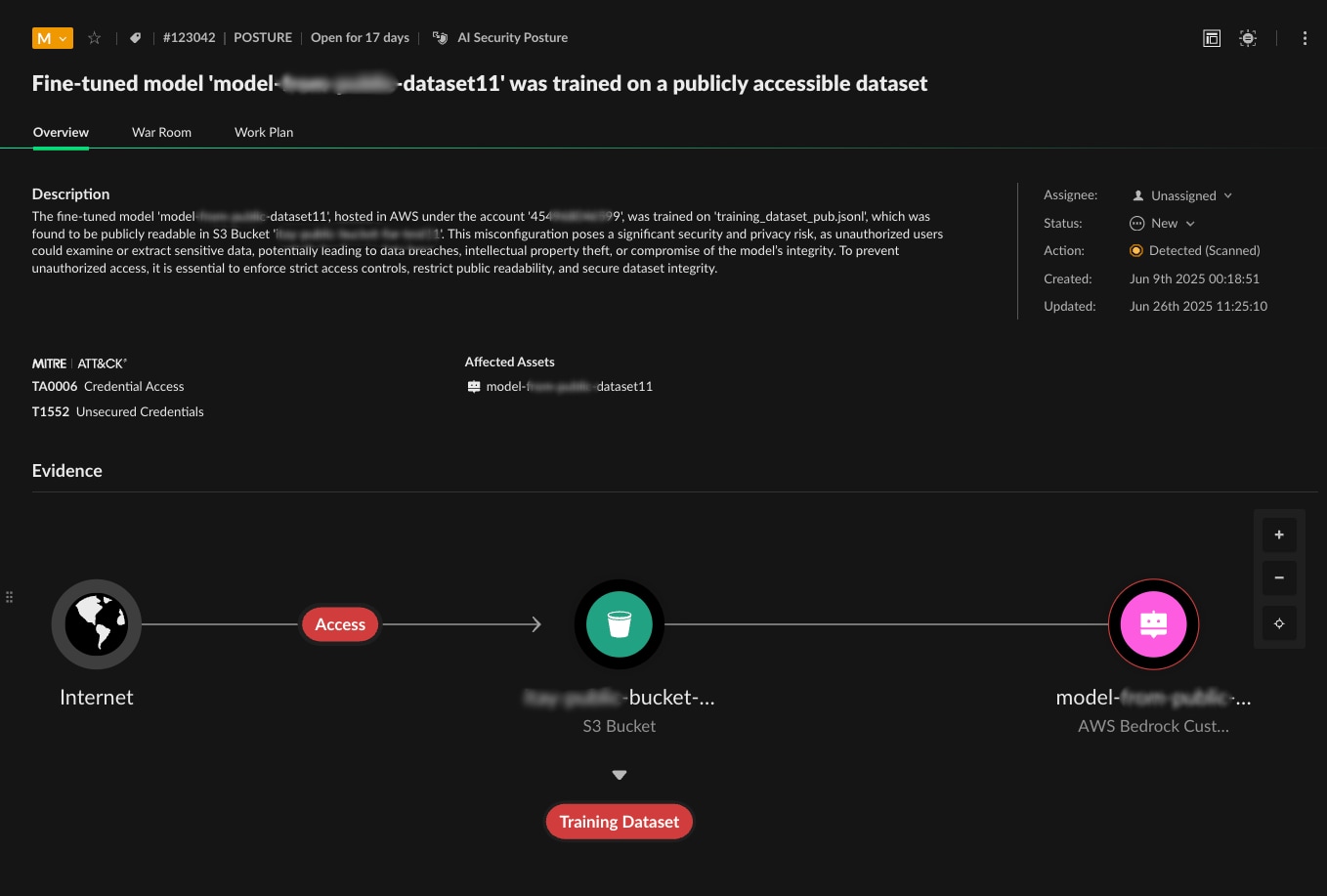

What struck the security team most about the risk assessment was the sheer variety of AI-specific threats — issues that traditional security scans would never surface. The platform uncovered fine-tuned models trained on public datasets vulnerable to poisoning, production applications lacking prompt injection protection and unvetted self-hosted models now processing sensitive customer data. Some models had disabled guardrails; others were granted excessive permissions or trained on publicly writable datasets.

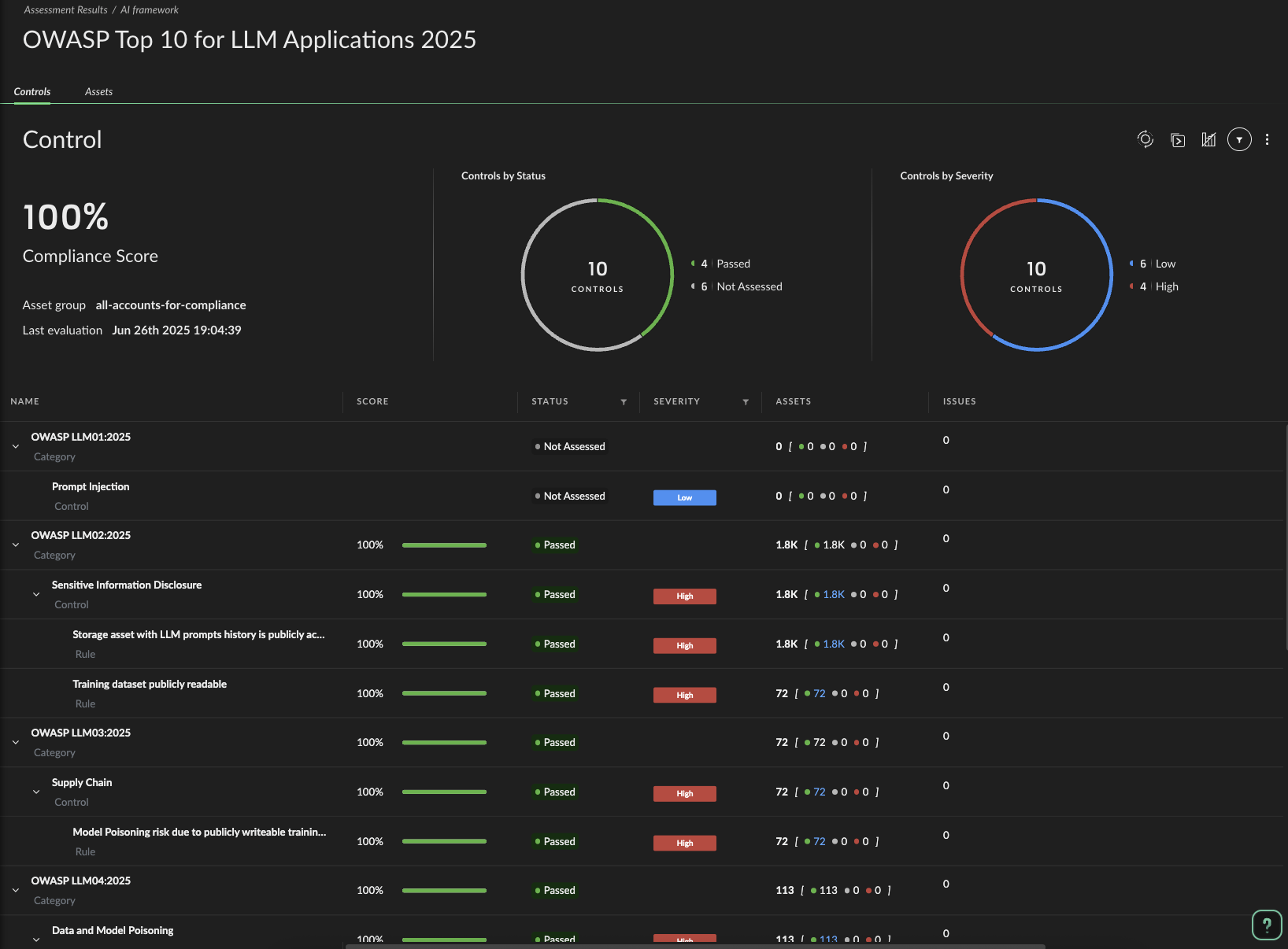

Each finding mapped cleanly to emerging frameworks like the OWASP Top 10 for LLM Applications and the NIST AI Risk Management Framework, but seeing them play out across live production infrastructure made one thing clear: the AI attack surface isn’t theoretical anymore. It’s here, and it’s unlike anything security teams have faced before.

The platform performs intelligent risk analysis that considers AI-specific threat vectors and their potential business impact.

In the example below, the platform identified a critical risk scenario:

"AI application 'agent-xxx-xxxxx-xxxxxxx' is connected to a data source that ingests data from external account '21xxxxxxxxxxx'"

The evidence visualization shows how an adversary could exploit this configuration: an Amazon Bedrock agent processes external data input, a storage bucket containing training data is marked as "in external account," and that unknown external data source creates a data poisoning risk.
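The underlying check can be sketched as "does any data source feeding this AI application live outside the accounts we own?" The Python below is a minimal illustration under stated assumptions: the account IDs are placeholders, the `DataSource` and `AgentApp` records are hypothetical, and the rule that training-data sources rate "critical" is an illustrative choice, not the platform's scoring.

```python
from dataclasses import dataclass, field

# Placeholder account IDs for the organization; purely illustrative.
ORG_ACCOUNTS = {"111111111111", "222222222222"}

@dataclass
class DataSource:
    name: str
    owner_account: str
    holds_training_data: bool

@dataclass
class AgentApp:
    name: str
    data_sources: list = field(default_factory=list)

def external_ingestion_risks(app, org_accounts):
    """Flag data sources feeding an AI app that are owned outside the organization."""
    risks = []
    for src in app.data_sources:
        if src.owner_account not in org_accounts:
            # Assumption: externally owned training data is the worst case (poisoning).
            severity = "critical" if src.holds_training_data else "high"
            risks.append((app.name, src.name, src.owner_account, severity))
    return risks

agent = AgentApp("agent-demo", [
    DataSource("kb-bucket", "111111111111", holds_training_data=False),
    DataSource("train-bucket", "999999999999", holds_training_data=True),
])
print(external_ingestion_risks(agent, ORG_ACCOUNTS))
```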

This kind of AI-aware threat analysis represents exactly what traditional security tools miss. They might flag the external data connection as a misconfiguration, but they can't understand that the specific pattern creates a model poisoning attack vector, enabling malicious actors to manipulate training data.

Sensitive Data Context

The platform takes this analysis further by correlating every AI asset with actual sensitive data exposure. Instead of theoretical risk scores, the team sees concrete impact: for example, which assets hold records containing PII, protected health information or payment card data. This data-centric view transforms prioritization from guesswork into evidence-based decision-making.
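One simple way to express data-centric prioritization is to rank findings by severity first and sensitive-data exposure second. The sketch below assumes hypothetical per-category weights (PHI weighted highest) and made-up record counts; it illustrates the ranking idea only and is not how Cortex Cloud computes priority.

```python
# Hypothetical weights per data category; tune these to your own risk model.
WEIGHTS = {"PII": 1, "PHI": 3, "PCI": 2}
SEVERITY_RANK = {"critical": 3, "high": 2, "medium": 1, "low": 0}

def exposure_score(records):
    """Weighted count of sensitive records an affected asset can reach."""
    return sum(WEIGHTS.get(category, 0) * count for category, count in records.items())

def prioritize(findings):
    """Sort by severity, then break ties by sensitive-data exposure (highest first)."""
    return sorted(
        findings,
        key=lambda f: (SEVERITY_RANK[f["severity"]], exposure_score(f["records"])),
        reverse=True,
    )

# Illustrative findings with made-up record counts.
findings = [
    {"id": "no-guardrail-chatbot", "severity": "high", "records": {"PII": 12000}},
    {"id": "rag-store-phi", "severity": "high", "records": {"PHI": 5000, "PII": 5000}},
    {"id": "public-dataset", "severity": "medium", "records": {}},
]
print([f["id"] for f in prioritize(findings)])
```

Two findings with the same severity are separated by what they actually expose, which is the shift from "theoretical risk scores" to concrete impact described above.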

Phase 3: Implementing AI-Specific Controls

With clear risk visibility, the security team can now implement targeted controls that address AI-specific attack vectors without hindering AI innovation.

Model Security Governance

The team tackles the Wild West of model deployment first by gaining complete visibility into their AI estate. No more developers deploying models without security awareness — the platform now provides clear visibility into which models are running, where they came from, and what security controls are in place. Training datasets get the same visibility and classification treatment as any crown jewel asset, with clear identification of what data types are involved and which assets contain marketing copy versus customer financial records.

Perhaps most importantly, the platform identifies when production deployments lack proper guardrails, providing specific recommendations following OWASP LLM01 Prompt Injection guidance. Security teams can now see which models need additional protection and work with development teams to implement appropriate controls. This visibility enables the same rigorous governance approach that manages any other production system, because that's exactly what these AI applications have become.

Data Protection for AI Pipelines

Here's where traditional data protection falls apart. AI applications don't just read databases — they ingest entire datasets into vector stores, retrieve context through RAG queries, and generate responses that could inadvertently expose the data they're supposed to protect.

The platform provides visibility into these AI-specific data flows, helping teams understand how sensitive data moves through AI pipelines. Vector databases are classified and tracked with the same rigor as the source data they contain, following NIST Privacy Framework core functions.

The platform identifies potential data exposure risks by mapping how sensitive data flows from training datasets through models to inference endpoints. Security teams then gain visibility into sensitive data usage across the AI pipeline, enabling them to address OWASP LLM03 Training Data Poisoning risks with informed governance decisions.

Identity and Access Management for AI

The team implements AI-aware identity controls that understand the specialized access patterns of AI applications. Following NIST Zero Trust Architecture principles, they right-size AI permissions to enforce least privilege — no more AI services with blanket access to entire data lakes. They establish monitoring for the nonhuman identities that AI services use to access cloud resources and implement detection for privilege escalation where AI applications gain unauthorized access to additional data sources, addressing OWASP LLM08 Excessive Agency risks.
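A first pass at right-sizing AI permissions is spotting wildcard grants in the policies attached to AI service roles. The sketch below parses a standard AWS IAM JSON policy and flags `Allow` statements with wildcard actions or resources. It is deliberately simplified (it ignores `NotAction`, `NotResource` and conditions), and the example policy for a RAG service role is hypothetical.

```python
import json

def _as_list(value):
    # IAM allows Statement, Action and Resource to be a single value or a list.
    return value if isinstance(value, list) else [value]

def overly_broad_statements(policy_json):
    """Return Allow statements that grant wildcard actions or all resources."""
    policy = json.loads(policy_json)
    flagged = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if any(a == "*" or a.endswith(":*") for a in actions) or "*" in resources:
            flagged.append(stmt)
    return flagged

# Hypothetical policy on an AI service role: one blanket grant, one scoped grant.
rag_role_policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        {"Effect": "Allow", "Action": ["s3:GetObject"],
         "Resource": ["arn:aws:s3:::kb-docs/*"]},
    ],
})
print(overly_broad_statements(rag_role_policy))
```

The scoped `s3:GetObject` grant on a single prefix passes; the blanket `s3:*` on every resource is exactly the "access to entire data lakes" pattern least privilege is meant to remove.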

Phase 4: Continuous Detection and Response

AI security isn't a one-time implementation. It requires continuous monitoring as new models are deployed and AI applications evolve.

Continuous AI Security Visibility

Cortex Cloud AI-SPM provides ongoing visibility into the AI security posture across your cloud environment. The platform continuously identifies AI assets, tracks configuration changes and assesses risks as your AI deployments evolve. This continuous assessment helps security teams maintain awareness of their AI landscape and prioritize emerging risks based on actual threat potential and business impact.

AI-Aware Real-Time Detection and Response

Beyond static risk assessment, Cortex Cloud AI-SPM provides real-time detection of security events that could indicate active threats or policy violations targeting AI infrastructure. The platform monitors for critical events such as unauthorized modifications to AI safeguards and unusual changes to model configurations. For example, when the system detects that "AI safeguards were modified," it immediately alerts security teams to potential evasion attempts that align with MITRE ATLAS technique AML.T0015 (Evade ML Model).
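As an illustration of the "AI safeguards were modified" detection, the sketch below filters audit events modeled loosely on AWS CloudTrail records (`eventSource`, `eventName`, `eventTime`, `userIdentity.arn`) for Amazon Bedrock guardrail changes. Treat the monitored action set, the approved-principal list and the severity rule as assumptions to verify against your own logs; this is not the platform's detection logic.

```python
# Bedrock guardrail API actions treated as safeguard modifications; verify the
# exact set of monitored actions against your own CloudTrail data.
GUARDRAIL_CHANGES = {"UpdateGuardrail", "DeleteGuardrail"}

def detect_guardrail_tampering(events, approved_principals):
    """Turn guardrail-modifying events into alerts tagged with MITRE ATLAS AML.T0015."""
    alerts = []
    for event in events:
        if event.get("eventSource") != "bedrock.amazonaws.com":
            continue
        if event.get("eventName") not in GUARDRAIL_CHANGES:
            continue
        actor = event.get("userIdentity", {}).get("arn", "unknown")
        alerts.append({
            "time": event.get("eventTime"),
            "action": event["eventName"],
            "actor": actor,
            "technique": "AML.T0015",  # Evade ML Model
            # Changes by principals outside the approved list are escalated.
            "severity": "medium" if actor in approved_principals else "high",
        })
    return alerts

# Hypothetical sample events with placeholder account IDs.
sample_events = [
    {"eventSource": "bedrock.amazonaws.com", "eventName": "DeleteGuardrail",
     "eventTime": "2025-01-01T10:00:00Z",
     "userIdentity": {"arn": "arn:aws:iam::111111111111:user/dev-temp"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "GetGuardrail",
     "eventTime": "2025-01-01T10:05:00Z",
     "userIdentity": {"arn": "arn:aws:iam::111111111111:role/ml-admin"}},
]
approved = {"arn:aws:iam::111111111111:role/ml-admin"}
for alert in detect_guardrail_tampering(sample_events, approved):
    print(alert)
```

Read-only calls such as `GetGuardrail` are ignored, so alerts fire only when a safeguard actually changes.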

Real-time detections enable security teams to respond immediately to governance bypasses or potential compromise attempts, rather than discovering these critical changes during periodic reviews. The platform correlates these events with broader cloud activity and identity behavior, helping analysts understand whether changes represent legitimate administrative actions or potential security incidents requiring immediate investigation and response.

Integration with Broader Security Operations

AI security doesn't exist in isolation. Cortex Cloud integrates AI-specific findings with broader cloud, application security and SOC operations, creating a unified security posture that spans traditional infrastructure and AI-powered applications.

When security analysts investigate incidents, they gain complete AI context that helps them understand whether an attack targets conventional workloads or exploits AI-specific vulnerabilities. This integration proves especially valuable during complex incidents where attackers move between traditional cloud resources and AI applications, as analysts can trace evidence across both domains without switching between separate tools.

The platform's correlation capabilities connect AI risks with infrastructure vulnerabilities, revealing attack scenarios that neither traditional cloud security nor standalone AI security tools would detect. For example, when a misconfigured IAM role provides excessive permissions to an AI service, the system correlates this infrastructure issue with AI-specific risks like potential training data exposure or model manipulation.
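The IAM example can be read as a join across three views that separate tools would normally keep apart: over-privileged roles, the roles AI services run as, and the data stores those services reach. The Python sketch below shows that join; every role, service and store name is hypothetical, and the output format is illustrative only.

```python
# Hypothetical posture data that separate scanners would normally report in isolation.
over_privileged_roles = {"rag-service-role"}
ai_service_roles = {"support-rag": "rag-service-role", "image-tagger": "tagger-role"}
reachable_stores = {"support-rag": ["kb-docs", "customer-db"], "image-tagger": ["images"]}
sensitive_stores = {"customer-db": ["PII", "PCI"]}

def correlate(over_priv, service_roles, reachable, sensitive):
    """Link a broad-role infrastructure finding to AI services that can reach sensitive data."""
    scenarios = []
    for service, role in service_roles.items():
        if role not in over_priv:
            continue
        for store in reachable.get(service, []):
            if store in sensitive:
                scenarios.append({
                    "service": service,
                    "role": role,
                    "store": store,
                    "data": sensitive[store],
                })
    return scenarios

print(correlate(over_privileged_roles, ai_service_roles, reachable_stores, sensitive_stores))
```

Neither input alone is alarming: an over-privileged role is a generic cloud finding, and an AI service reading a customer database may be intended. The combined scenario is what surfaces the training data exposure risk.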

When AI applications show signs of compromise or misconfiguration, the platform provides actionable remediation guidance that helps security teams respond quickly and effectively. Teams gain clear visibility into which AI applications might be affected, what data could be at risk, and specific steps to address AI-specific vulnerabilities. This guidance integrates with existing security workflows and incident response processes, enabling teams to take informed containment actions that address both AI-specific threats and traditional security concerns across the complete attack scenario.

Business Impact: From Risk Reduction to Innovation Enablement

Organizations implementing comprehensive AI security posture management can achieve significant business outcomes beyond risk reduction.

Accelerated AI-Driven Innovation

Clear security guardrails enable development teams to move faster with confidence. When AI teams understand which data they can use for training and which deployment patterns meet security requirements, they spend less time navigating security reviews and more time building innovative applications.

Regulatory Compliance

As AI regulations evolve globally, from the EU AI Act to sector-specific requirements, organizations with systematic AI security posture management can demonstrate proactive compliance. Detailed audit trails and control evidence help satisfy regulatory requirements with far less manual effort.

Risk-Informed Decision Making

CISOs gain the visibility needed to make informed decisions about AI initiatives. Instead of blanket restrictions that slow innovation, they can approve AI projects based on actual risk assessment and appropriate controls.

Incident Response Effectiveness

When AI-related security incidents occur, teams with comprehensive AI security posture management respond faster and more effectively. They understand which AI applications might be affected, what data could be compromised, and how to contain AI-specific attack vectors.

Learn More

The AI security challenge follows a familiar pattern from previous technology transitions — innovation moves faster than security frameworks can adapt. Organizations deploying AI applications often discover they lack fundamental visibility into their AI estate, leaving security teams to chase shadows while business stakeholders demand faster deployment.

Cortex Cloud AI-SPM breaks the reactive cycle, providing comprehensive AI-native security visibility from the start. Download the Cortex Cloud AI-SPM Datasheet to learn more.