There is a moment in every AI project that is both exhilarating and terrifying: Go Live.

The moment an AI application moves from a controlled development environment into production, the rules change. It is no longer interacting with test data and trusted engineers. It is interacting with the messy, unpredictable real world.

And in the real world, AI doesn’t stay still.

The Silent Killer: Model Drift

Traditional software is static; if you deploy code today, it will be the same code tomorrow. AI models are different. They experience “Drift.”

- Data Drift: The inputs change (e.g., new customer slang, shifting market trends).

- Model Drift: The model’s reasoning degrades or shifts over time.

As I mention in my video, too often organizations assume that because a model passed its pre-deployment checks, it will remain safe forever. But models start evolving the second they hit real-world conditions.

Real-World Attacks Happen in Real-Time

Beyond natural drift, production is where active attacks happen.

- A user might try a sophisticated “jailbreak,” also known as a prompt injection, to trick your customer service bot into refunding a non-existent order.

- A bad actor might try to reverse-engineer your model by flooding it with specific queries (Model Inversion).

- An agent might be tricked into executing a SQL injection through natural language.

If your security stops at the “Build” phase, you are defenseless against these shifts. Without runtime protection, you are essentially flying blind with some of your most powerful and sensitive systems.

Why Runtime is Non-Negotiable

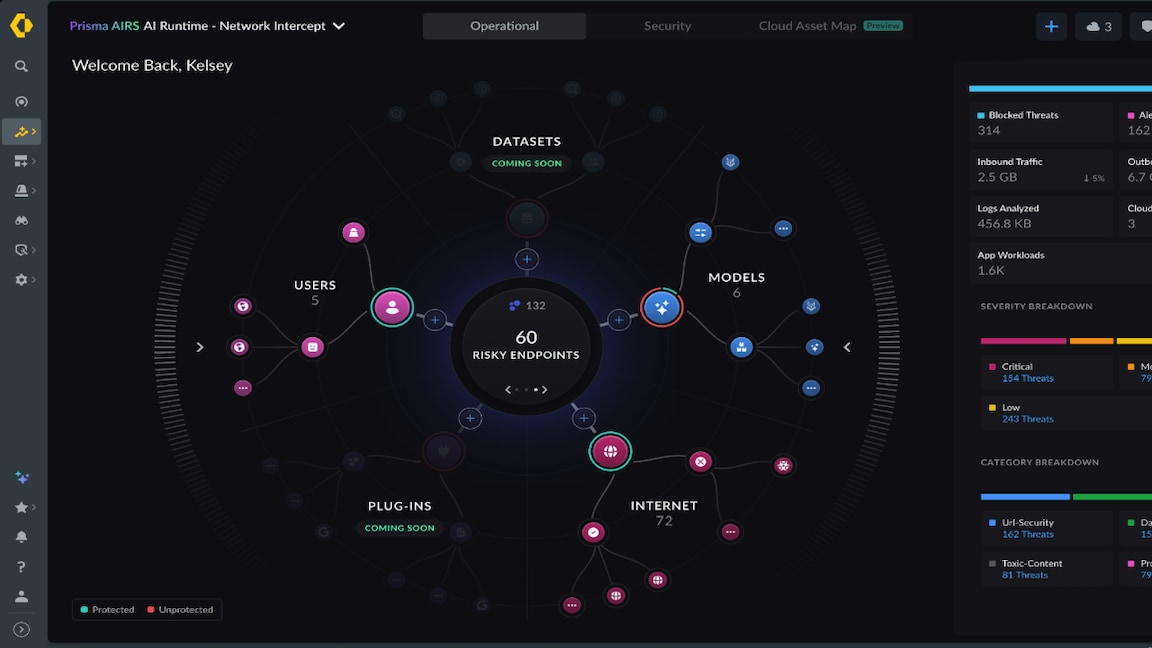

This is why Runtime Security is becoming the defining requirement for enterprise AI. You need a layer of defense that sits between the model and the user, monitoring every interaction in real-time.

With Prisma AIRS, we enforce this protection at the transaction level:

- Detecting when a prompt looks malicious (even if it’s phrased politely).

- Blocking responses that contain PII or sensitive IP before they leave the boundary.

- Stopping the model if it starts behaving erratically.

Security at the Speed of AI

You cannot rely on “logs” that you review next week. By then, the data is gone. You need instantaneous intervention.

Prisma AIRS delivers this continuous visibility. It gives you the confidence to put AI into the wild, knowing that even if the environment becomes unpredictable, your security controls remain absolute.

This is Part 4 of our Deploy Bravelyseries.

Up Next: Kelly Waldher on how secure AI is the bedrock of sustainable innovation.