Identity and access management (IAM) shapes every cloud resource interaction, from a business user viewing a document to an AI model conducting complex operations on vectorized data. Controlling access lies at the heart of cybersecurity, as underscored by the prevalence of IAM issues in Unit 42's Global Incident Response Report 2025, including multifactor authentication (MFA) gaps, excessive policy access, excessive permissions and password problems. And with machine and other nonhuman identities now outnumbering human actors, IAM is more complicated than ever.

Given the state of identity sprawl, you can’t realistically chase down every last entitlement and permission. Managing identity risk, as a result, means understanding which vulnerabilities or misconfigurations will lead attackers to the sensitive data that’s most valuable (to them) and damaging (for you).

In this article, we’ll:

- Review the main challenges in cloud identity security.

- Look at three common attack paths where gaps in identity governance are used to compromise data security.

- Show how you can address these threats by unifying your cloud security — from code to cloud to SOC.

The Cloud Identity Crisis: Questions Security Teams Struggle to Answer

Where Is Your Sensitive Data?

Given the cloud’s nature, data flows continuously between services, storage locations or even different providers. For example, teams regularly copy production data into temporary environments for testing, debugging or ad hoc analysis. Each new environment adds its own set of permissions to manage. And even when teams delete these environments, copied data can persist in forgotten snapshots or backup configurations.

Who Has Effective Access?

When a user accesses cloud resources, their permissions often originate from multiple sources, such as direct role assignments, group memberships or temporary role assumptions. These permissions stack and interact across services. For example, access to an EC2 instance might indirectly grant access to S3 buckets through instance profiles. Without ways to map these relationships, security teams can miss critical access paths.
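To make the idea of stacked permissions concrete, here is a minimal sketch of how effective access could be computed from several sources. The inventory dictionaries, the `Identity` class and the `INDIRECT_GRANTS` mapping are all hypothetical; in particular, treating "can launch an instance" as implying S3 read access is a modeling assumption standing in for an instance profile. The sketch also ignores explicit denies, conditions and permission boundaries, which a real evaluation would need to handle.

```python
from dataclasses import dataclass, field


@dataclass
class Identity:
    name: str
    direct: set[str] = field(default_factory=set)            # permissions attached directly
    groups: list[str] = field(default_factory=list)          # group memberships
    assumable_roles: list[str] = field(default_factory=list)  # roles the identity may assume


# Hypothetical inventory; a real one would be built from the provider's IAM APIs.
GROUP_PERMISSIONS = {"developers": {"ec2:DescribeInstances", "ec2:StartInstances"}}
ROLE_PERMISSIONS = {"ci-deployer": {"ec2:RunInstances", "iam:PassRole"}}
# Assumption: permissions gained indirectly once a resource is controlled,
# e.g. an EC2 instance whose instance profile can read an S3 bucket.
INDIRECT_GRANTS = {"ec2:RunInstances": {"s3:GetObject"}}


def effective_permissions(identity: Identity) -> set[str]:
    """Union direct, group and role permissions, then follow indirect grants."""
    perms = set(identity.direct)
    for group in identity.groups:
        perms |= GROUP_PERMISSIONS.get(group, set())
    for role in identity.assumable_roles:
        perms |= ROLE_PERMISSIONS.get(role, set())
    # Keep expanding until no new permissions appear (transitive closure).
    frontier = set(perms)
    while frontier:
        gained = set()
        for perm in frontier:
            gained |= INDIRECT_GRANTS.get(perm, set())
        frontier = gained - perms
        perms |= frontier
    return perms


alice = Identity(
    "alice",
    direct={"s3:ListAllMyBuckets"},
    groups=["developers"],
    assumable_roles=["ci-deployer"],
)
print(sorted(effective_permissions(alice)))
```

In this toy inventory, `alice` is never granted `s3:GetObject` directly, yet it appears in her effective set because of the role she can assume.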

What Is the Impact of AI on Identity Risk?

The drive toward AI adoption leads organizations to collect more data for training and inference and to broaden access — especially for machine identities such as inference endpoints and agents. In addition, sensitive training data becomes embedded in black box models, further obfuscating effective access.

Let’s now look at how these challenges manifest in real-world attack scenarios.

Attack 1: Ransomware via S3 Permissions

Problem

In a ransomware attack, an attacker gains access to internal data and threatens to delete, encrypt or exfiltrate sensitive information (such as customer PII) unless the attacked organization pays the ransom.

Attackers can often delete or encrypt data in cloud storage with only a few granted permissions, and in some cases those permissions don’t even have to be read, write or delete. We’ve covered a few such cases in our article about cloud ransomware, including deleting S3 encryption keys and changing bucket lifecycle rules so that objects are automatically deleted.

In another example, data encrypted with AWS Key Management Service (KMS) can be decrypted only with the key that was used to encrypt it. If an attacker has permission to schedule deletion of a KMS key, which might seem more innocuous than a read permission, they can make the data inaccessible. A security team that’s looking only at direct object-level access and is responsible for dozens of KMS keys at any given moment could easily miss this.
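As an illustration of why object-level reviews miss these cases, the sketch below flags identities that hold an action capable of making data unavailable while holding no object-level action at all. The action names are real IAM actions, but the two sets, the function name and the sample grants are assumptions chosen for this example, not an exhaustive or authoritative list.

```python
# Actions that can make data unavailable without reading, writing or deleting objects.
# Illustrative assumption: this list is not exhaustive.
DESTRUCTIVE_WITHOUT_OBJECT_ACCESS = {
    "kms:ScheduleKeyDeletion",
    "kms:DisableKey",
    "s3:PutLifecycleConfiguration",
}
# What a review focused on direct object access would typically look for.
OBJECT_LEVEL_ACTIONS = {"s3:GetObject", "s3:PutObject", "s3:DeleteObject"}


def hidden_ransomware_risk(granted: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return identities holding destructive actions but no object-level access."""
    findings = {}
    for identity, actions in granted.items():
        risky = actions & DESTRUCTIVE_WITHOUT_OBJECT_ACCESS
        if risky and not (actions & OBJECT_LEVEL_ACTIONS):
            findings[identity] = risky
    return findings


# Hypothetical grants for three identities.
grants = {
    "backup-bot": {"kms:ScheduleKeyDeletion", "kms:DescribeKey"},
    "analyst": {"s3:GetObject"},
    "ops": {"s3:PutLifecycleConfiguration", "s3:GetObject"},
}
print(hidden_ransomware_risk(grants))
```

Here only `backup-bot` is reported. `ops` is also risky, but it would already surface in an object-level review; the point of the sketch is the identity that would not.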

Prevention

To block these types of attacks, organizations need to be strict about permission hygiene.

Use Palo Alto Networks Cortex Cloud to:

- Understand what a specific set of permissions actually enables a human or nonhuman actor to do, including the context of which sensitive data can be impacted.

- Find ways to rightsize permissions — such as removing unused access or periodically reviewing broad-reaching permissions — while prioritizing entitlements that grant effective access to sensitive data.

Attack 2: RAG Database Poisoning

Problem

Retrieval-augmented generation (RAG) is used to ground large language models (LLMs) with up-to-date information, often by pulling from organization-specific knowledge bases. If an attacker can modify the source documents, they can manipulate the AI's outputs without directly accessing the model, with the goal of causing operational, reputational or legal damage.

For example, a (human) support agent with write access to customer documentation could insert subtle misinformation that gets propagated through customer-facing AI assistants. Or, a disgruntled employee working on data preparation could introduce biased training examples that skew model responses.

Traditional data security focuses on detecting regulated information such as credit card numbers or health records. However, biased training data wouldn’t be flagged by a classifier built to detect regulated data. RAG poisoning requires a deeper understanding of data lineage and access patterns, including identifying when a dataset is part of an LLM supply chain. As you can see in Figure 1, the “poisoned” data can also be introduced early in the ingestion pipeline with the damage only manifesting further down the line, making it difficult to track down its source.

Prevention

Preventing this type of attack requires a deeper view of business context and data usage patterns, while keeping AI-specific attacks in mind.

Use Cortex Cloud to:

- Understand who has access to data used in model training or inference pipelines.

- Map all ingestion paths into your retrieval databases, including automated syncs, manual uploads and indirect access through connected services.

- Monitor for unusual patterns in document modifications, especially changes to frequently retrieved content.
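The monitoring idea in the last bullet can be sketched in a few lines. The example below is a simplified assumption of how such a check might work, not a description of any product feature: it treats the most frequently retrieved documents as "hot" and flags edits to them by anyone outside a known set of editors. The function name, the log formats and the sample data are hypothetical.

```python
from collections import Counter


def flag_suspicious_edits(retrievals, edits, usual_editors, top_n=2):
    """Flag edits to the most-retrieved documents made by unexpected editors.

    retrievals:    list of document IDs, one entry per retrieval
    edits:         list of (document ID, editor) tuples
    usual_editors: dict mapping document ID to the set of expected editors
    """
    hot_docs = {doc for doc, _ in Counter(retrievals).most_common(top_n)}
    return [
        (doc, editor)
        for doc, editor in edits
        if doc in hot_docs and editor not in usual_editors.get(doc, set())
    ]


# Hypothetical retrieval log and edit log for a support knowledge base.
retrieval_log = ["refund-policy"] * 3 + ["pricing"] * 2 + ["changelog"]
edit_log = [
    ("refund-policy", "mallory"),
    ("refund-policy", "docs-team"),
    ("changelog", "mallory"),
]
expected = {"refund-policy": {"docs-team"}}
print(flag_suspicious_edits(retrieval_log, edit_log, expected))
```

A production check would likely weigh edit size, timing and content as well; this sketch only shows how retrieval frequency can be used to decide which modifications deserve attention first.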

Attack 3: Shadow Admin Privileges

Problem

Cloud environments make it deceptively easy to accumulate powerful administrative access. What starts as a legitimate need for temporary elevated permissions can evolve into persistent shadow admin privileges that expose sensitive data in unexpected ways.

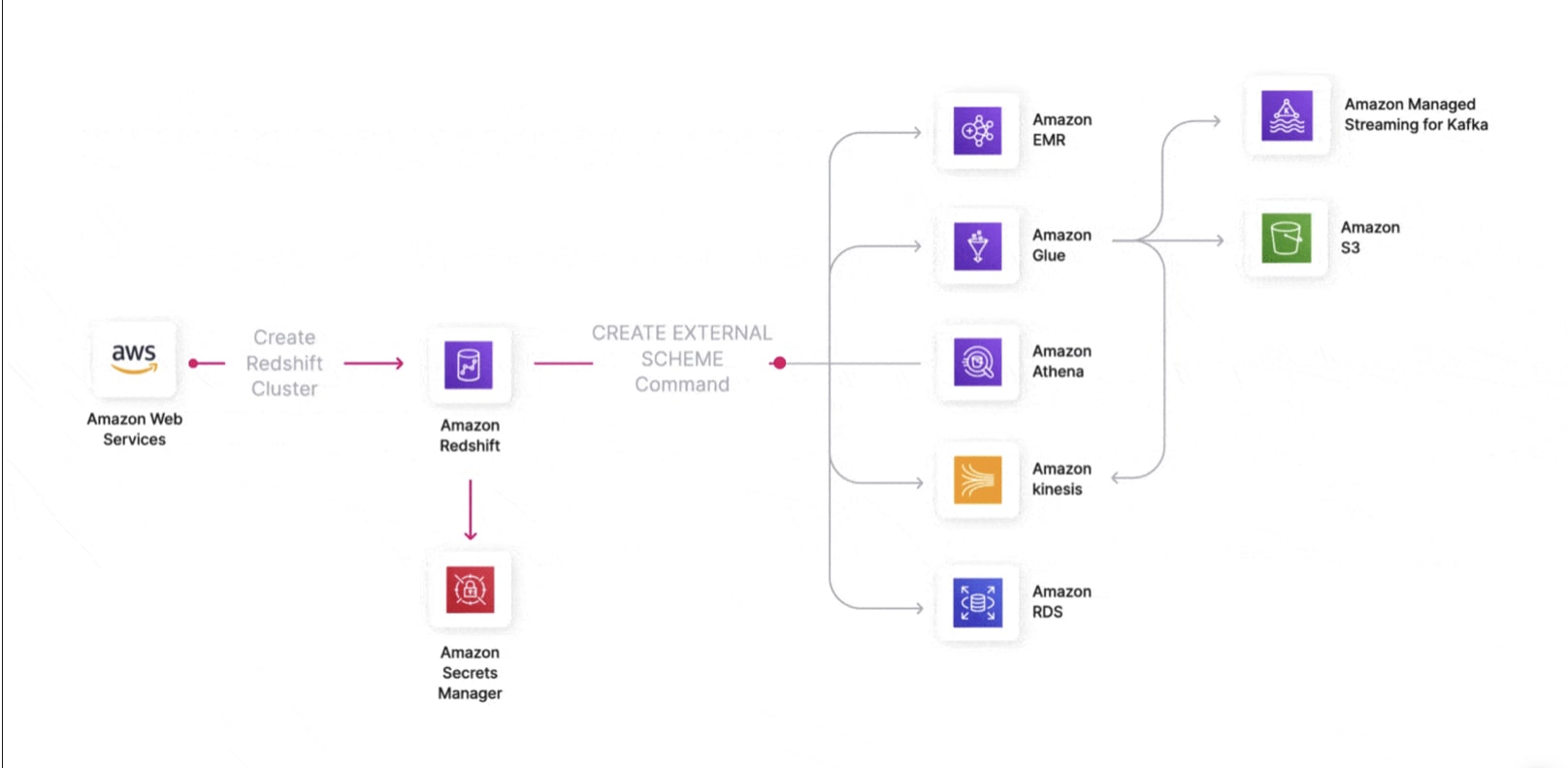

In an example we covered in our Redshift security guide, an engineer might be granted cluster administrator privileges for a migration project. However, the AWS managed policy attached to the default Redshift role ("AmazonRedshiftAllCommandsFullAccess") includes permissions that can extend far beyond the specific cluster being managed, such as the ability to:

- List and access any S3 bucket containing "Redshift" in its name, which can be used to perform reconnaissance ahead of an attack.

- Create external schemas to query data from other services like AWS Glue, effectively enabling the role holder to copy external records into connected services such as Amazon Athena.

- Fetch secrets to connect to RDS, Athena and Hive Metastore via AWS Secrets Manager.
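To see how far a name-based wildcard can reach, the sketch below matches a bucket inventory against an IAM-style resource pattern. The pattern and the bucket names are hypothetical, chosen to mirror the "Redshift in its name" case above rather than quoted from the actual policy. Python's `fnmatchcase` is used as a stand-in for IAM wildcard matching, which is a reasonable approximation for `*` and `?` but not an exact reimplementation.

```python
from fnmatch import fnmatchcase


def buckets_in_scope(resource_pattern: str, bucket_names: list[str]) -> list[str]:
    """Return bucket names whose ARN matches an IAM-style wildcard pattern."""
    return [
        name
        for name in bucket_names
        if fnmatchcase(f"arn:aws:s3:::{name}", resource_pattern)
    ]


# Hypothetical pattern and inventory for illustration only.
pattern = "arn:aws:s3:::*redshift*"
buckets = ["redshift-migration-tmp", "finance-redshift-exports", "customer-pii-archive"]
print(buckets_in_scope(pattern, buckets))
# prints ['redshift-migration-tmp', 'finance-redshift-exports']
```

The engineer in the example only needed the migration bucket, but a pattern like this one also reaches the finance exports, purely because of how the bucket was named.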

Prevention

Organizations should shift from managing individual permissions to understanding effective access across their cloud estate.

Use Cortex Cloud to:

- Map service connections to identify indirect paths to sensitive resources.

- Track permission usage patterns to find unnecessary elevated access.

- Generate least-privileged roles based on actual usage patterns.

- Monitor for suspicious permission combinations that could indicate shadow admin privileges.
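Generating a least-privileged role from usage comes down to comparing what was granted with what was actually exercised. The sketch below assumes you already have both sets for an identity (for example, from access logs); the function names, the sample actions and the cluster ARN are hypothetical. The output uses the standard IAM policy document layout, but the result is a starting point for review, not a policy to apply blindly.

```python
def rightsize(granted: set[str], used: set[str]) -> dict[str, list[str]]:
    """Split granted actions into those seen in use and those never exercised."""
    return {
        "keep": sorted(granted & used),
        "remove": sorted(granted - used),
    }


def to_policy(actions: list[str], resource: str) -> dict:
    """Wrap a list of actions in a minimal IAM policy document."""
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": actions, "Resource": resource}],
    }


# Hypothetical data: actions granted for a migration project vs. actions observed.
granted_actions = {
    "redshift:GetClusterCredentials",
    "s3:GetObject",
    "secretsmanager:GetSecretValue",
}
used_actions = {"redshift:GetClusterCredentials", "s3:GetObject"}

plan = rightsize(granted_actions, used_actions)
# Assumption: a single resource ARN is used for brevity; real policies
# usually need one statement per service and resource type.
policy = to_policy(plan["keep"], "arn:aws:redshift:us-east-1:111122223333:cluster:migration")
print(plan["remove"])
```

In this example, `secretsmanager:GetSecretValue` was granted but never used, so it is the first candidate for removal.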

Closing: Identity in Context

Like everything else in cloud security, the main challenge in managing identity risk is prioritization. No security team can track every last identity or permission granted. Instead, it should aim to understand the cloud topography and application context in which sensitive data can become exposed. Organizations can achieve this by answering questions such as:

- Which data assets are most sensitive or business-critical?

- How does this data flow through development, staging and production environments?

- What AI models and applications depend on this data?

- Which identities — human and machine — have effective access to these resources?

Rarely can these questions be answered in a vacuum. They require a unified view across identities, data and cloud infrastructure. To tackle these and other challenges with fragmented cloud security, Palo Alto Networks recently introduced Cortex Cloud, the world’s most complete enterprise-to-cloud SecOps platform.

Cortex Cloud lets you correlate risks — misconfigurations, vulnerabilities, overly permissive IAM roles, sensitive data exposures — to reveal exploitable attack paths. AI-powered prioritization groups related risks with a shared issue, enabling teams to remediate at scale instead of tackling risks one by one.

Cortex unifies both the user interface and the data model across different risk categories: CSPM, CIEM, DSPM, AI-SPM, KSPM, ASPM, CWP and vulnerability management. This allows security teams to approach permission and access views with a holistic picture of cloud environments. It breaks down silos between tools and teams, and multiplies the impact of security efforts through effective prioritization and integrated remediation workflows.

Learn more about cloud posture security with Cortex Cloud. And if you haven’t seen Cortex Cloud in action, schedule a demo today.