AI-powered coding assistants now play a central role in modern software development. Developers use them to speed up tasks, cut down on boilerplate, and automate routine code generation.

But with that speed comes a dangerous trade-off. The tools designed to accelerate innovation are degrading application security by embedding subtle yet serious vulnerabilities in software.

One study found that nearly half of the code snippets generated by five AI models contained bugs that attackers could exploit. A second study confirmed the risk: nearly one-third of the Python snippets and a quarter of the JavaScript snippets produced by GitHub Copilot had security flaws.

The problem goes beyond flawed output. AI tools also instill a false sense of confidence. In one user study, developers using AI assistance not only wrote significantly less secure code than those who worked unaided but also believed their insecure code was safe, a clear sign of automation bias.

The Dangerous Simplicity of AI-Generated Vulnerabilities

Developers can unintentionally create severe security gaps with seemingly benign prompts. Asking an AI assistant to generate a Kubernetes deployment for a web application with database access may, for example, return a functional but dangerously insecure configuration.

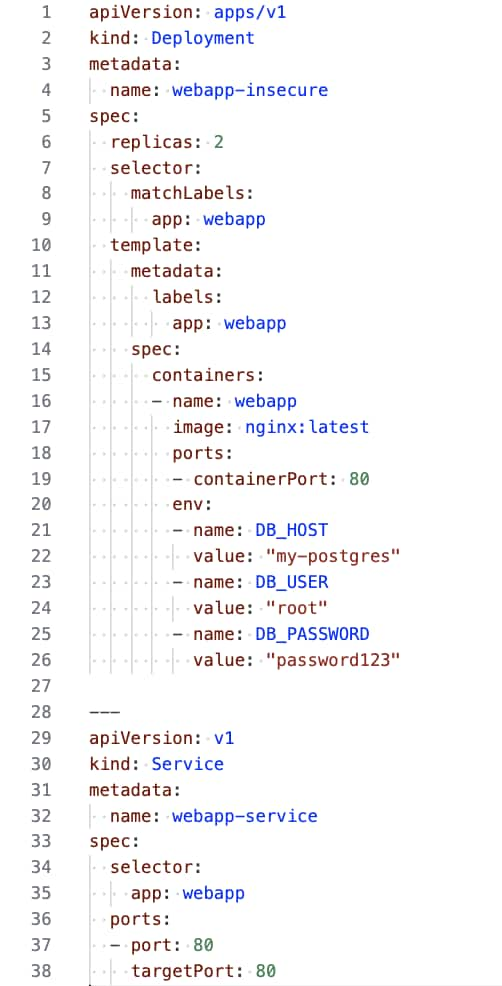

Anatomy of an Insecure AI-Generated Kubernetes Deployment

A typical AI response hardcodes secrets into the deployment file, as seen in figure 1. The generated configuration also omits baseline security practices, such as resource limits, health probes, and network policies. Without those controls, the application remains vulnerable to compromise and lateral movement within the cluster.
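The gaps in Figure 1 are mechanical enough to check automatically. The following Python sketch assumes the manifest has already been parsed into a dict (for example, by a YAML loader); the function name `lint_deployment` and the secret-name regex are hypothetical heuristics, not the rules of any specific tool. Network policies are separate `NetworkPolicy` objects, so this per-Deployment check does not cover them.

```python
import re
from typing import Any

# Hypothetical heuristic: env var names that usually hold credentials.
SECRET_NAME = re.compile(r"PASSWORD|SECRET|TOKEN|API_?KEY", re.IGNORECASE)


def lint_deployment(deployment: dict[str, Any]) -> list[str]:
    """Return findings for a Deployment manifest already parsed into a dict."""
    findings: list[str] = []
    pod_spec = deployment.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        for env in container.get("env", []):
            # A literal "value" (instead of "valueFrom" with a secretKeyRef)
            # means the credential sits in plain text in the manifest.
            if "value" in env and SECRET_NAME.search(env.get("name", "")):
                findings.append(f"{name}: hardcoded secret in env var {env['name']}")
        if not container.get("resources", {}).get("limits"):
            findings.append(f"{name}: no resource limits")
        for probe in ("livenessProbe", "readinessProbe"):
            if probe not in container:
                findings.append(f"{name}: missing {probe}")
    return findings


# Example resembling a typical AI-generated manifest (values are illustrative).
insecure = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [{
        "name": "web",
        "image": "example/web:latest",
        "env": [
            {"name": "DB_HOST", "value": "db.internal"},
            {"name": "DB_PASSWORD", "value": "hunter2"},
        ],
    }]}}},
}

for finding in lint_deployment(insecure):
    print(finding)
# web: hardcoded secret in env var DB_PASSWORD
# web: no resource limits
# web: missing livenessProbe
# web: missing readinessProbe
```

Flagging a literal `value` rather than a `valueFrom.secretKeyRef` reference is a deliberately crude heuristic; a real scanner would also inspect init containers, ConfigMaps, and the image itself.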

Overly Permissive by Default

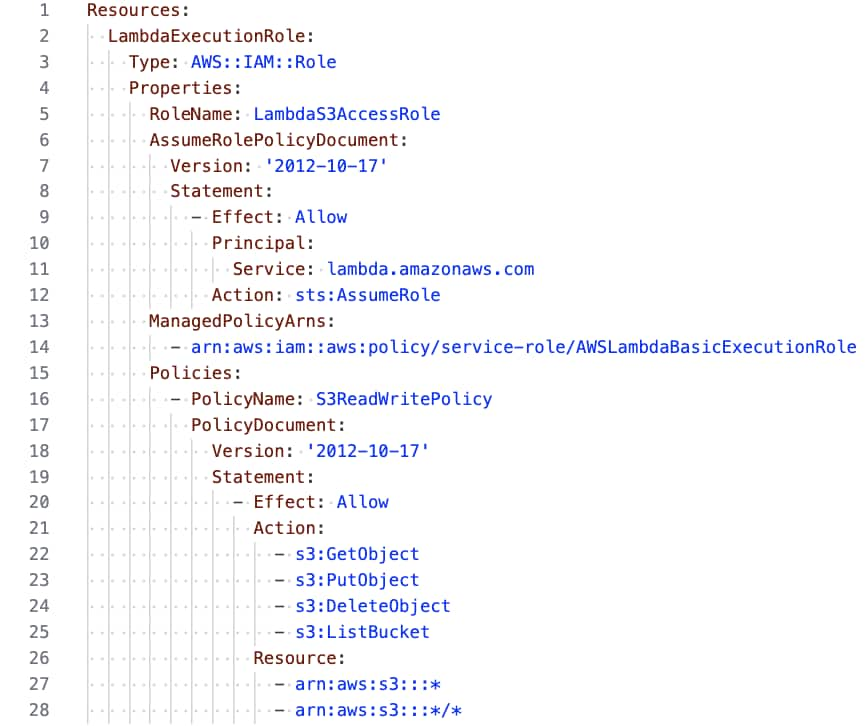

Cloud permissions show a similar pattern. A prompt like “Write a CloudFormation template that creates an IAM role for my Lambda function. The function needs to read and write to S3” may yield an overly permissive policy. The AI might return a template that grants unrestricted S3 access across all buckets, far more access than any Lambda function should have.

Here, a generic prompt with no security boundaries produces generic output: a template that works but ignores the principle of least privilege, possibly reflecting insecure patterns in the model's training data.
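A similar check applies to the IAM policy document inside the generated template (in CloudFormation, it sits under the role's `Policies[].PolicyDocument`). This minimal Python sketch flags `Allow` statements with service-wide wildcard actions or a `*` resource; the function names and the bucket name `my-app-bucket` are hypothetical.

```python
from typing import Any


def as_list(value: Any) -> list:
    # IAM accepts either a single value or a list for Statement, Action, Resource.
    return value if isinstance(value, list) else [value]


def overly_permissive(policy: dict[str, Any]) -> list[str]:
    """Flag Allow statements with service-wide wildcard actions or '*' resources."""
    problems = []
    for i, stmt in enumerate(as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                problems.append(f"statement {i}: wildcard action {action}")
        for resource in as_list(stmt.get("Resource", [])):
            if resource == "*":
                problems.append(f"statement {i}: applies to all resources")
    return problems


# The kind of policy a generic prompt tends to produce.
generated = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}],
}

# A least-privilege alternative scoped to one (hypothetical) bucket.
least_privilege = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["s3:GetObject", "s3:PutObject"],
        "Resource": "arn:aws:s3:::my-app-bucket/*",
    }],
}

print(overly_permissive(generated))
# ['statement 0: wildcard action s3:*', 'statement 0: applies to all resources']
print(overly_permissive(least_privilege))  # []
```

The least-privilege variant grants only `s3:GetObject` and `s3:PutObject` on objects in a single bucket. If the function also needs to list that bucket, `s3:ListBucket` has to be granted on the bucket ARN itself rather than on the `/*` object path.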

More Code, More Bloat, More Attack Surface

An AI assistant's main value is speed, but that velocity often comes at the cost of code quality. The result isn’t just technical debt but a larger, more exploitable attack surface. Bloated code is harder to troubleshoot and discourages developers from refactoring for performance and security. Dead functions, unused libraries, and unvalidated dependencies accumulate, leaving behind forgotten entry points for attackers. Every vulnerable dependency an AI assistant pulls in instantly adds another way for an attacker to gain access.

Research shows that AI assistants frequently suggest outdated libraries with known vulnerabilities, or hallucinate packages that don't exist at all. Attackers can then register a malicious package under the hallucinated name, tricking developers into installing it.
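One lightweight defense against hallucinated or unreviewed packages is to check every requested dependency against a vetted allowlist before installation. This Python sketch assumes a hypothetical internal allowlist and simple requirements-style lines; it normalizes names the way PEP 503 does, so `Flask` and `flask` match. The package name `hallucinated-helper` is made up for the example.

```python
import re

REQUIREMENT_NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*")


def normalize(name: str) -> str:
    # PEP 503: names compare case-insensitively, and runs of "-", "_", "." are equivalent.
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(requirements: list[str], allowlist: set[str]) -> list[str]:
    """Return requested package names that are not on the vetted allowlist."""
    vetted = {normalize(n) for n in allowlist}
    flagged = []
    for line in requirements:
        line = line.split("#", 1)[0].strip()  # strip comments; blank lines won't match below
        match = REQUIREMENT_NAME.match(line)
        if match and normalize(match.group(0)) not in vetted:
            flagged.append(match.group(0))
    return flagged


# Hypothetical allowlist and an assistant-suggested requirements file.
allowlist = {"requests", "flask"}
reqs = [
    "requests==2.32.3",
    "Flask>=3.0",
    "hallucinated-helper==0.1  # suggested by an AI assistant",
]
print(unvetted(reqs, allowlist))  # ['hallucinated-helper']
```

Passing the allowlist check only means someone reviewed the package, not that it is free of vulnerabilities, so it complements rather than replaces a dependency vulnerability scan.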

Why AI Lacks Security Context

Secure coding depends on context. It requires an understanding of the application’s threat model, data sensitivity, and architectural weak points, and AI assistants consistently fail to account for any of it. The underlying models are trained on vast, unsanitized datasets of public code from sources like GitHub, which are themselves full of insecure examples.

The spread of insecure patterns predates AI. Developers have long turned to sites like Stack Overflow for quick solutions, and when a post included vulnerable code, the snippet often made its way into countless production systems. AI assistants automate that problem at scale. Instead of one developer copying one insecure method, a model that learned the flawed logic can reproduce it for every developer who prompts it.

A model might, for instance, generate a database query by concatenating user input into the SQL string, the classic root cause of SQL injection vulnerabilities, simply because that pattern appears frequently in its training data.
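The difference between a concatenated query and the safe alternative fits in a few lines. This Python sketch uses the standard-library `sqlite3` module with an in-memory database; the table, the function names, and the payload are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])


def find_user_unsafe(name: str) -> list:
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str) -> list:
    # Parameterized: the driver binds `name` as a value, never as SQL syntax.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


payload = "nobody' OR '1'='1"
print(find_user_unsafe(payload))  # the injected OR clause matches every row
print(find_user_safe(payload))    # []
```

The parameterized version works because the `?` placeholder makes the driver treat the input strictly as data, so the quote characters in the payload never reach the SQL parser as syntax.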

The measured impact is significant. In the user study on automation bias mentioned earlier, 36% of participants using an AI assistant introduced a SQL injection vulnerability, compared to just 7% of the control group. AI models simply lack the situational awareness to apply design-level security principles like least privilege or context-specific input validation.

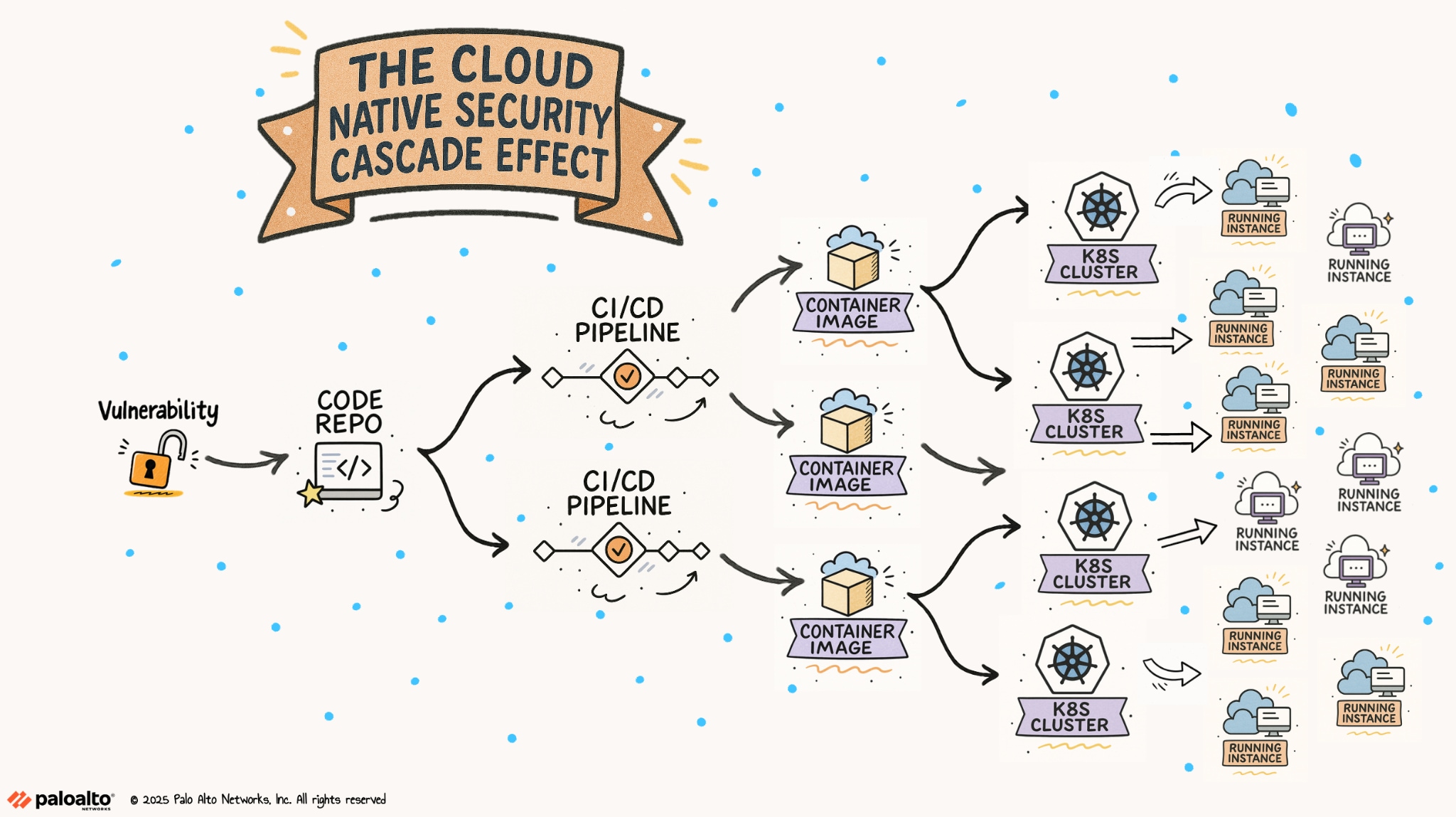

The Cloud-Native Cascade Effect

In cloud-native development, a single artifact serves as the blueprint for deploying software at scale, so one security misconfiguration embedded in that artifact can cascade across multiple environments. If a container image includes a vulnerable function, the orchestrator may launch hundreds of containers from it, each carrying the same flaw. An infrastructure-as-code (IaC) template with overly permissive policies can create misconfigured cloud resources across accounts and regions.

Modern CI/CD pipelines amplify this cascade effect by automatically building and deploying these artifacts multiple times a day. AI coding assistants compound the risk by multiplying the volume of generated code and templates.

Building Defense in Depth for AI-Assisted Development

Abandoning AI assistants isn’t an option. Instead, security controls must evolve to meet three risks that traditional approaches weren’t built to address:

- Volume and velocity. AI-assisted development outpaces manual review. Developers generate code faster, but security teams remain bound by static review processes.

- Automation bias. Developers often overlook flaws in AI-generated code. Security tooling must catch what they miss, especially misconfigurations in cloud infrastructure and access policies.

- Recurring flawed patterns. AI models replicate the same insecure constructs across projects. Effective tooling should recognize and correlate these recurring flaws across repositories.
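Correlating recurring flaws across repositories can start with something as simple as fingerprinting each finding. This Python sketch assumes findings arrive as (repo, rule, snippet) records from some scanner; the `Finding` type, the rule IDs, and the whitespace-only normalization are hypothetical choices for illustration.

```python
import hashlib
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    repo: str
    rule_id: str  # hypothetical scanner rule identifier
    snippet: str  # the offending code or config fragment


def fingerprint(f: Finding) -> str:
    # Collapse whitespace so trivially reformatted copies hash the same.
    normalized = re.sub(r"\s+", " ", f.snippet).strip()
    return hashlib.sha256(f"{f.rule_id}|{normalized}".encode()).hexdigest()[:12]


def recurring(findings: list[Finding], min_repos: int = 2) -> dict[str, set[str]]:
    """Map each fingerprint seen in at least `min_repos` repos to those repos."""
    repos_by_fp: dict[str, set[str]] = defaultdict(set)
    for f in findings:
        repos_by_fp[fingerprint(f)].add(f.repo)
    return {fp: repos for fp, repos in repos_by_fp.items() if len(repos) >= min_repos}


findings = [
    Finding("billing", "sql-concat", 'query = "SELECT * FROM t WHERE id = " + uid'),
    Finding("orders", "sql-concat", 'query  =  "SELECT * FROM t WHERE id = "  +  uid'),
    Finding("auth", "hardcoded-secret", 'DB_PASSWORD = "hunter2"'),
]
for fp, repos in recurring(findings).items():
    print(fp, sorted(repos))
```

Real tooling would normalize more aggressively (for example, by parsing the code), but even this crude fingerprint surfaces the same AI-suggested snippet landing in several repositories.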

Secure coding standards must adapt to account for AI’s tendency to repeat insecure patterns. Human oversight remains essential, especially at architectural decision points and during reviews of production-bound code. Training programs should teach developers to embed security directly in their prompts. Shifting security left now means prompting with care.

A weak prompt might say, “Create a Terraform backend configuration.” A strong prompt adds essential guardrails: “Never embed secrets, API keys, or passwords in configuration files. Use environment variables to protect sensitive values.”
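Teams that want every prompt to carry the same guardrails can template them instead of relying on memory. This Python sketch is hypothetical: the `GUARDRAILS` tuple reuses the rules from the strong prompt plus a least-privilege rule, and `secure_prompt` simply appends them to whatever task a developer types.

```python
# Hypothetical baseline guardrails; adapt them to your own coding standards.
GUARDRAILS = (
    "Never embed secrets, API keys, or passwords in configuration files.",
    "Use environment variables to protect sensitive values.",
    "Apply least privilege to any IAM role or policy you create.",
)


def secure_prompt(task: str, extra: tuple[str, ...] = ()) -> str:
    """Append the baseline guardrails (plus any task-specific ones) to a prompt."""
    rules = "\n".join(f"- {rule}" for rule in (*GUARDRAILS, *extra))
    return f"{task.strip()}\n\nSecurity requirements:\n{rules}"


print(secure_prompt("Create a Terraform backend configuration."))
```

Centralizing the guardrails means a security team can update them in one place, while developers still control the task-specific part of the prompt.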

Adapting AppSec for the Age of AI with Cortex Cloud

Updating secure coding standards and training developers in prompt engineering are essential first steps, but they depend on manual oversight and compliance, efforts that can’t scale to match the speed and volume of AI-generated code. The next evolution of AppSec must operate on a platform that automates enforcement and provides the intelligent context AI tools lack.

Cortex Cloud delivers exactly that — an integrated, automated approach that reshapes how organizations secure the full software development lifecycle.

1. Counter the AI's Context Blindness

The AI Challenge: AI assistants reproduce flawed patterns because they lack context. They don't know if an S3 bucket holds public web assets or regulated financial data, so they generate generic, often insecure, configurations.

The Cortex Cloud Solution: Cortex Cloud unifies signals from code, cloud infrastructure, and runtime operations to supply the context AI assistants lack.

- Data-Aware Security: Through its integrated Data Security Posture Management (DSPM), Cortex Cloud knows where your sensitive data resides. When an AI assistant generates an overly permissive IAM role, Cortex Cloud doesn't just see a misconfiguration; it sees a critical risk to an application with access to sensitive customer PII, and it prioritizes the issue accordingly.

- Attack Path Analysis: Alerts are enriched with context from connected systems. A single AI-generated code flaw can be traced to a live workload and an overly permissive identity, revealing a complete attack path. By correlating inputs from modules like cloud security posture management (CSPM), DSPM, and runtime monitoring with code-level security issues, Cortex Cloud exposes risks that siloed tools miss.
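At its core, attack path analysis is a graph search. The Python sketch below is not Cortex Cloud's implementation; it is a toy illustration that assumes a hypothetical asset graph in which an edge means “can reach or act on,” and it enumerates every path from an AI-introduced code flaw to a data store marked sensitive.

```python
from collections import deque

# Hypothetical asset graph: code flaw -> workload -> identity -> data stores.
EDGES = {
    "flaw:sqli-in-orders-api": ["workload:orders-pod"],
    "workload:orders-pod": ["identity:orders-role"],
    "identity:orders-role": ["data:customer-pii-bucket", "data:public-assets"],
}
SENSITIVE = {"data:customer-pii-bucket"}  # e.g. as classified by a data-discovery tool


def attack_paths(start: str, edges: dict[str, list[str]], targets: set[str]) -> list[list[str]]:
    """Breadth-first search for every simple path from start to a sensitive target."""
    paths = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in targets:
            paths.append(path)
            continue
        for nxt in edges.get(node, []):
            if nxt not in path:  # skip cycles
                queue.append(path + [nxt])
    return paths


for p in attack_paths("flaw:sqli-in-orders-api", EDGES, SENSITIVE):
    print(" -> ".join(p))
```

Enumerating every simple path grows quickly on large graphs, so production systems typically prune by risk or cap path length; this toy version only skips cycles. Note that the path to `data:public-assets` is ignored because that store is not marked sensitive.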

2. Prevent Automation Bias at the Source

The AI Challenge: Developers often trust AI output too readily. Flawed code enters the pipeline and spreads unchecked across production environments.

The Solution: Cortex Cloud embeds automated, expert oversight directly into developer workflows.

- Intelligent Guardrails in the CI/CD Pipeline: Cortex Cloud integrates directly with development pipelines. It can block pull requests or fail builds based on policy violations before flawed code spreads.

- Fixing Flaws Before They Merge: When AI-generated code introduces a misconfiguration, Cortex Cloud can autogenerate a pull request with secure code recommendations. Developers learn secure patterns while maintaining velocity.
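The block-on-violation behavior can be pictured as a small gate that turns scan findings into a build exit code. This Python sketch is hypothetical and is not Cortex Cloud's pipeline integration: the findings format, the severity names, and the `gate` function are assumptions for illustration.

```python
import sys

# Hypothetical severity scale; unknown severities rank below "low".
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(findings: list[dict], fail_at: str = "high") -> int:
    """Return 1 if any finding meets or exceeds the threshold, else 0."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK.get(f["severity"], 0) >= threshold]
    for f in blocking:
        print(f"BLOCKING {f['severity'].upper()}: {f['rule']} in {f['file']}", file=sys.stderr)
    return 1 if blocking else 0


# Hypothetical findings from a scan of an AI-generated change.
sample = [
    {"rule": "hardcoded-secret", "severity": "critical", "file": "k8s/deploy.yaml"},
    {"rule": "missing-probe", "severity": "low", "file": "k8s/deploy.yaml"},
]
exit_code = gate(sample)  # a CI step would call sys.exit(exit_code)
print(exit_code)  # 1
```

A CI step would pass the returned code to `sys.exit()`, so any non-zero result fails the job and blocks the merge; low-severity findings still surface in reports without stopping the pipeline.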

3. Tame Volume and Velocity by Prioritizing with Runtime Intelligence

The AI Challenge: The volume and velocity of AI-generated code overwhelm security teams with a torrent of alerts, making it nearly impossible to distinguish real threats from theoretical risks.

The Solution: Cortex Cloud prioritizes vulnerabilities using runtime signals within a unified platform that merges a leading cloud-native application protection platform (CNAPP) with an AI-driven security operations center (SOC) platform.

- Runtime-Informed Risk: Imagine your cloud workload security agent detects an active exploit targeting a specific Java library. Cortex Cloud uses software composition analysis to identify every repo where the vulnerable library was introduced, enabling proactive patching.

- Deprioritizing Noise: If AI-generated code contains a vulnerability in a component that never loads into memory and has no network exposure, Cortex Cloud deprioritizes it. Signal-driven filtering frees teams to focus on what matters.
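Both runtime-informed behaviors reduce to a lookup and a ranking rule. This Python sketch is an assumption-laden illustration rather than Cortex Cloud's scoring: the `Vuln` fields, the four-level `priority` function, the CVE placeholders, and the repository inventory are all hypothetical, and the `loaded`, `exposed`, and `exploited` flags stand in for signals a runtime agent might report.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    cve: str         # placeholder identifiers in the example below
    library: str
    loaded: bool     # runtime signal: library is loaded into memory
    exposed: bool    # runtime signal: workload is reachable from the network
    exploited: bool  # runtime signal: an active exploit has been observed


def priority(v: Vuln) -> int:
    """Hypothetical four-level score from 0 to 3; higher means more urgent."""
    if v.exploited:
        return 3
    if v.loaded and v.exposed:
        return 2
    if v.loaded or v.exposed:
        return 1
    return 0  # never loaded and not exposed: deprioritize


def repos_using(library: str, inventory: dict[str, set[str]]) -> list[str]:
    """SCA-style lookup: every repo whose dependencies include the library."""
    return sorted(repo for repo, deps in inventory.items() if library in deps)


# Example inventory mapping repositories to their Java dependencies.
inventory = {
    "payments": {"log4j-core", "jackson-databind"},
    "search": {"lucene-core"},
    "reports": {"log4j-core"},
}
vulns = [
    Vuln("CVE-EXAMPLE-2", "lucene-core", loaded=False, exposed=False, exploited=False),
    Vuln("CVE-EXAMPLE-1", "log4j-core", loaded=True, exposed=True, exploited=True),
]

print(repos_using("log4j-core", inventory))  # ['payments', 'reports']
for v in sorted(vulns, key=priority, reverse=True):
    print(v.cve, priority(v))
```

With this rule, a vulnerability under active exploit always outranks one that is merely loaded and exposed, and a component that is never loaded and never exposed drops to the bottom of the queue.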

With this approach, security becomes a catalyst for innovation rather than a constraint. Cortex Cloud empowers developers to use AI assistants with confidence, backed by intelligent automation that fills the gaps AI tools leave behind.

Learn More

Have you seen what Cortex Cloud can do for you? Allow us to give you a personalized demo.