What Is Containerization?

Containerization is a lightweight virtualization method that packages applications and their dependencies into self-contained units called containers. These containers run on a shared host operating system, providing isolation and consistency across different environments. Containerization enables efficient resource usage, rapid deployment, and easy scaling. Popular tools like Docker and Kubernetes facilitate creating, managing, and orchestrating containers. This technology streamlines development, testing, and deployment by reducing conflicts and ensuring that containerized applications run consistently, regardless of the underlying infrastructure.

Containerization has become an essential part of modern software development practices, particularly in microservices architectures and cloud-native applications.

Why Is Containerization Important?

In recent annual surveys by the Cloud Native Computing Foundation (CNCF), an average of 90% of organizations report using containers in production. That share climbs to 95% when proof of concept (PoC), test, and development environments are included.

Containers have arrived. They’ve long been helping DevOps teams to scale and create unique services, eliminating the need for dedicated servers and operating systems. Using containers for microservices allows applications to scale on a smaller infrastructure footprint, whether in on-premises data centers or public cloud environments. The containerization approach allows DevOps teams to quickly expand without significant resource constraints, making organizations more agile while enabling isolated testing and preventing interference with other essential services.

Adopting container technology dramatically improves the application lifecycle, from inception to production deployment. But containers aren’t just an enabling technology for cloud-native applications. Containers are a cornerstone technology — and for reasons that closely align with the principles of cloud native.

Microservices Architecture

Containers are inherently suited for microservices. Each microservice can be developed, deployed, and scaled independently, offering greater agility and resilience.

Portability

Containers encapsulate an application and its dependencies, ensuring that it runs the same regardless of where it’s deployed.

Platform Neutrality

Containers enable applications to run seamlessly across various environments and operating systems, reducing compatibility issues and simplifying deployment processes.

Scalability

Containers can be easily orchestrated using tools like Kubernetes, which automates the deployment, scaling, and management of application containers.
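As one illustration of what automated scaling can look like, the sketch below reproduces the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, parameter names, and the default replica bounds are assumptions made for this example, and the real autoscaler also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return the replica count suggested by the HPA-style ratio formula.

    min_replicas and max_replicas are hypothetical defaults for this sketch.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by how far the observed metric is from the target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Four replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

With four replicas running at 90% average CPU against a 60% target, the formula suggests scaling out to six replicas. When load drops to 30%, it suggests scaling in to two.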

Immutability

Container images don't change once built. Any update ships as a new image rather than as a modification to a running container, which ensures consistent behavior across environments, simplifies rollbacks, enhances security, and reduces deployment-related errors.

Isolation and Security

While not as isolated as VMs, containers do provide a level of process and file system isolation. Security can be further enhanced using specialized tools and best practices tailored for containerized environments.

Containers: A Modern Contender to VMs

Virtual machines (VMs) have been the bedrock of enterprise computing, providing a dependable way to run multiple operating systems on a single hardware host while offering reliable isolation and security features. VMs have been ubiquitous since the early 2000s, and organizations continue to use them across their IT infrastructure, from data centers to cloud services.

Containers gained prominence largely in the last decade as organizations pivoted toward DevOps and agile methodologies. An essential component of modern application architecture, containers challenge the long-standing dominance of VMs in many use cases.

Originating from OS-level virtualization, containers encapsulate an application and its dependencies into a single, portable unit. They offer advantages in resource efficiency, scalability, and portability, making them a popular choice for cloud-native applications and microservices architectures.

Both VM and container technologies now coexist, often complementing each other as enterprises navigate the evolving landscape of application deployment and management.

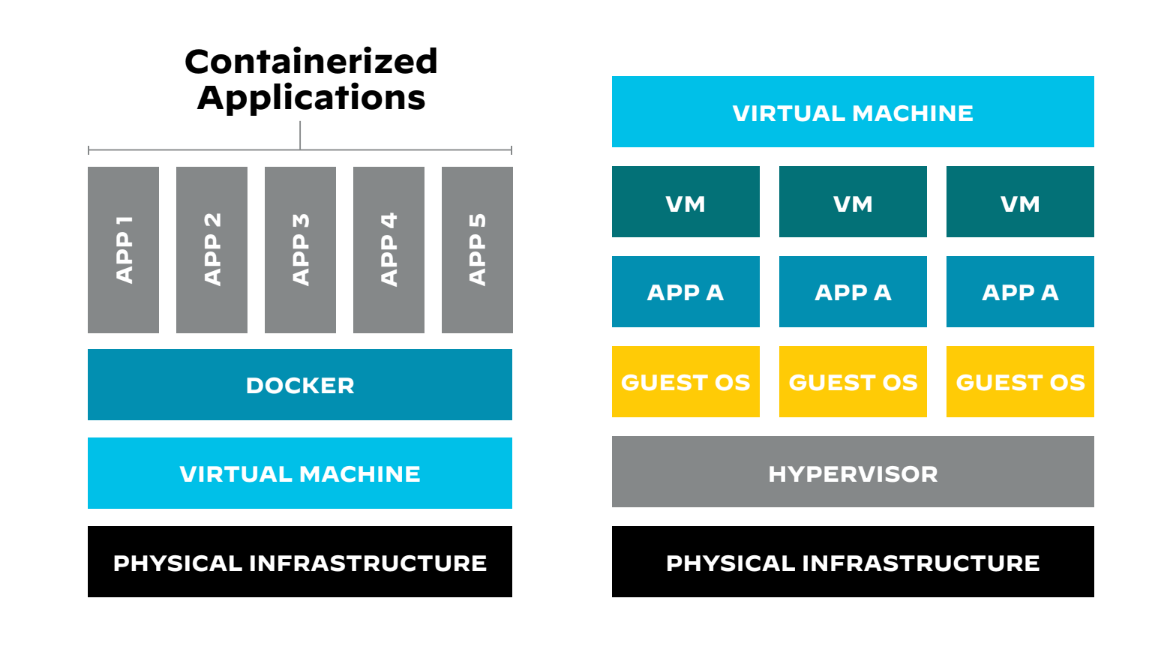

Figure 1: Differences between containerized and virtual machine applications

Choosing Between Containers and Virtual Machines

The choice between containerized applications and virtual machines (VMs) remains a strategic decision. Both technologies offer advantages and limitations, impacting factors such as performance, security, scalability, and operational complexity.

Containers, built on OS-level virtualization, offer superior resource efficiency and faster startup times. Because they’re modular, easily distributed, elastic, and largely platform agnostic, they greatly reduce the friction of managing full virtual machines. They excel in cloud-native applications and microservices, facilitated by orchestration tools like Kubernetes. Their process-level isolation, though, poses security challenges, albeit with a smaller attack surface.

VMs operate on hardware-level virtualization, running a full guest OS. They offer stronger security through better isolation but consume more resources and have a larger attack surface. VMs are better suited for monolithic applications and come with mature, yet less flexible, management tools like VMware vSphere.

Figure 1 shows a physical infrastructure that includes two virtual machines and three containerized applications. Each virtual machine runs its own guest operating system, whereas the containerized applications all share the same host operating system. Because containers can run multiple isolated applications on a single physical server without a separate guest OS for each one, they streamline infrastructure management.

Through higher resource utilization and open-source options, containers reduce hardware and software costs. VMs often require proprietary software and more hardware, increasing operational costs.

Organizations should align their technology choice with their needs, goals, existing infrastructure, and security considerations.

| Containers | VMs |
| --- | --- |
| Lightweight resource consumption | Heavyweight resource consumption |
| Native performance | Limited performance based on hardware |
| All containers share the host OS | Each VM runs its own OS |
| OS virtualization | Hardware-level virtualization |
| Startup time is rapid (milliseconds) | Startup time is slow (minutes) |
| Requires less memory space | Requires more memory space (esp. OS) |
| Process-level isolation and potentially less secure | Fully isolated and adds more security (esp. hypervisor) |

Table 1: Advantages and disadvantages of containers and virtual machines
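To make the memory rows of the comparison above concrete, here is a rough back-of-the-envelope sketch. It assumes a simplified model in which every VM carries its own guest OS while containers share one host OS. All function names and all megabyte figures are hypothetical and chosen only for illustration, not measured values.

```python
def vm_memory_mb(app_count: int, app_mb: int, guest_os_mb: int,
                 hypervisor_mb: int) -> int:
    """Total memory when each application runs in its own VM.

    Every VM pays for a full guest OS on top of the application itself.
    """
    return hypervisor_mb + app_count * (app_mb + guest_os_mb)


def container_memory_mb(app_count: int, app_mb: int, host_os_mb: int) -> int:
    """Total memory when applications run as containers on one shared host OS."""
    return host_os_mb + app_count * app_mb


if __name__ == "__main__":
    # Hypothetical figures: three 512 MB applications, 1024 MB per OS,
    # 512 MB of hypervisor overhead.
    print(vm_memory_mb(3, app_mb=512, guest_os_mb=1024, hypervisor_mb=512))
    print(container_memory_mb(3, app_mb=512, host_os_mb=1024))
```

Under these assumed numbers the VM layout needs 5120 MB and the container layout 2560 MB. The gap widens with every additional application, because only the VM model repeats the operating system cost per application.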

To Container or Not to Container: Moving Applications to the Cloud

Containerization has revolutionized the way software applications are developed, deployed, and managed, offering a lightweight and portable solution for packaging and running applications consistently across different environments.

But among the "5 Rs" of cloud migration — rehost, refactor, revise, rebuild, and replace — not all involve container technologies.

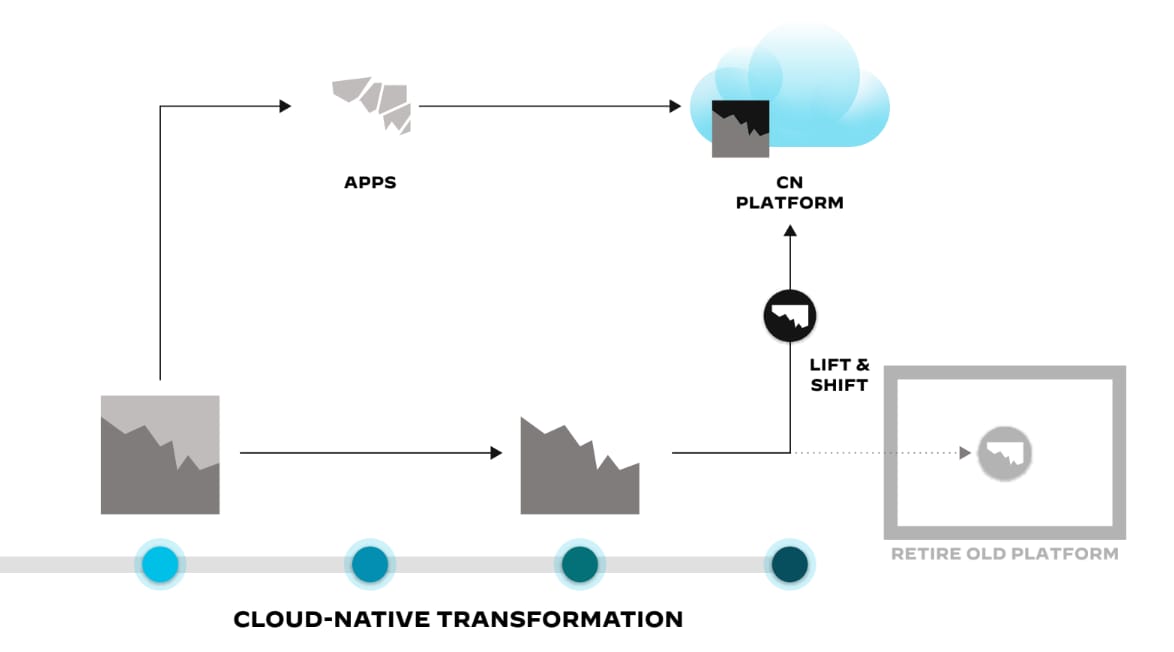

Figure 2: The three main approaches to a cloud-native transformation, as well as two options for retiring old platforms

Architecture and Migration

Containers are integral to cloud migration and application management strategies, allowing organizations to benefit from cloud-native tools and methodologies. But the decision-making process surrounding application architecture, technology, and cloud migration methods varies for each organization.

Assessing Application Architecture and Technology

Organizations need to evaluate their existing applications, infrastructure, and technology stacks to understand their current state and identify limitations, opportunities, and compatibility with cloud services. This assessment will help determine the necessary changes, optimizations, and architectural patterns they should adopt for a successful migration.

Choosing a Cloud Migration Method

After assessing the application architecture and technology, organizations can choose the most suitable migration method based on their requirements, budget, timeline, and risk tolerance. Their chosen approach — whether to rehost, refactor, revise, rebuild, or replace — will accommodate factors that include the complexity of their existing applications, the desired level of cloud integration, and their overall cloud strategy.

Rehost: The “Lift and Shift” Approach (IaaS)

Rehosting an application involves minimal changes to the existing application and focuses on moving it as-is to the cloud, which is how rehosting became known as lift and shift. Organizations capitalize on speed and cost-effectiveness by moving their business application to a new hardware environment without altering its architecture. The trade-off comes in the form of maintenance costs, as the application doesn't become cloud-native in the lift-and-shift process.

DRAWBACK: While containers can be used in a rehost strategy, their impact is less transformative compared to the refactor, revise, and rebuild strategies.

Refactor: Embracing Platform as a Service (PaaS)

Refactoring, or repackaging, maintains an application’s existing architecture and involves only minor changes to its code and configuration. Containers offer a way to encapsulate the application and its dependencies, making it easier to move and manage. This also allows organizations to take advantage of cloud-native features, especially through the reuse of software components and development languages.

DRAWBACK: Porting an application to the cloud without making significant architectural changes can carry over constraints from the existing framework and leave cloud capabilities underutilized.

Revise: Modifying for Cloud Compatibility

The revise approach requires significant changes to the application's architecture, transforming it into a cloud-native or cloud-optimized solution. Organizations adapt existing noncloud code so that it can use cloud-native development tools and runtime efficiencies. While the upfront development costs can be significant, the payoff comes in the form of enhanced performance and scalability, ultimately maximizing the benefits of cloud services.

Containers contribute substantially to the revise approach, offering a level of abstraction that allows developers to focus on application logic.

Rebuild: A Ground-Up Approach

Rebuilding, as the name suggests, involves completely rebuilding or rearchitecting the application using cloud-native technologies and services. This method is ideal for organizations looking to modernize their applications and take full advantage of the cloud. In doing so, they can fully employ cloud-native tools, deployment methods, and efficiencies, which typically results in the most optimized and resilient applications.

DevOps teams rely on containers as the go-to technology when building an application from scratch. Containers allow for microservices architectures, easy scaling, and seamless deployment.

Replace: Transition to Software as a Service (SaaS)

The replace migration strategy involves retiring the existing application and replacing it with a new cloud-native solution or a software as a service (SaaS) offering. Organizations seeking to reduce their maintenance burden and focus on innovation often opt to replace an application. Speed to market factors into the decision, as the SaaS vendor assumes responsibility for new feature development. Containers in this approach are less relevant to the user, though the SaaS provider may use them extensively.

DRAWBACK: Organizations face constraints imposed by the SaaS vendor or underlying cloud infrastructure, particularly concerning data management.
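The five strategies described above can be summarized as a small lookup structure. The sketch below encodes only what the preceding sections state about each strategy: how much change it requires and what role containers play. The class names, field names, and the three role labels are hypothetical and introduced for this example. It is a way to organize the comparison, not a decision tool.

```python
from dataclasses import dataclass
from enum import Enum


class ContainerRole(Enum):
    OPTIONAL = "can be used, but less transformative"
    CENTRAL = "core to the approach"
    PROVIDER_SIDE = "less relevant to the user; the SaaS provider may use them"


@dataclass(frozen=True)
class MigrationStrategy:
    name: str
    change_required: str
    container_role: ContainerRole


STRATEGIES = [
    MigrationStrategy("rehost", "minimal; moved as-is", ContainerRole.OPTIONAL),
    MigrationStrategy("refactor", "minor code and configuration changes",
                      ContainerRole.CENTRAL),
    MigrationStrategy("revise", "significant architectural changes",
                      ContainerRole.CENTRAL),
    MigrationStrategy("rebuild", "complete rearchitecture",
                      ContainerRole.CENTRAL),
    MigrationStrategy("replace", "retire and adopt a SaaS offering",
                      ContainerRole.PROVIDER_SIDE),
]


def container_centric() -> list:
    """Return the names of strategies in which containers play a central role."""
    return [s.name for s in STRATEGIES
            if s.container_role is ContainerRole.CENTRAL]


if __name__ == "__main__":
    print(container_centric())
```

Filtering on the container role returns refactor, revise, and rebuild, which matches the earlier observation that not all of the "5 Rs" involve container technologies.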

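Selecting the Cloud Migration Method Based on Needs

| Migration | Advantages | Disadvantages |
| --- | --- | --- |
| Rehost | Fast and cost-effective; minimal changes to the application | Ongoing maintenance costs; the application doesn't become cloud-native |
| Refactor | Only minor code and configuration changes; reuse of software components and development languages | Constraints carried over from the existing framework; underutilized cloud capabilities |
| Revise | Enhanced performance and scalability; use of cloud-native development tools and runtime efficiencies | Significant upfront development costs |
| Rebuild | Full use of cloud-native tools, deployment methods, and efficiencies; typically the most optimized and resilient applications | Requires completely rearchitecting the application |
| Replace | Reduced maintenance burden; faster speed to market, with the SaaS vendor responsible for new features | Constraints imposed by the SaaS vendor or underlying cloud infrastructure, particularly concerning data management |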

Table 2: Sample pros and cons for various migration methods

When Micro Means Fast

Cloud-native applications are designed from the ground up to take full advantage of cloud computing frameworks. Similar to refactoring, new container-native applications are often built using microservices architectures, which allow for independent deployment of application components.

The Triad of Modern Application Development

In today's high-speed digital landscape, agility and security aren’t just buzzwords — they’re imperatives. In the race to keep pace, microservices, CI/CD, and containers have gained prominence. Together, these three elements form the backbone of modern application development, offering a robust framework that balances speed, scalability, and security.

Container FAQs

What Does Cloud-Optimized Mean?

Cloud-optimized refers to software and systems designed to exploit the scalability, performance, and cost efficiencies of cloud computing environments. It involves architecting applications and infrastructure to leverage cloud-native features like auto-scaling, distributed storage, and microservices.

Cloud-optimized applications are built to maximize the benefits of cloud platforms, such as improved resource utilization, reduced operational costs, and enhanced flexibility. They typically employ a modular structure, allowing for easier updates and maintenance, and are designed to handle the dynamic nature of cloud resources.

What Is a Container Registry?

A container registry is a storage and distribution system for container images. It allows developers to push, pull, manage, and share container images. Registries can be public or private, with popular public registries including Docker Hub and Google Container Registry. They play a central role in containerized software development and deployment workflows, providing a centralized resource for managing the various versions of container images.

Secure and efficient management of container registries is vital in ensuring the integrity and availability of containerized applications.

What Is a Container Repository?

A container repository is a collection of related container images stored within a registry. Each repository is typically associated with a specific application, service, or component and contains different versions or variations of container images for that component.

Repositories allow developers to organize, version, and manage their container images efficiently — which is helpful, considering that organizations can easily have tens of thousands of images stored in their registries. Images are usually identified by unique tags that can represent versions, environments, or configurations.
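To show how a registry, a repository, and a tag fit together in a single image name, the following sketch splits an image reference into its parts. It is a simplified reading of the common `registry/repository:tag@digest` convention, not a full implementation of any registry's grammar. The `ImageRef` type, the `parse_image_ref` function, and the example hostname are hypothetical, and no defaults (such as an implied registry or a `latest` tag) are filled in.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: Optional[str]
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, tag, and digest."""
    digest = None
    if "@" in ref:
        # A digest, when present, follows the '@' separator.
        ref, digest = ref.split("@", 1)

    tag = None
    colon = ref.rfind(":")
    # A colon only marks a tag if it appears after the last '/';
    # otherwise it belongs to a registry port such as 'localhost:5000'.
    if colon > ref.rfind("/"):
        ref, tag = ref[:colon], ref[colon + 1:]

    registry = None
    first, sep, rest = ref.partition("/")
    # Treat the first path component as a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest

    return ImageRef(registry, ref, tag, digest)


if __name__ == "__main__":
    print(parse_image_ref("registry.example.com:5000/team/web:1.4.2"))
```

Here `registry.example.com:5000` is the registry, `team/web` is the repository, and `1.4.2` is the tag that identifies one image version within that repository.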

Because registries are central to the way a containerized environment operates, it’s essential to secure them.