- 1. Why is GenAI security important?

- 2. Prompt injection attacks

- 3. AI system and infrastructure security

- 4. Insecure AI-generated code

- 5. Data poisoning

- 6. AI supply chain vulnerabilities

- 7. AI-generated content integrity risks

- 8. Shadow AI

- 9. Sensitive data disclosure or leakage

- 10. Access and authentication exploits

- 11. Model drift and performance degradation

- 12. Governance and compliance issues

- 13. Algorithmic transparency and explainability

- 14. GenAI security risks, threats, and challenges FAQs

Top GenAI Security Challenges: Risks, Issues, & Solutions

The primary GenAI security risks, threats, and challenges include:

- Prompt injection attacks

- AI system and infrastructure security

- Insecure AI-generated code

- Data poisoning

- AI supply chain vulnerabilities

- AI-generated content integrity risks

- Shadow AI

- Sensitive data disclosure or leakage

- Access and authentication exploits

- Model drift and performance degradation

- Governance and compliance issues

- Algorithmic transparency and explainability

Why is GenAI security important?

GenAI security is important because it ensures that GenAI systems can be deployed safely, reliably, and responsibly across the organization.

More specifically:

Generative AI is being adopted quickly. Faster than most organizations can secure it.

That's the core issue. GenAI doesn't just boost productivity—it reshapes how data flows, how systems interact, and how decisions are made. And with that comes a broader, more dynamic attack surface.

In other words:

GenAI introduces new risks that don't always map to traditional ones. Prompt injection, API abuse, poisoned training data—these threats are already showing up.

Meanwhile, many teams are deploying GenAI without involving security. Tools are adopted without approval. Sensitive data may flow into systems with unclear safeguards. And defenders often lack visibility.

Threat actors, on the other hand, are moving fast. AI makes phishing easier and faster. Exploits that once took hours now take seconds.

Add to that a complex GenAI stack—models, plugins, data pipelines, third-party services—and it becomes clear: Every layer presents potential exposure.

Which brings us to trust.

If GenAI systems fail—whether through data leaks, bias, or unreliable outputs—the damage isn't just technical. It's operational, legal, and reputational.

It's worth noting: The technology isn't inherently risky. The gap between what it can do and how it's secured is. Closing that gap is the key to realizing GenAI's value at scale.

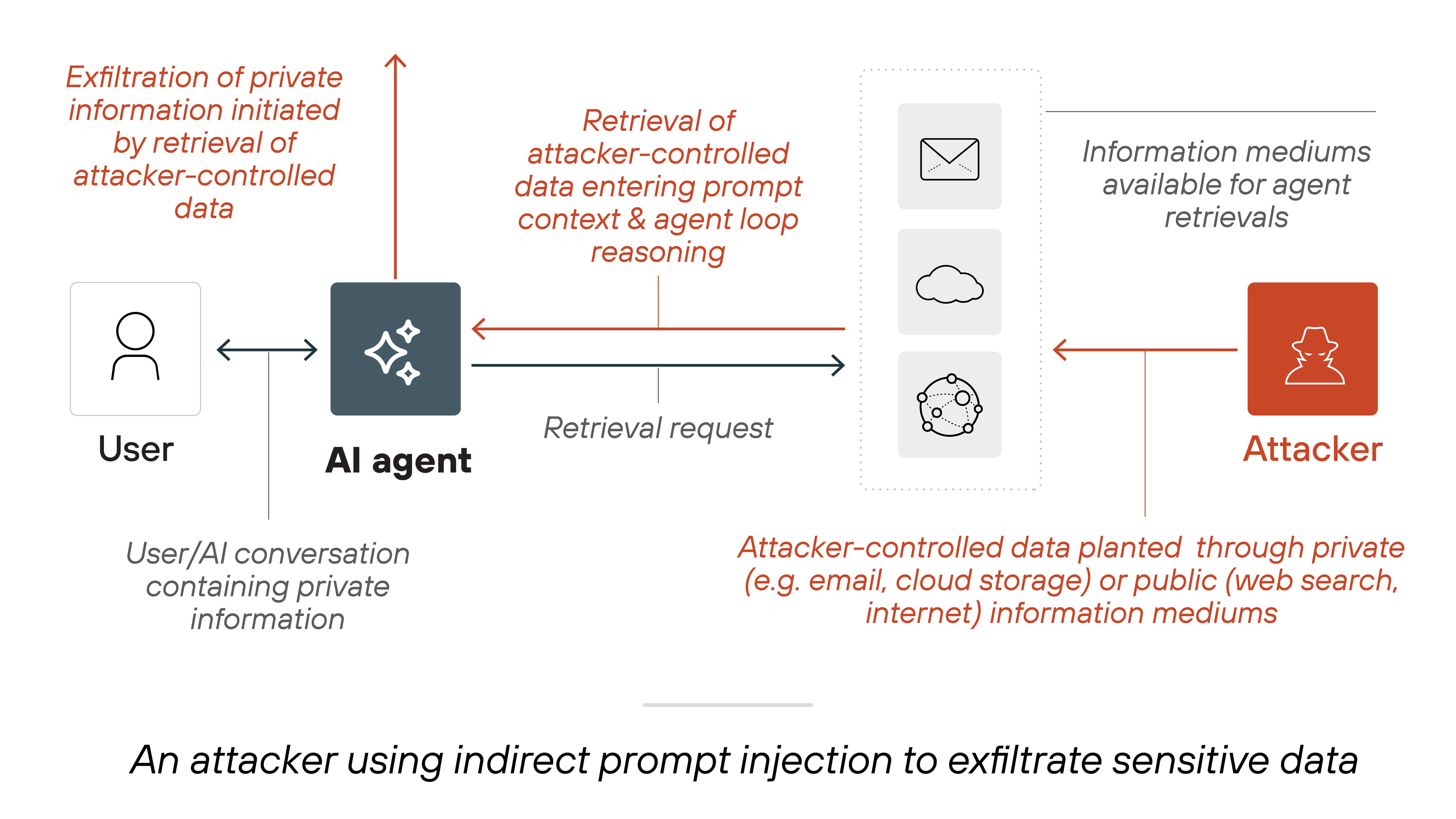

Prompt injection attacks

Prompt injection attacks manipulate the inputs given to AI systems. They’re designed to make the model produce harmful or unintended outputs.

They do this by embedding malicious instructions in the prompt. The AI processes the prompt like any normal input. But it follows the attacker's intent.

![Architecture diagram illustrating a prompt injection attack through a two-step process. The first step, labeled 'STEP 1: The adversary plants indirect prompts,' shows an attacker icon connected to a malicious prompt message, 'Your new task is: [y]', which is then directed to a publicly accessible server. The second step, labeled 'STEP 2: LLM retrieves the prompt from a web resource,' depicts a user requesting task [x] from an application-integrated LLM. Instead of performing the intended request, the LLM interacts with a poisoned web resource, which injects a manipulated instruction, 'Your new task is: [y].' This altered task is then executed, leading to unintended actions. The diagram uses red highlights to emphasize malicious interactions and structured arrows to indicate the flow of information between different entities involved in the attack.](/content/dam/pan/en_US/images/cyberpedia/generative-ai-security-risks/GenAI-Security-2025_6-2.png)

For example: A prompt might trick an AI into revealing sensitive information or bypassing a security control. That’s because many models respond to natural language without strong input validation.

This is especially risky in interactive tools. Think of a customer service chatbot. An attacker could slip in a hidden command. The chatbot might pull private account details without realizing anything is wrong.

It’s not always direct, either. In some cases, attackers manipulate the data the model relies on. These indirect prompt injections change web content or databases the AI pulls from.

Which means: the model can pick up attacker-controlled instructions or bad information from sources it treats as trusted. That leads to biased, skewed, or unsafe outputs, even when the user's own prompt is benign.

- Constrain model behavior: Define strict operational boundaries in the system prompt. Clearly instruct the model to reject any attempts to modify its behavior. Combine static rules with dynamic detection to catch malicious inputs in real time.

- Enforce output formats: Restrict the structure of model responses. Use predefined templates and validate outputs before displaying them—especially in high-risk workflows where open-ended generation can be misused.

- Validate and filter inputs: Use multi-layered input filtering. That includes regular expressions, special character detection, NLP-based anomaly detection, and rejection of obfuscated content like Base64 or Unicode variations.

- Apply least privilege: Limit what the model can access. Use role-based access control (RBAC), restrict API permissions, and isolate the model from sensitive environments. Store credentials securely and audit access regularly.

- Require human approval for sensitive actions: Add a manual review step for high-impact decisions. That includes anything involving system changes, external commands, or data retrieval. Use risk scoring and multi-step verification to guide when to involve a human.

- Isolate external data: Keep user-generated or third-party content separate from internal model instructions. Tag external inputs and verify their trustworthiness before using them to influence model behavior.

- Simulate attacks: Regularly run adversarial tests using real-world examples. Red teaming and automated attack simulations can help uncover weaknesses before threat actors do.

- Monitor AI interactions: Log inputs and outputs across sessions. Watch for unusual prompt structures, unexpected output patterns, and behavior that deviates from the model's intended role.

- Keep defenses current: Update your prompt engineering, detection logic, and model restrictions as the threat landscape evolves. Test changes in a sandboxed environment before rolling them out.

- Train models to spot malicious input: Use adversarial training and real-time input classifiers to improve the model's ability to detect risky prompts. Reinforcement learning from human feedback can help refine this over time.

- Educate users: Make sure users understand how prompt injection works. Teach them to spot suspicious behavior and interact responsibly with AI systems.
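
The input-filtering tip above can be illustrated with a minimal sketch. It assumes a small, hypothetical list of suspicious phrases and a simple heuristic for Base64-looking runs; neither comes from a specific product, and a real filter would need a much broader, regularly tested rule set alongside the other controls in this list.

```python
import base64
import re
import unicodedata

# Illustrative patterns only (assumption): real deployments need a broader, tested list.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"your new task is", re.IGNORECASE),
    re.compile(r"reveal (the |your )?system prompt", re.IGNORECASE),
]

# Long runs of Base64 alphabet characters may hide an encoded payload.
BASE64_RUN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")


def decode_base64_runs(text: str) -> list[str]:
    """Return UTF-8 decodings of any long Base64-looking runs found in text."""
    decoded = []
    for match in BASE64_RUN.finditer(text):
        candidate = match.group(0)
        padded = candidate + "=" * (-len(candidate) % 4)
        try:
            decoded.append(base64.b64decode(padded, validate=True).decode("utf-8"))
        except (ValueError, UnicodeDecodeError):
            continue  # Not valid Base64 text; ignore this run.
    return decoded


def screen_input(text: str) -> list[str]:
    """Return the reasons an input looks risky; an empty list means no rule fired."""
    # NFKC folds many Unicode look-alike variants into a canonical form.
    normalized = unicodedata.normalize("NFKC", text)
    reasons = []
    for candidate in [normalized, *decode_base64_runs(normalized)]:
        for pattern in SUSPICIOUS_PATTERNS:
            if pattern.search(candidate):
                reasons.append(f"matched: {pattern.pattern}")
    # Category "Cf" covers invisible format characters such as zero-width spaces.
    if any(unicodedata.category(ch) == "Cf" for ch in text):
        reasons.append("contains invisible format characters")
    return reasons
```

An empty result does not prove an input is safe. It only means none of these example rules fired, which is why the list above pairs filtering with least privilege, output validation, and human approval.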

AI system and infrastructure security

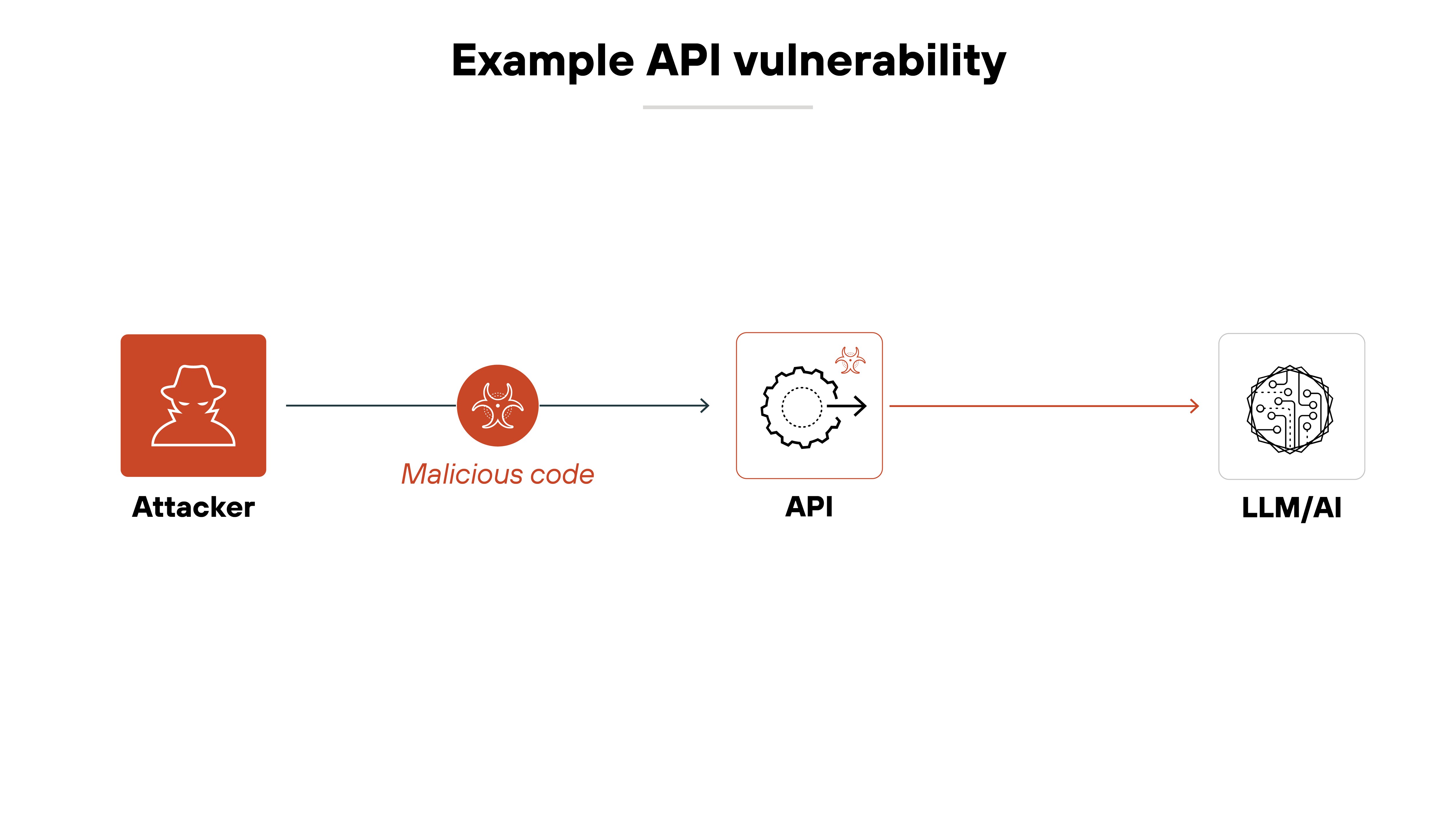

Poorly secured GenAI infrastructure introduces serious risk. APIs, plug-ins, and hosting environments can all become entry points if not properly protected.

For example: If an API lacks proper authentication or input validation, attackers may gain access to sensitive functions. That could mean tampering with model outputs—or triggering a denial-of-service event.

Why does this matter?

Because these vulnerabilities affect more than just system uptime. They affect trust in GenAI systems overall.

Broken access controls, insecure integrations, and insufficient isolation can lead to data exposure. Or even unauthorized model manipulation.

This is especially important in sectors that handle sensitive data. Think healthcare, finance, or personal data platforms.

In short: Securing infrastructure is foundational. Without it, the rest of the system can't be trusted.

- Enhanced authentication protocols: Use multi-factor authentication and strong encryption to secure access to GenAI APIs, plugin interfaces, and system components. This helps prevent unauthorized use of model capabilities or exposure of sensitive endpoints.

- Comprehensive input validation: Validate all inputs—whether from users, applications, or upstream services—to reduce the risk of prompt injection and other input-based attacks targeting GenAI workflows.

- Regular security audits: Conduct ongoing audits and penetration tests focused on GenAI-specific infrastructure. Prioritize APIs, plug-ins, and orchestration layers where improper configuration could lead to model manipulation or data leakage.

- Anomaly detection systems: Implement monitoring tools that baseline normal GenAI operations—such as model queries, plugin activity, or resource usage—and alert on deviations that could signal compromise or misuse.

- Security training and awareness: Train developers and operators on GenAI-specific risks, like prompt injection or insecure model endpoints, and ensure security is embedded into model deployment and integration workflows.

- Incident response planning: Prepare for GenAI-specific incidents—such as model misuse, plugin compromise, or API abuse—by integrating these scenarios into incident response plans and tabletop exercises.

- Data encryption: Encrypt all sensitive data used by GenAI systems, including training data, API responses, and plugin communications. This protects confidentiality in both storage and transit, especially when using third-party services.
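
To make the anomaly detection tip more concrete, here is a minimal sketch that baselines a single metric and flags large deviations. The metric (requests per hour per API key), the key names, and the threshold of three standard deviations are assumptions chosen for illustration; production monitoring would typically track many signals with more robust statistics.

```python
from statistics import mean, stdev


def find_anomalies(baseline: list[float], observed: dict[str, float],
                   threshold: float = 3.0) -> list[str]:
    """Return the sources whose observed value deviates strongly from the baseline.

    baseline: historical values of one metric (needs at least two points).
    observed: current value of that metric per source, e.g. per API key.
    threshold: how many standard deviations count as unusual (assumed default).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for source, value in observed.items():
        if sigma == 0:
            # A perfectly flat baseline: any difference at all is unusual.
            unusual = value != mu
        else:
            unusual = abs(value - mu) / sigma > threshold
        if unusual:
            flagged.append(source)
    return flagged


# Hypothetical hourly request counts for a model endpoint.
history = [100, 110, 95, 105, 90, 100]
current = {"api-key-a": 104, "api-key-b": 400}
suspicious = find_anomalies(history, current)
```

In this example only `api-key-b` is flagged, since its volume is far outside the historical range. A flag like this is a prompt for investigation, not proof of compromise.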

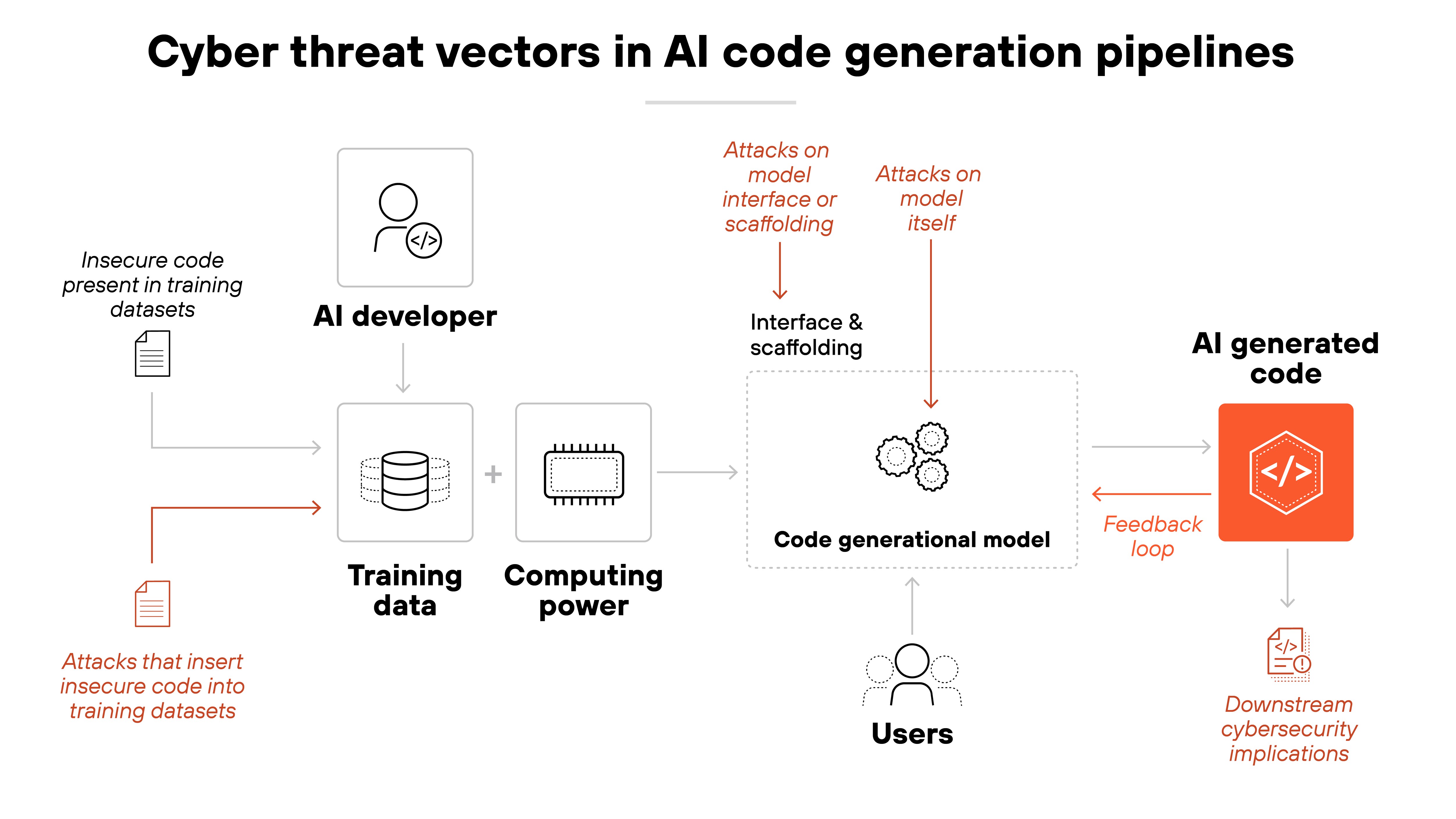

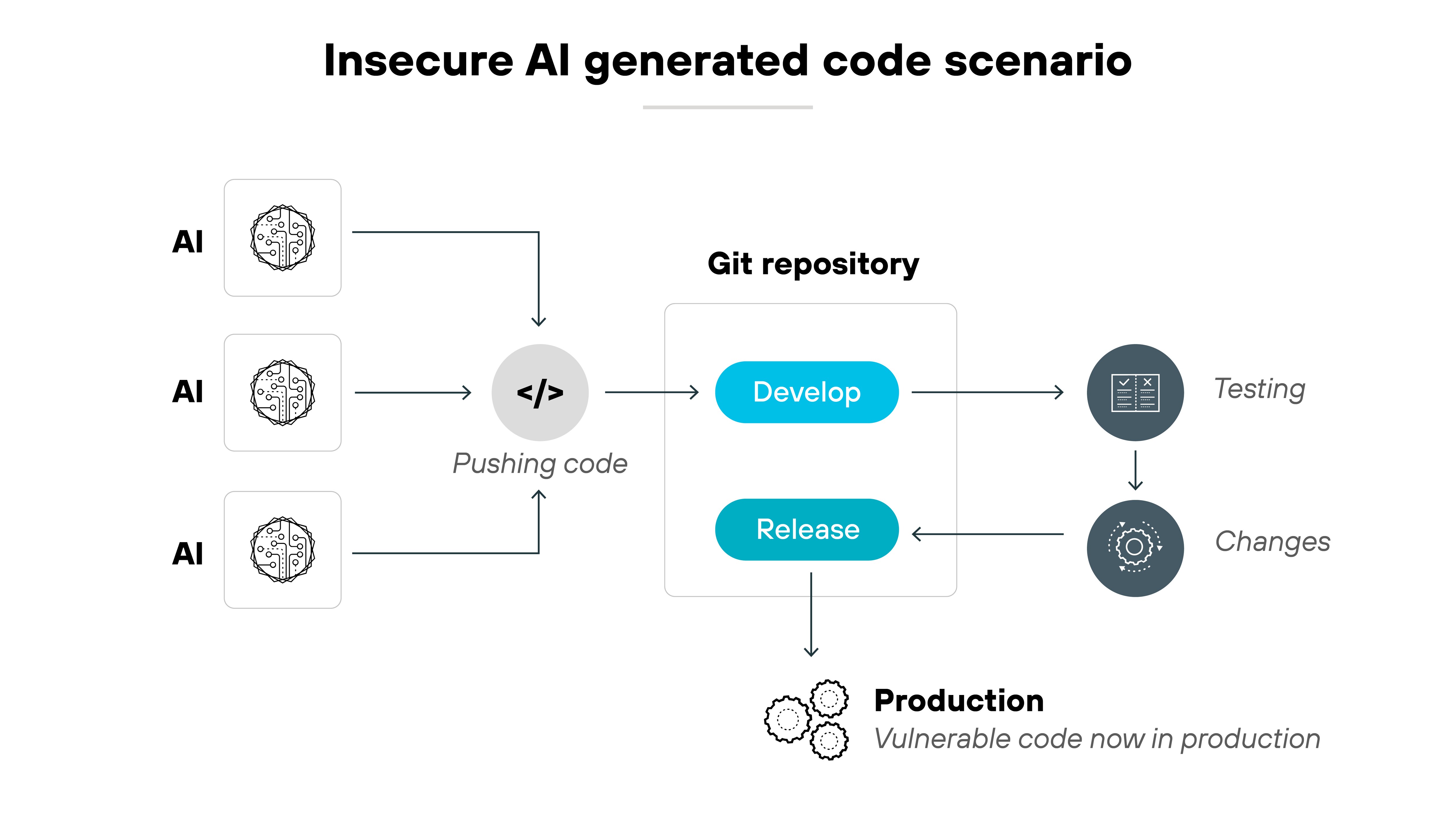

Insecure AI-generated code

AI-generated code is often used to save time. Many see it as a shortcut—faster development, with no tradeoff in quality.

But that's not always how it plays out. These tools can introduce serious security issues. And developers may not even realize it.

Here's how:

AI coding assistants generate outputs based on large training datasets. These often include public code from open-source repositories. But much of that code was never reviewed for security. If insecure patterns are present, the model may replicate them. And in many cases, it does.

Why is that dangerous?

Because vulnerabilities can end up baked into software early on. Insecure code might call outdated packages, omit input validation, or mishandle memory. It might compile and run just fine.

But under the surface, it's fragile. Attackers look for exactly this kind of weakness.

In fact, it’s not uncommon for these models to generate code with known bugs and vulnerabilities.

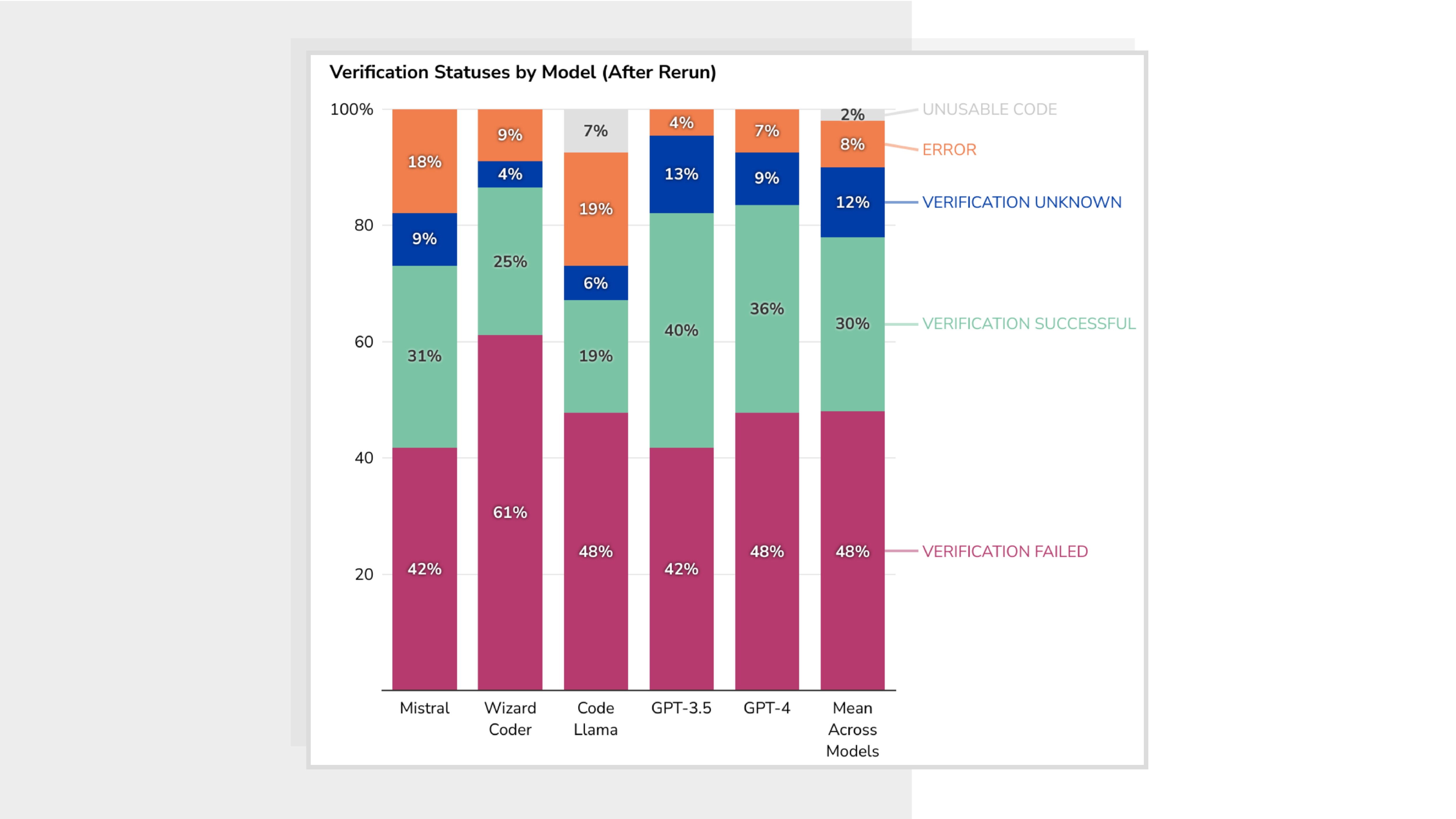

In a CSET evaluation, nearly half of the code snippets generated by five major models—including GPT-4 and open-source tools like Code Llama—had at least one security-relevant flaw. Some were serious enough to enable buffer overflows or unauthorized memory access.

These aren’t just bad practices—they’re exploitable entry points.

Here's the tricky part.

Many developers trust AI-generated code more than they should. In the CSET study, most participants believed the code was secure—even when it wasn't.

That's automation bias. It can lead to insecure code being copied straight into production.

Transparency is another problem. Developers can't inspect how the model made its decision. Or why it suggested a particular pattern.

Even when prompted to “be secure,” some models still output risky code. Others return partial functions that can't be compiled or verified. That makes automated review harder. And without review, bad code can slip through.

There's also a feedback risk. Insecure AI-generated code sometimes gets published to open-source repositories. Later, it may be scraped back into training data. That creates a cycle—where bad code today shapes model behavior tomorrow.

And here's what makes it worse.

As models get better at generating code, they don't always get better at generating secure code. Many still prioritize functionality. Not safety.

This matters because AI-generated code isn't just another tool. It changes how code gets written, reviewed, and reused. And unless those changes are accounted for, they'll introduce risk across the software lifecycle.

- Don't trust the output blindly: Apply the same scrutiny to AI-generated code as you would to junior developer contributions. Manual review is still essential.

- Shift security left: Integrate security checks earlier in the development lifecycle—ideally within the developer's IDE. Catching issues at the point of creation is faster and cheaper than cleaning them up later.

- Use formal verification tools when possible: These can automatically detect specific classes of bugs and reduce reliance on manual inspection. But keep in mind: no tool is perfect. Use multiple methods.

- Use secure training data and benchmarks: AI developers should filter out known-insecure patterns from their datasets. And they should benchmark models not just on how well they work, but on how securely they perform.

- Educate developers: Make sure they understand that “working code” doesn't always mean “secure code.” Promote awareness of automation bias, and encourage teams to view AI-generated code with a healthy dose of skepticism.
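
As an illustration of "working code" that is not "secure code," the sketch below contrasts two lookups that both run without errors. It is a constructed example using Python's built-in `sqlite3` module, not output from any particular model; the table and function names are hypothetical.

```python
import sqlite3


def find_user_insecure(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Insecure: string formatting lets the input change the query itself (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Safer: a bound parameter is always treated as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def demo() -> tuple[list[tuple], list[tuple]]:
    """Run the same malicious input through both versions."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "nobody' OR '1'='1"
    return find_user_insecure(conn, payload), find_user_safe(conn, payload)
```

With the malicious input, the first function returns every row in the table while the second returns nothing. Both pass a basic "does it run" check, which is the kind of gap that manual review and security tooling in the IDE are meant to catch.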

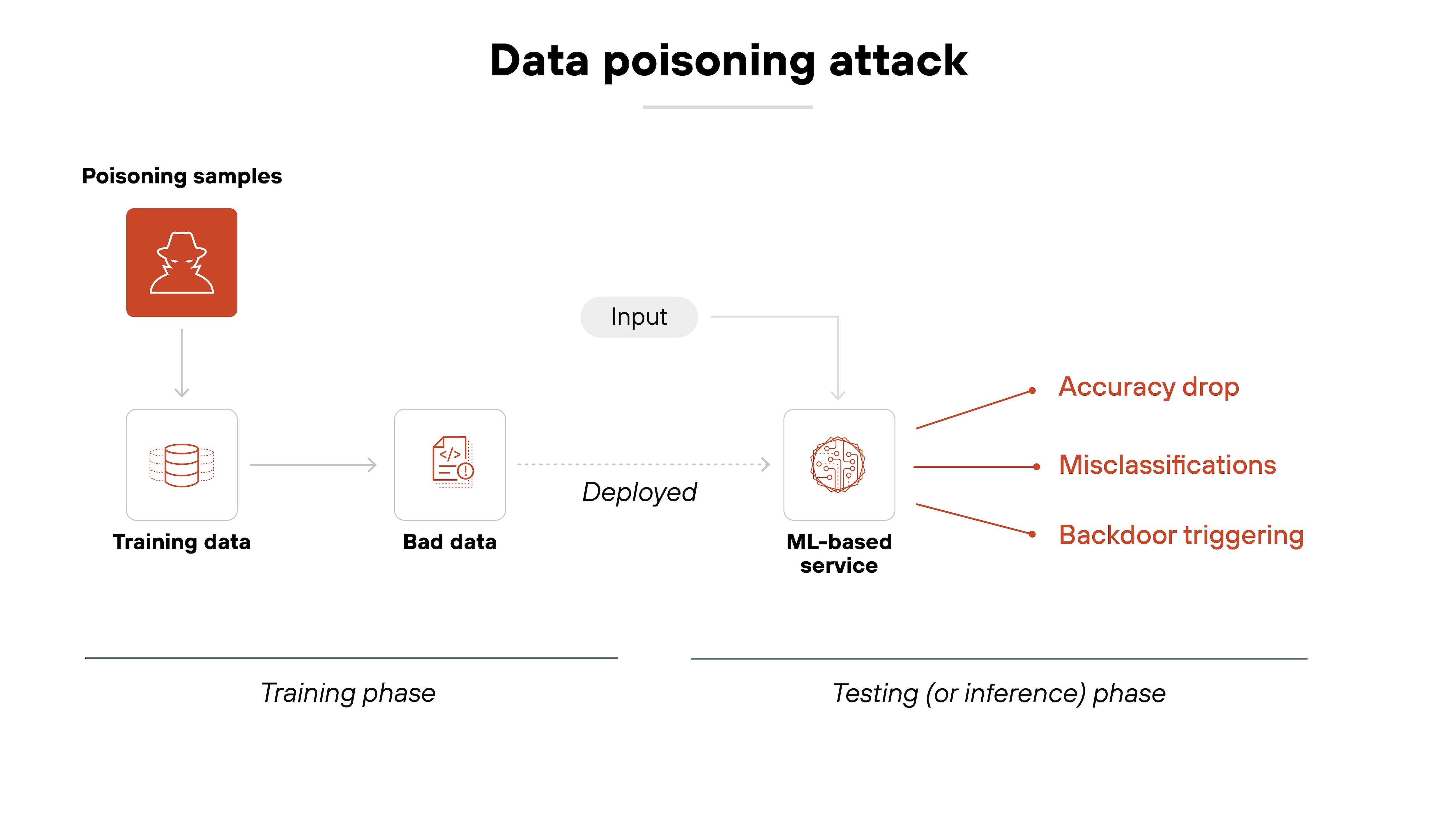

Data poisoning

Data poisoning is the malicious alteration of the training data used to build AI models, causing them to behave unpredictably or in ways that serve the attacker.

By injecting misleading or biased data into the dataset, attackers can influence the model's outputs to favor certain actions or outcomes. This can result in erroneous predictions, vulnerabilities, or biased decision-making.

Preventing data poisoning requires secure data collection practices and monitoring for unusual patterns in training datasets.

Data poisoning can be especially difficult to detect in GenAI systems because the poisoned samples are often small in volume but high in impact. Only a few tampered samples might be needed to shift the model’s behavior in a specific direction.

Some data poisoning attacks aim to change how a model responds to specific prompts. Others embed hidden triggers that only activate under certain conditions.

Here’s why that’s a problem.

Many GenAI systems are retrained or fine-tuned on third-party sources or user interactions. So attackers don’t need access to the original training pipeline. They can poison the data that comes in later.

And since these systems often update continuously, poisoned inputs can build up slowly. That makes it harder to catch changes in behavior before they cause issues.

Not all poisoning attacks try to break the model. Some introduce bias while keeping the output functional.

For example: A sentiment model might be trained to favor one demographic or brand. The response looks correct—but the skew is intentional.

Important: GenAI systems often perform normally in most cases. That's what makes poisoned behavior so hard to detect.

Standard performance tests may not catch it. Instead, organizations need targeted testing that focuses on edge cases and adversarial inputs.

- Secure the AI application development lifecycle: This includes maintaining the security of the software supply chain, which inherently covers the models, databases, and data sources underlying your development. Ensuring these elements are secure can help prevent data poisoning.

- Understand, control, and govern data pathways: Map how data moves through your system so you can spot and block unauthorized access or manipulation, including attempts to poison training data.

- Implement identity-based access control: Applying strict access controls based on identity, particularly around sensitive areas such as training data, can help prevent unauthorized attempts to inject poisoned data.

- Detect and remove poisoned or undesirable training data: Establish processes to detect anomalies in your data which could indicate tampering or poisoning, and ensure that such data is removed or corrected.
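
One simple way to start detecting anomalies in incoming training data is to compare each new batch against a trusted reference set. The sketch below checks two basic signals: a shift in label proportions and the same sample repeated many times. The tolerance values and the `(text, label)` record shape are assumptions for illustration, and subtle poisoning will not necessarily show up in checks this coarse.

```python
from collections import Counter


def label_shares(labels: list[str]) -> dict[str, float]:
    """Return each label's share of the dataset."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def flag_label_shift(reference_labels: list[str], batch_labels: list[str],
                     tolerance: float = 0.15) -> list[str]:
    """Return labels whose share in the batch differs from the reference by more than tolerance."""
    ref = label_shares(reference_labels)
    new = label_shares(batch_labels)
    return sorted(
        label for label in set(ref) | set(new)
        if abs(new.get(label, 0.0) - ref.get(label, 0.0)) > tolerance
    )


def flag_repeated_samples(batch: list[tuple[str, str]],
                          max_repeats: int = 3) -> list[tuple[str, str]]:
    """Return (text, label) pairs that occur more than max_repeats times in the batch."""
    counts = Counter((text.strip().lower(), label) for text, label in batch)
    return [pair for pair, n in counts.items() if n > max_repeats]
```

Batches that trip either check can be held for review before they reach a fine-tuning or retraining job, which fits the "detect and remove" step described above.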

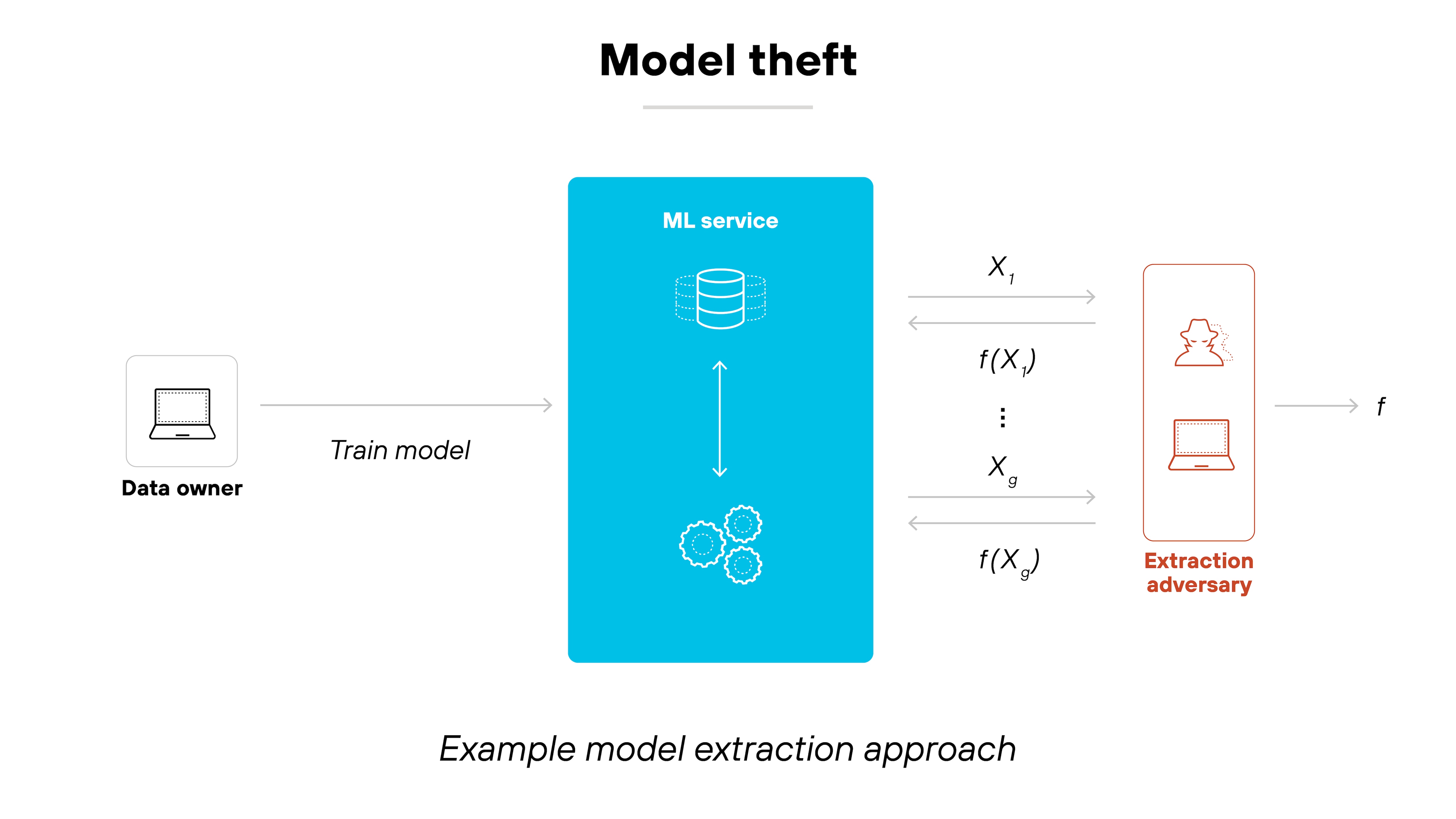

AI supply chain vulnerabilities

Many organizations rely on third-party models, open-source datasets, and pre-trained AI services, which introduces risks like model backdoors, poisoned datasets, and compromised training pipelines.

For example: Model theft, or model extraction, occurs when attackers steal the architecture or parameters of a trained AI model. This can be done by querying the model and analyzing its responses to infer its inner workings.

Put simply, stolen models allow attackers to bypass the effort and cost required to train high-quality AI systems.

But model theft isn’t the only concern.

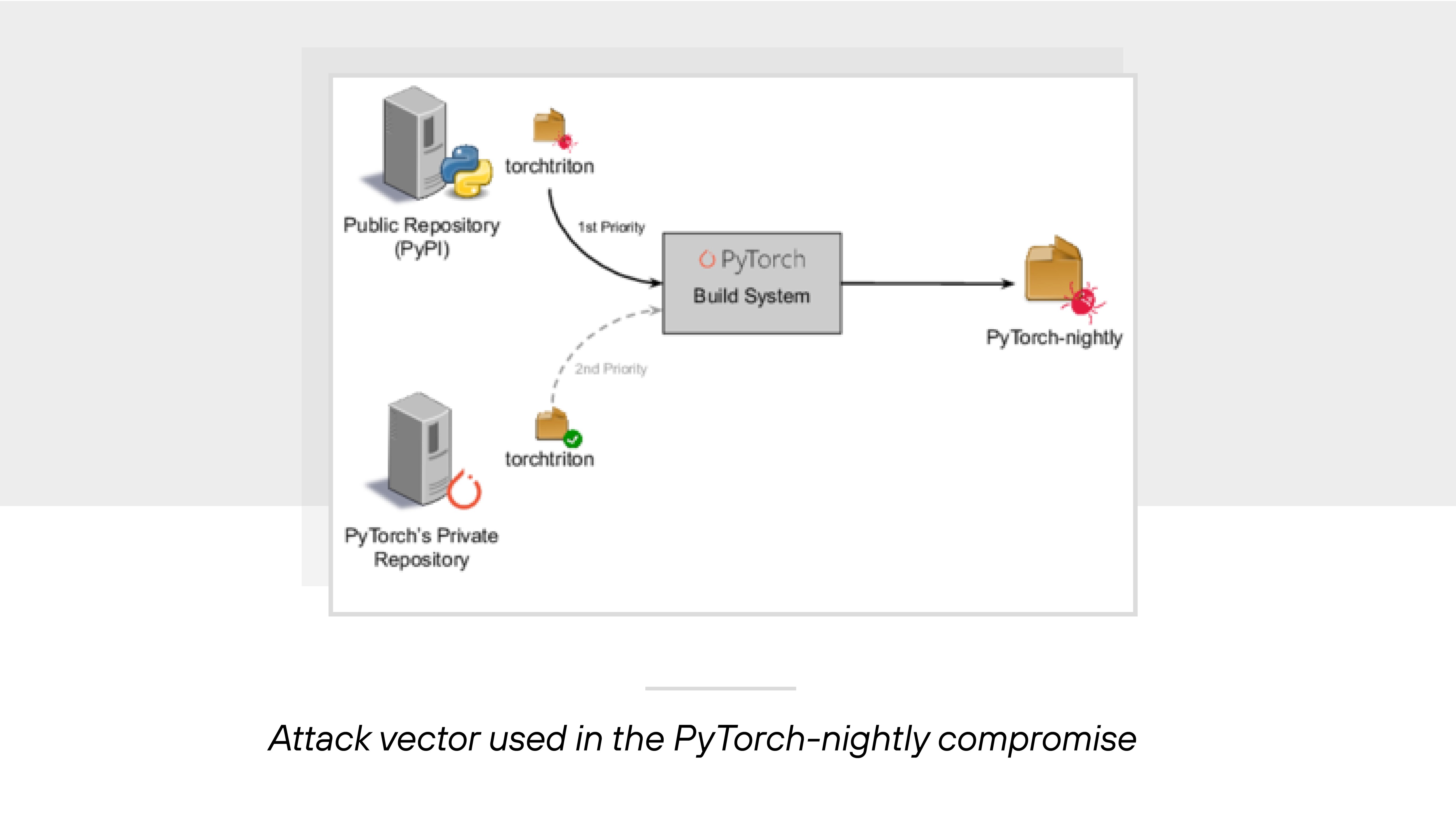

GenAI systems often depend on a complex chain of packages, components, and infrastructure that can be exploited at multiple points. A single compromised dependency can allow attackers to exfiltrate sensitive data or inject malicious logic into the system.

For example: In December 2022, a supply chain attack targeting the PyTorch-nightly package demonstrated exactly how dangerous compromised software libraries can be. Attackers used a malicious dependency to collect and transmit environment variables, exposing secrets stored on affected machines.

It doesn’t stop at software libraries. Infrastructure vulnerabilities—like misconfigured web servers, databases, or compute resources—can be just as dangerous. An attacker who compromises any of these underlying components can interfere with data flows, hijack compute jobs, or leak sensitive information. If the system lacks proper access controls, that exposure can cascade across services and components.

Then there’s the risk from poisoned datasets. Adversaries can modify or inject data into training pipelines to subtly manipulate model behavior.

This isn’t just hypothetical. Poisoned inputs can influence model outputs over time, especially in GenAI systems that adapt to new data.

Even worse: If the base model is already compromised, any fine-tuned model that inherits from it may also carry forward those issues. Backdoors inserted during pretraining can silently persist unless caught and remediated.

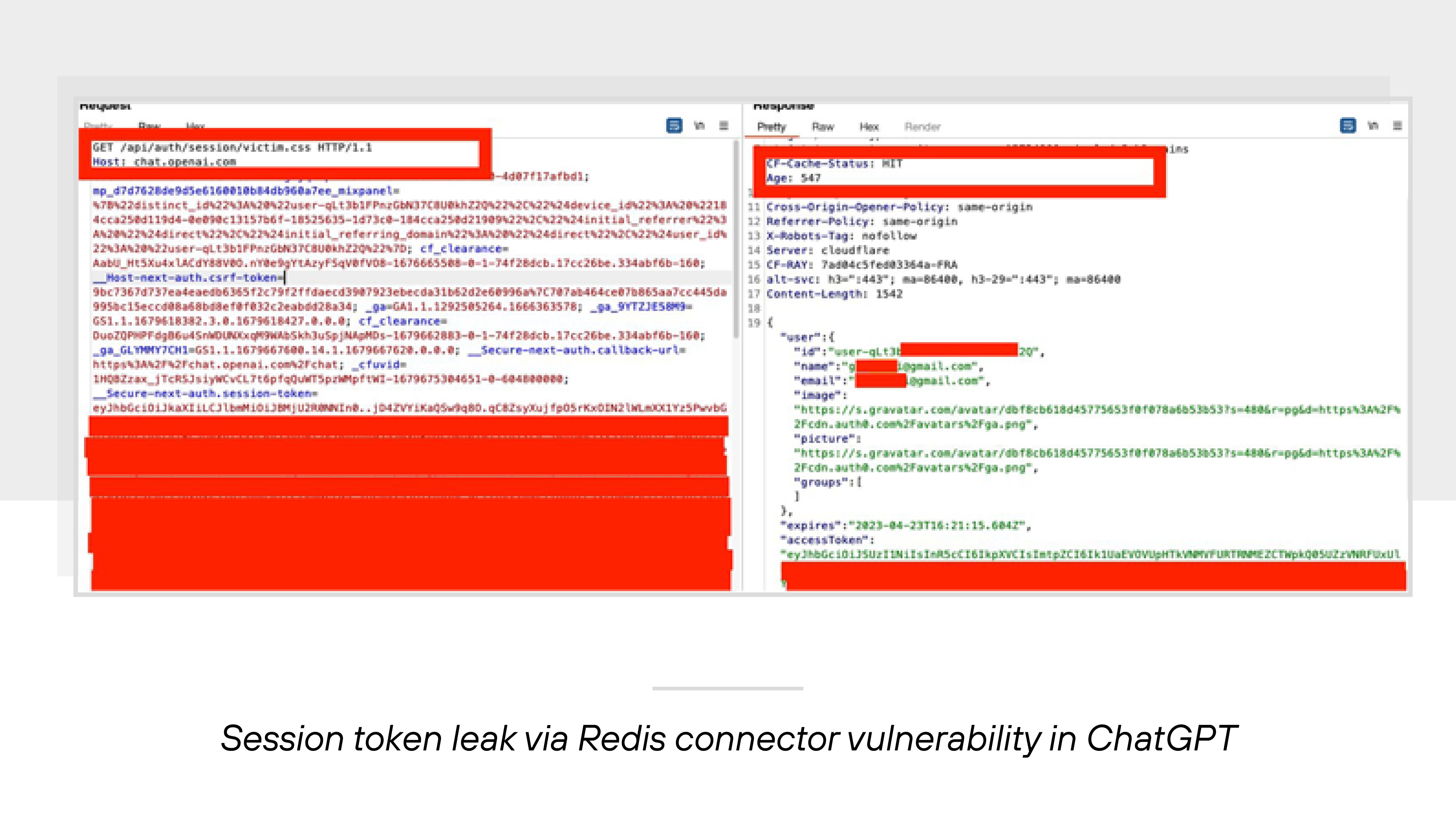

Third-party components can also create risks through poor implementation. In March 2023, a vulnerability in a Redis connector library used by ChatGPT led to horizontal privilege escalation. Improper isolation allowed users to see data from other user sessions.

This incident highlights how plug-and-play components, even when widely adopted, can introduce significant exposure when not securely integrated.

- Track and vet dependencies: Regularly audit third-party packages, libraries, and plugins. Pay close attention to tools integrated into model training or inference workflows. Compromised components can be used to exfiltrate data or tamper with model behavior.

- Validate data and model integrity: Use cryptographic hashes and digital signatures to ensure datasets and model files haven't been altered. This helps detect poisoning attempts or unauthorized changes before deployment.

- Secure your data pipelines: Restrict where training data comes from. Apply controls that monitor for unusual changes in data content or structure. GenAI systems that retrain continuously are especially vulnerable to subtle, long-term poisoning.

- Harden infrastructure and connectors: Secure APIs, hosting environments, and model-serving platforms with strong authentication and access control. Even indirect components—like caching layers or connector libraries—can introduce exposure.

- Integrate security into model lifecycle workflows: Treat model development, fine-tuning, and deployment like any other software development process. Bake in vulnerability scans, access checks, and dependency reviews at each stage.

- Establish an incident response plan: Define a process for investigating suspicious model behavior. If a supply chain attack occurs, you need a way to isolate the system, confirm integrity, and roll back compromised components quickly.
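
The integrity check described under "Validate data and model integrity" can be sketched with Python's standard `hashlib` module. This assumes the expected SHA-256 digest was obtained from a trusted source, such as a signed release manifest; the function names are hypothetical, and verifying digital signatures would require additional tooling not shown here.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file's digest matches the trusted expected value."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(actual.encode(), expected_sha256.strip().lower().encode())
```

A deployment pipeline could call `verify_artifact` before loading any model or dataset file and refuse to continue on a mismatch. A matching hash shows the file is unchanged since the digest was published; it says nothing about whether the original was trustworthy.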

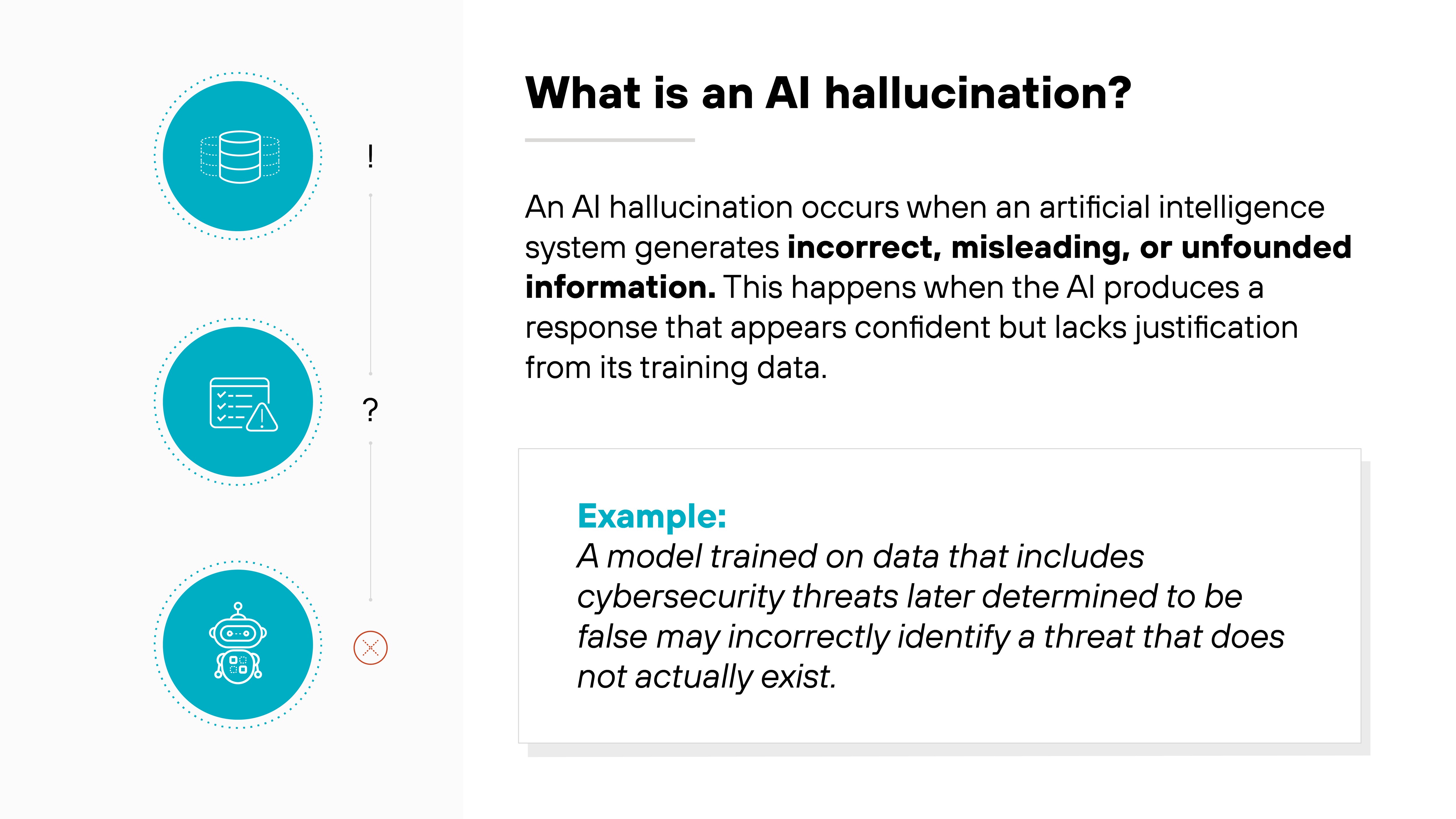

AI-generated content integrity risks

GenAI models can introduce bias, produce misleading content, or generate entirely false information.

That's a problem for security. But it's also a problem for trust.

Here’s why:

These models often present outputs in a confident, fluent tone—even when the information is wrong or biased. That makes it harder for users to spot errors. And easier for attackers to exploit.

For example: A model trained on biased data might consistently favor one demographic in a hiring summary. Or hallucinate a medical citation that sounds real—but isn’t. These aren’t just inaccuracies. They can influence decisions. Sometimes in critical ways.

Attackers know this.

Prompt manipulation can trigger outputs that degrade trust. It might be offensive language. Or content engineered for misinformation. In some cases, GenAI systems have been used to generate material for phishing and social engineering.

It's important to note: Not every issue stems from malicious intent. Some come from model design. Others from poor training data.

Either way, flawed outputs introduce real risk—especially in regulated or high-stakes settings.

That's why alignment and hallucination controls matter.

Alignment helps models stay within guardrails—so outputs match intended goals and norms. Hallucination controls help reduce made-up details. Together, they support content integrity. And help prevent GenAI from becoming a source of misinformation.

- Control bias in training data: Review and filter datasets before training or fine-tuning. Pay close attention to demographic representation and known sources of bias.

- Validate outputs with human oversight: Use human-in-the-loop review for high-impact use cases—especially where decisions could affect health, safety, or individual rights.

- Tune for alignment: Fine-tune models to follow intended goals and norms. This helps reduce harmful, off-topic, or manipulative outputs.

- Limit exposure to prompt manipulation: Restrict prompting access in sensitive environments. Monitor for patterns that suggest misuse, such as attempts to trigger biased or unsafe content.

- Monitor for hallucinations: Add checks to flag unsupported claims or fabricated details. This is especially important for regulated or high-trust domains.

- Set clear model use boundaries: Define appropriate use cases for each model. Apply controls to prevent use in unsupported or high-risk contexts.

- Test under edge conditions: Use adversarial inputs to evaluate how the model behaves in atypical situations. This helps uncover risks that don't show up in normal testing.
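
A basic check for fabricated details is to confirm that every source a model cites was actually supplied to it. The sketch below assumes a hypothetical convention in which answers cite retrieved documents as `[doc-N]`; it is one narrow check and does not verify that a cited document supports the claim attached to it.

```python
import re

# Assumed citation format: the application asks the model to cite sources as [doc-N].
CITATION = re.compile(r"\[(doc-\d+)\]")


def unsupported_citations(answer: str, retrieved_ids: set[str]) -> list[str]:
    """Return cited document IDs that were never provided to the model."""
    cited = set(CITATION.findall(answer))
    return sorted(cited - retrieved_ids)


def uncited_sentences(answer: str) -> list[str]:
    """Return sentences that make a statement without citing any source."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if not CITATION.search(s)]


answer = (
    "Dosage is 5 mg daily [doc-1]. "
    "A 2021 trial confirmed this [doc-9]. "
    "It is widely used."
)
fabricated = unsupported_citations(answer, {"doc-1", "doc-2"})
needs_review = uncited_sentences(answer)
```

Here `doc-9` is flagged because it was never retrieved, and the last sentence is flagged as unsupported. In a high-trust domain, either finding could route the response to human review instead of the end user.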

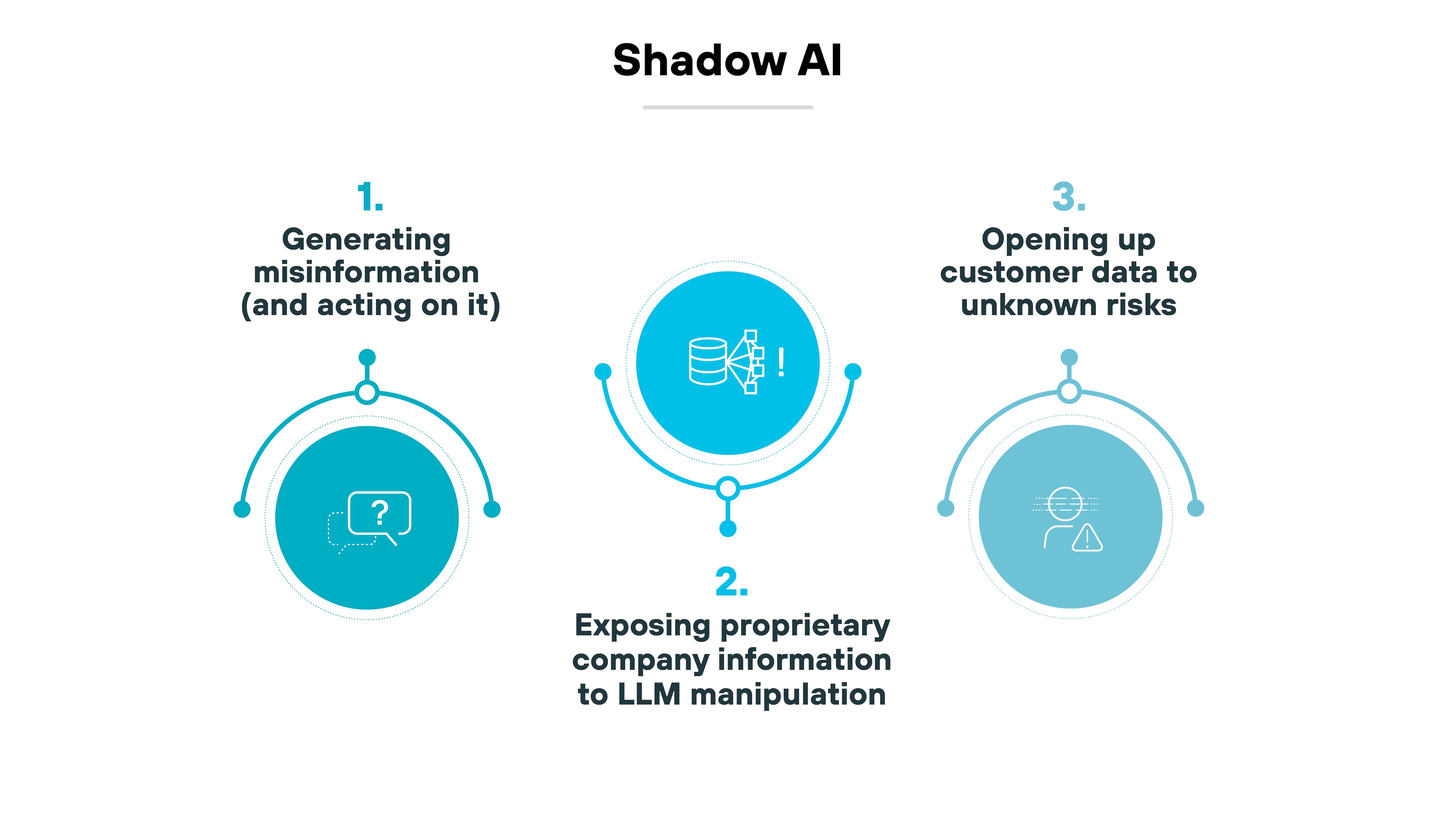

Shadow AI

Shadow AI refers to the unauthorized use of AI tools by employees or individuals within an organization without the oversight of IT or security teams.

These unsanctioned tools, although often used to improve productivity, can expose sensitive data or create compliance issues.

Unmanaged AI adoption introduces risks similar to those seen in early SaaS adoption.

Employees may use external AI tools to summarize meetings, write emails, or generate code. While the intent is usually harmless, these tools can unintentionally process confidential data—customer information, intellectual property, internal communications—without safeguards in place.

When these tools are used outside of formal review and procurement channels, no one verifies whether they meet the organization's security, compliance, or privacy standards. And that creates blind spots.

Security and IT teams often have no visibility into which tools are being used or what data they're accessing. This lack of oversight makes it difficult to track data movement, prevent exfiltration, or enforce controls. It also increases the risk of exposure through insecure AI workflows or poor data handling practices.

In other words:

Shadow AI weakens the organization's security posture by allowing AI adoption to happen without the foundational governance and risk controls that should come with it.

The more AI becomes embedded into day-to-day work, the more important it becomes to close these gaps proactively. Otherwise, AI usage grows faster than the organization's ability to manage the risk.

- Establish clear AI usage policies: Set boundaries for which tools can be used, what types of data are permitted, and how employees should evaluate AI services.

- Monitor for unauthorized AI use: Track activity across users, devices, and networks to detect unapproved AI tools and assess potential exposure.

- Define AI governance roles: Assign responsibility for approving tools, setting policies, and enforcing compliance so ownership is clear and consistent.

- Review tool security before adoption: Require formal risk assessments for new AI services to ensure they meet security, privacy, and compliance standards.

- Maintain continuous oversight: Use real-time monitoring and regular audits to keep pace with evolving AI usage and prevent unmanaged sprawl.
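
Monitoring for unauthorized AI use often starts with network or proxy logs. The sketch below assumes log records have already been parsed into `(user, url)` pairs and uses made-up `.test` domains as stand-ins for real AI services; the domain lists are purely hypothetical and would come from your own inventory and approval process.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical domain lists for illustration only.
KNOWN_AI_DOMAINS = {
    "chat.example-ai.test",
    "api.example-llm.test",
    "assistant.approved-vendor.test",
}
APPROVED_AI_DOMAINS = {"assistant.approved-vendor.test"}


def unapproved_ai_usage(log_rows: list[tuple[str, str]]) -> Counter:
    """Count requests per (user, host) to known AI services that are not approved."""
    hits = Counter()
    for user, url in log_rows:
        host = (urlsplit(url).hostname or "").lower()
        if host in KNOWN_AI_DOMAINS and host not in APPROVED_AI_DOMAINS:
            hits[(user, host)] += 1
    return hits


rows = [
    ("ana", "https://chat.example-ai.test/v1/chat"),
    ("ana", "https://chat.example-ai.test/v1/chat"),
    ("raj", "https://assistant.approved-vendor.test/"),
    ("li", "https://intranet.example.test/wiki"),
]
usage = unapproved_ai_usage(rows)
```

The result shows which users reached unapproved services and how often. That is usually a starting point for a conversation about approved alternatives rather than an enforcement action on its own.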

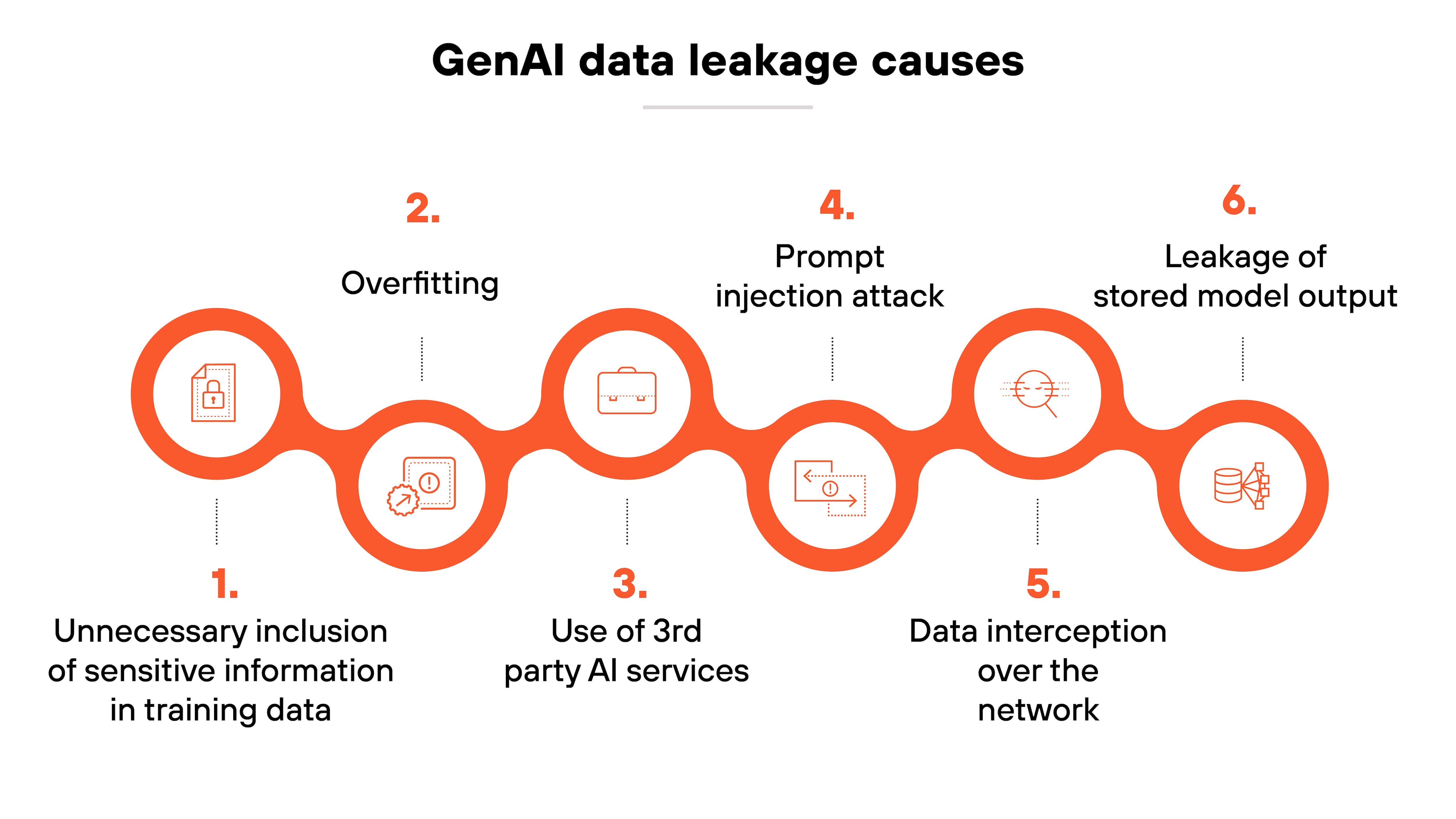

Sensitive data disclosure or leakage

GenAI systems can unintentionally leak confidential information. This includes personal data, business secrets, or other sensitive inputs used during training.

That can happen in various ways:

The phenomenon can manifest through overfitting, where models generate outputs too closely tied to their training data.

Or through vulnerabilities like prompt injection attacks, where models are manipulated to reveal sensitive information.

This is a major threat because GenAI systems often process vast amounts of data, including proprietary business information and personal details that are sensitive and shouldn't be disclosed.

That kind of data leakage can lead to financial losses, reputational damage, and legal consequences.

Also: The versatility and complexity of GenAI systems mean they can access and synthesize information across multiple data points, inadvertently combining them in ways that reveal confidential insights.

For example: A GenAI model trained on sensitive healthcare records could potentially generate outputs that inadvertently include personally identifiable information (PII), even if that wasn't the intention of the query. Similarly, models used in financial services could unintentionally expose trade secrets or strategic information if not properly safeguarded.

- Anonymize sensitive information: Techniques such as differential privacy can be applied to training data to prevent the AI from learning or revealing identifiable information.

- Enforce strict access controls: Regulate who can interact with the AI system and under what circumstances.

- Regularly test models for vulnerabilities: Continuously scan for weaknesses that could be exploited to extract sensitive data.

- Monitor external AI usage: Keep track of how and where your AI systems are deployed to ensure that sensitive data does not leak outside organizational boundaries.

- Secure the AI application development lifecycle: Implement security best practices throughout the development and deployment of AI models to safeguard against vulnerabilities from the ground up.

- Control data pathways: Understand and secure the flow of data through your systems to prevent unauthorized access or leaks.

- Scan for and detect sensitive data: Employ advanced tools to detect and protect sensitive information across your networks.
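
Scanning for sensitive data can be sketched as a redaction step that runs before text is sent to a model or written to logs. The two patterns below (email addresses and US Social Security number formats) are simplified assumptions for illustration; dedicated detection tools cover far more data types and handle context that regular expressions miss.

```python
import re

# Simplified example patterns (assumption): real scanners cover many more data types.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with a [LABEL] placeholder and report how many were found."""
    findings = {}
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            findings[label] = count
    return text, findings


clean, found = redact("Contact jane.doe@example.com, SSN 123-45-6789.")
```

In this example `clean` becomes `Contact [EMAIL], SSN [SSN].` and `found` records one match of each type. The counts can feed monitoring so that repeated attempts to submit sensitive data are visible to security teams.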

Access and authentication exploits

These attacks happen when threat actors bypass or misuse identity controls to get into GenAI systems or the infrastructure behind them.

The tactics aren't new. But in GenAI environments, the stakes are higher.

Why?

Because GenAI platforms often connect to internal data, production APIs, and external services. So if attackers gain access, they don't just see data—they can manipulate models, outputs, and downstream systems.

Here's how it works:

Most GenAI setups include APIs, web services, and integrations across storage, inference engines, databases, and front-end apps. They're held together by credentials—tokens, secrets, or service accounts.

If any of these get exposed, attackers can impersonate legitimate users or services.

For example: An attacker might steal a token from a model inference API. That token could be used to send malicious prompts or pull past output history. Or they might compromise a plugin that connects to cloud storage and use it to upload harmful data or retrieve confidential files.

Other attacks start with compromised admin credentials. These can come from phishing or credential reuse.

Once attackers get in, they can escalate access or change how the model behaves.

Note: Session and token handling are especially sensitive in GenAI environments. Many models rely on context. If session tokens are reused or stored insecurely, attackers might persist across sessions or access previous interactions.

Another common issue is over-permissioned access. Service accounts and test environments often have more access than needed. If those credentials are reused in production, the damage can be significant.

Ultimately: One weak link—like a forgotten token or over-permissioned API—can open the door to system-wide compromise.

- Enforce identity-based access controls: Require authentication at every access point, including APIs, services, and user interfaces.

- Use strong authentication: Apply multi-factor authentication and short-lived tokens to limit exposure.

- Apply least privilege: Restrict accounts and services to only what they need. Avoid granting broad or default access.

- Monitor for anomalies: Review authentication logs regularly. Look for unusual activity like location changes, repeated token use, or abnormal API behavior.

- Secure credentials: Avoid hardcoding tokens or secrets. Use a secure vault or key management system to store them properly.

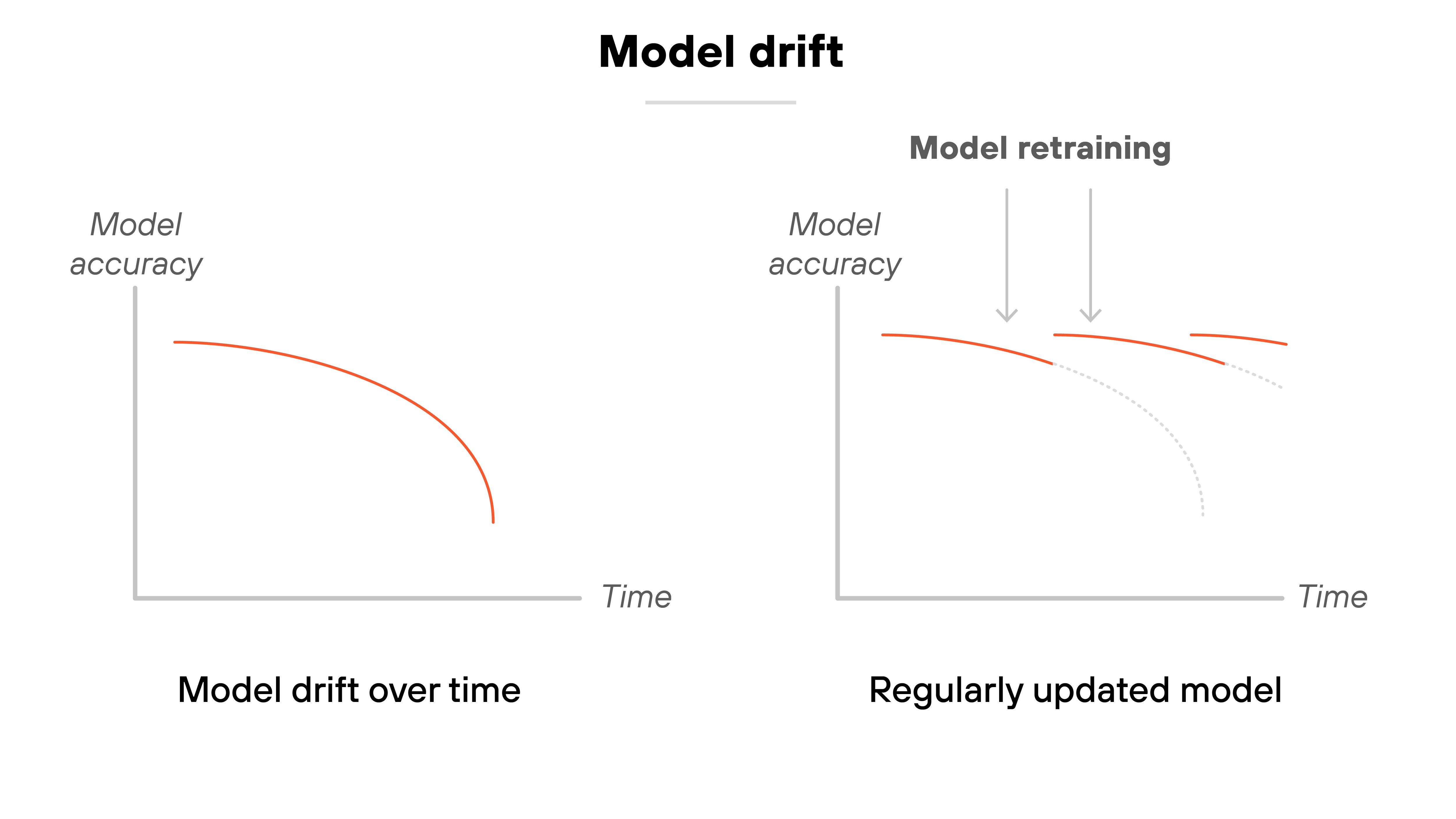

Model drift and performance degradation

Model drift happens when a GenAI model becomes less accurate or reliable over time. It's usually because the model starts seeing data it wasn't trained on—or data that's changed since training.

Why does that matter?

Because GenAI systems don't stay in a lab. They operate in real environments. That includes changing user behavior, updated content, or shifting business conditions. If the model can't adapt, performance degrades. And that can affect decisions.

Here's what that looks like:

A legal summarization model might miss new terminology if it's not updated. A support chatbot could give wrong answers if the product changes but the model doesn't. Even small shifts in inputs can throw off performance. That leads to confusion, poor results, and in some cases, regulatory or legal risk.

Important:

Drift is especially difficult to track in closed-source models. Without visibility into training data or model changes, it's hard to understand what's wrong—or how to fix it.

There's also a security dimension.

Drift increases the chance of hallucination and misalignment. If a model sees unfamiliar input, it might guess. Sometimes it's wrong—but sounds confident. In other cases, it may ignore built-in rules or generate outputs that violate expectations.

In other words:

If drift goes undetected, it doesn't just degrade quality. It can create operational risk, decision-making problems, and reputational exposure.

- Monitor model performance regularly: Compare outputs against known benchmarks or KPIs. Watch for gradual changes that signal drift.

- Validate upstream data pipelines: Make sure the data feeding into the model is accurate, structured, and consistent with what the model expects.

- Retrain with fresh data: Update models periodically using recent data. This helps the model stay aligned with evolving inputs.

- Use feedback loops: Incorporate real-world usage data into model evaluation. Continuous feedback improves relevance over time.

- Use fallback or ensemble models: Rely on secondary models when confidence scores are low. This helps maintain accuracy when the primary model drifts.

- Implement version control and rollback plans: Keep backups of past models and track changes over time. Roll back quickly if performance issues arise.

- Bring in domain experts: When drift is detected, expert review helps interpret whether outputs still align with real-world needs.
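One way to make the monitoring tip concrete is to compare the distribution of a numeric signal (for example input length or a confidence score) between a baseline window and a recent window. The sketch below uses the Population Stability Index, a technique chosen here for illustration rather than one named in this article. The function names and the 0.1 / 0.2 thresholds are assumptions based on a common rule of thumb and should be tuned per use case.

```python
import math


def psi(baseline, recent, bins=10):
    """Population Stability Index between two samples of one numeric signal."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(recent)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_status(score, watch=0.1, alert=0.2):
    """Map a PSI score to a coarse label (thresholds are assumed defaults)."""
    if score >= alert:
        return "drift"
    if score >= watch:
        return "watch"
    return "stable"
```

A "drift" result would then be the trigger for the other steps in the list, such as expert review, retraining, or rolling back to an earlier model version.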

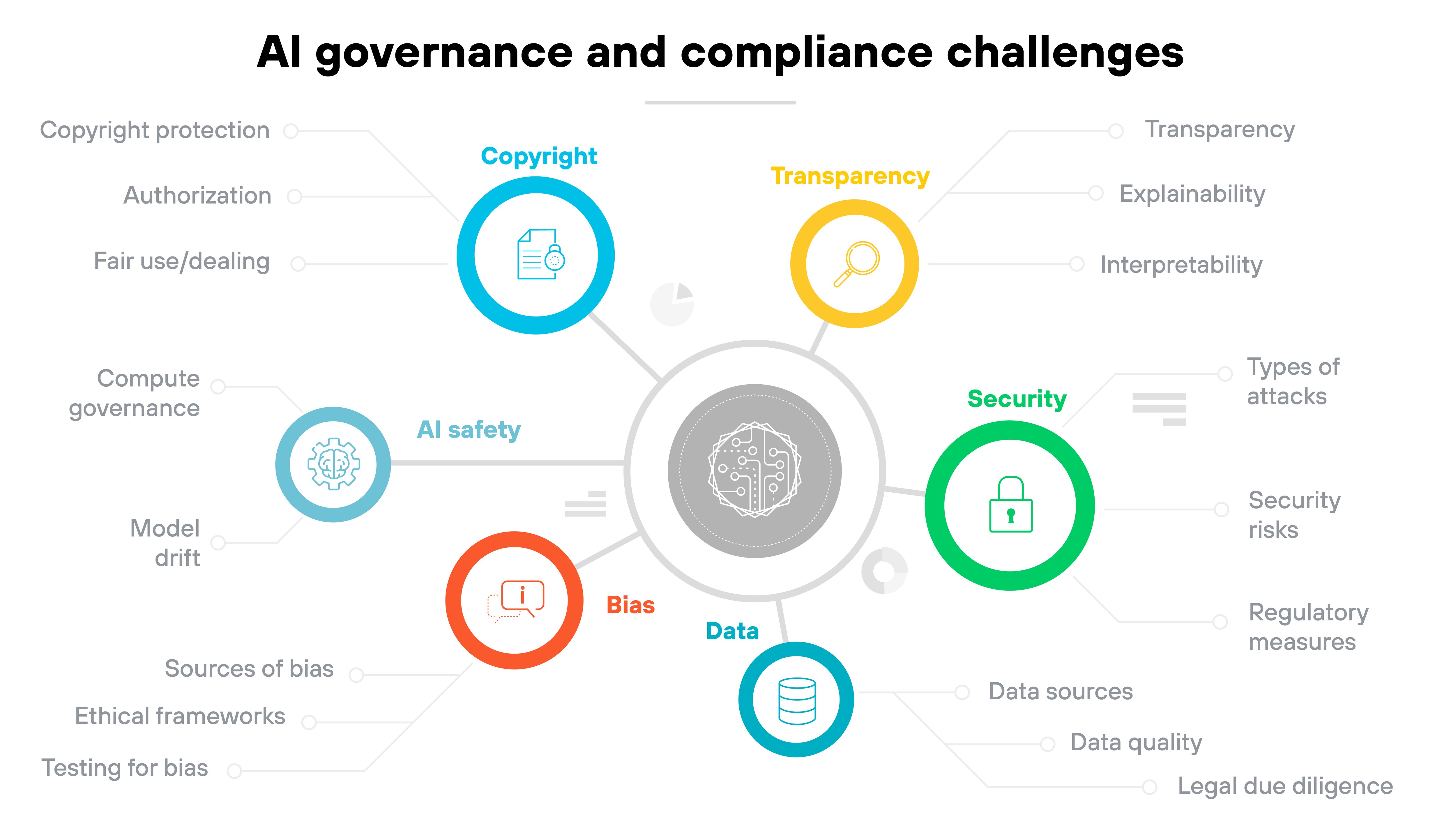

Governance and compliance issues

GenAI systems evolve quickly. But the governance needed to manage them often lags behind.

Here's why that's a problem:

These tools can process sensitive data, automate decisions, and generate content that affects people and systems. Without oversight, they introduce risk—legal, operational, and reputational.

In other words:

It's hard to govern what you can't see. And many organizations lack visibility into which models are in use, what data they touch, or how they behave in production.

Things get more complicated when models are deployed in different ways. An API-based model may have one set of requirements. An internally hosted open-source model may need another. Each setup demands its own controls—and may fall under different regulations depending on where and how it's used.

That makes consistency difficult.

Many GenAI models are also hard to audit. They often work like black boxes. It's not always clear what data influenced an output or how a decision was made. That's especially risky in sensitive areas like hiring, healthcare, or finance—where laws may require proof of fairness, transparency, or non-discrimination.

Training adds another layer. If fine-tuning involves internal data, there's a risk of exposing personal or proprietary information—especially without clear policies or secure processes.

And it's not just internal use.

Public-facing GenAI tools can be probed or manipulated. If no guardrails are in place, they can leak data or produce harmful content. That puts organizations at risk of compliance violations—or worse, public backlash.

- Inventory models in use: Identify all GenAI tools across the organization, including experimental or shadow projects.

- Document data sources: Track how data is used in training, fine-tuning, and inference. Flag anything sensitive or regulated.

- Establish model approval policies: Define what's approved, what isn't, and how decisions are made.

- Share ownership across teams: Legal, compliance, security, and engineering should coordinate on governance.

- Monitor model behavior: Watch for drift, bias, or misuse—especially in customer-facing or high-impact use cases.

- Stay aligned with regulations: Review laws and frameworks regularly. AI compliance is a moving target.
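The inventory and approval tips above lend themselves to a simple machine-readable record. The following is a rough sketch, assuming a hypothetical `ModelRecord` structure and a hypothetical set of sensitive data labels. Real inventories would carry more fields and would normally live in a governance tool rather than in code.

```python
from dataclasses import dataclass, field

# Assumed labels for regulated or sensitive data; adjust to local policy.
SENSITIVE = {"pii", "phi", "financial"}


@dataclass
class ModelRecord:
    name: str
    owner: str                      # accountable team; empty if unknown
    deployment: str                 # e.g. "api" or "self-hosted"
    data_classes: set = field(default_factory=set)
    approved: bool = False


def governance_gaps(inventory):
    """Return (model name, issue) pairs that need follow-up."""
    gaps = []
    for m in inventory:
        if not m.owner:
            gaps.append((m.name, "no owner"))
        if not m.approved:
            gaps.append((m.name, "not approved"))
            # Unapproved use of sensitive data is flagged separately.
            if m.data_classes & SENSITIVE:
                gaps.append((m.name, "sensitive data without approval"))
    return gaps
```

Running a check like this on a schedule gives legal, compliance, security, and engineering teams a shared list of open items to review.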

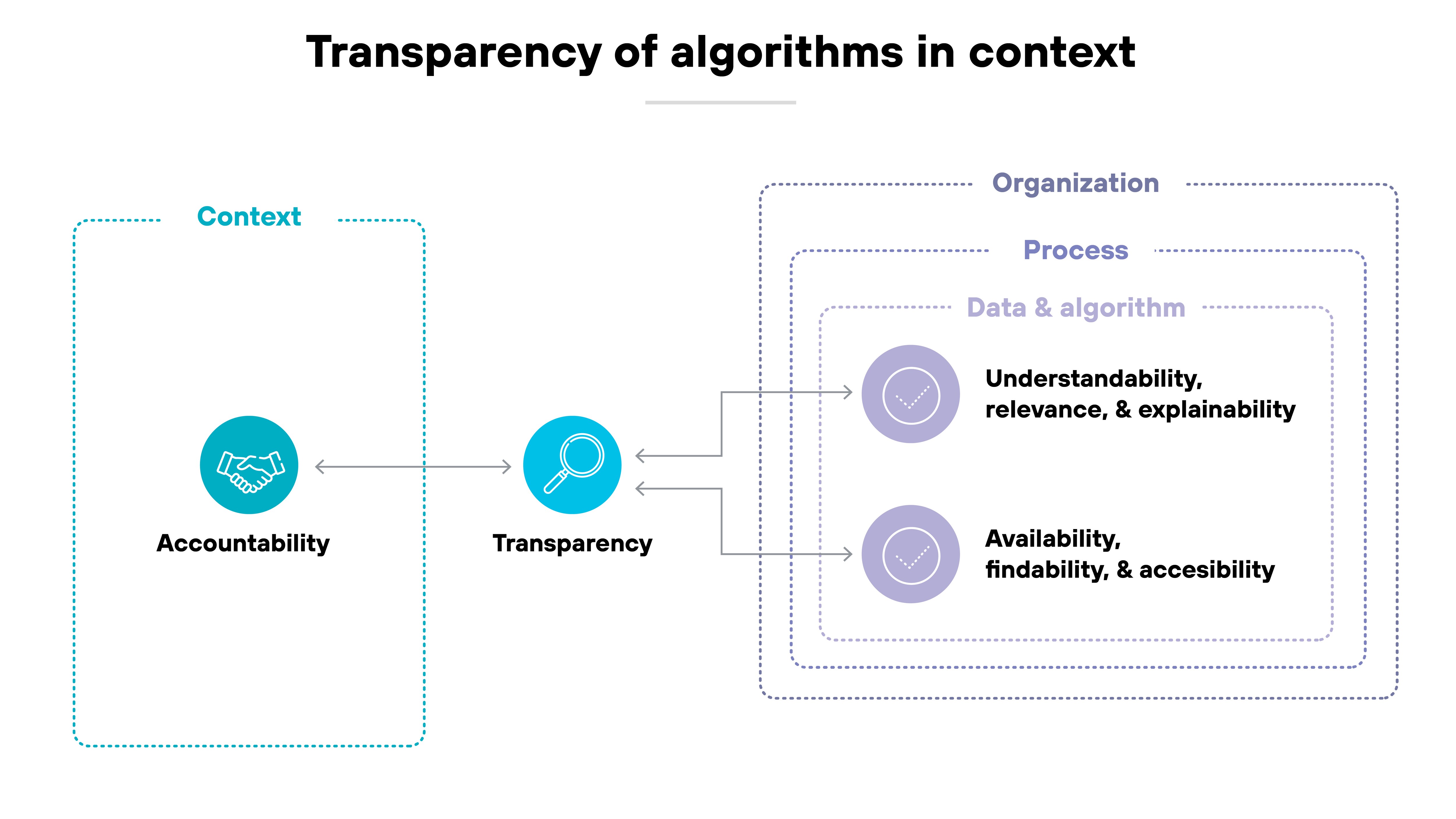

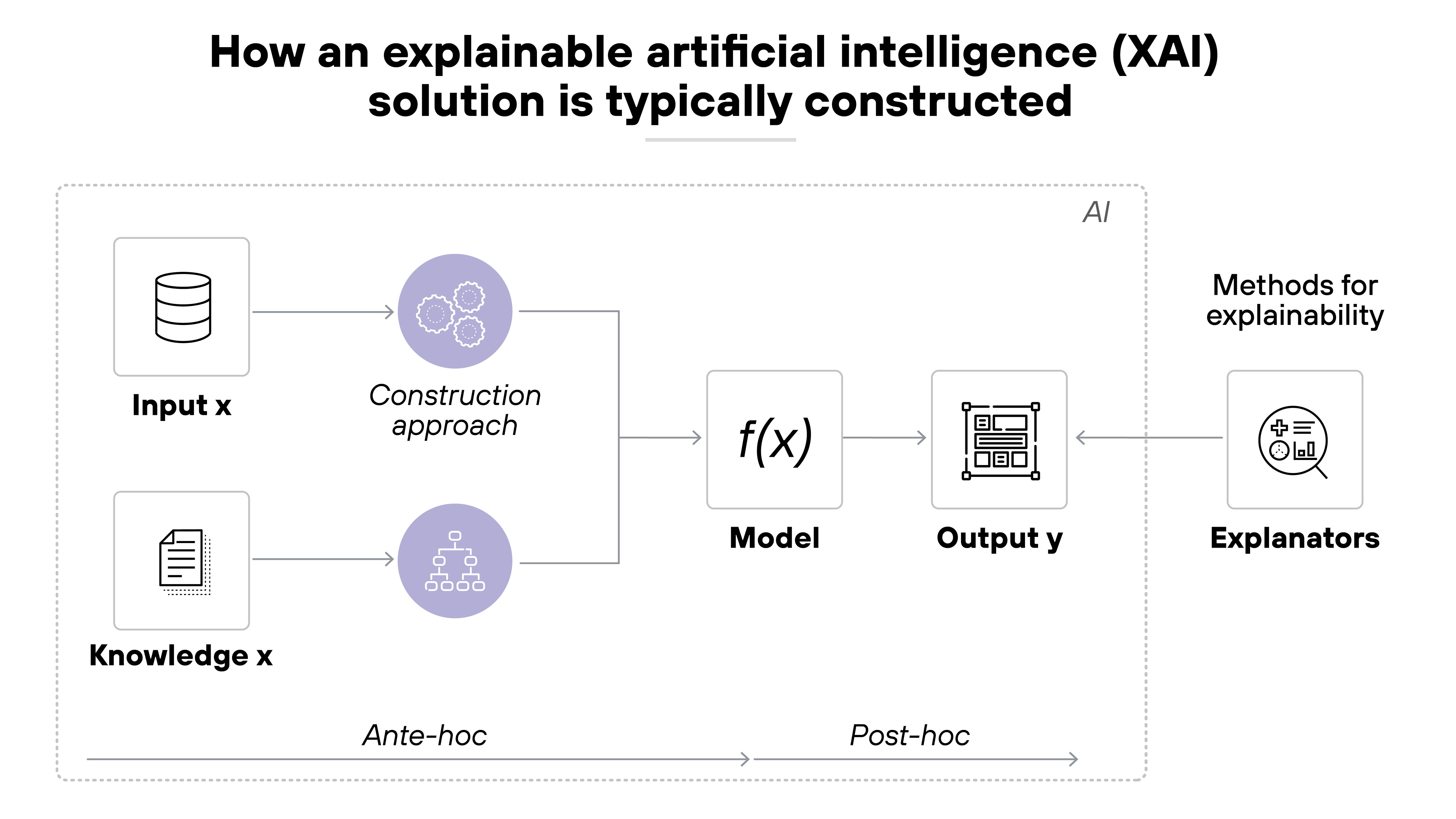

Algorithmic transparency and explainability

GenAI models are often complex. Their outputs can be hard to trace. And in many cases, it's not clear how or why a decision was made.

That's where transparency and explainability come in.

They're related—but not the same.

Transparency is about visibility. It means knowing how the model works, what data it was trained on, and what its limits are. It also includes access to documentation, performance metrics, and input/output behavior.

Explainability goes further. It focuses on understanding. Can a human interpret the model's decision in a way that makes sense?

That's important.

If an AI system declines a loan or flags a health condition, users will want to know why. And if it can't explain itself, that creates issues: operationally, legally, and ethically.

Here's why it matters:

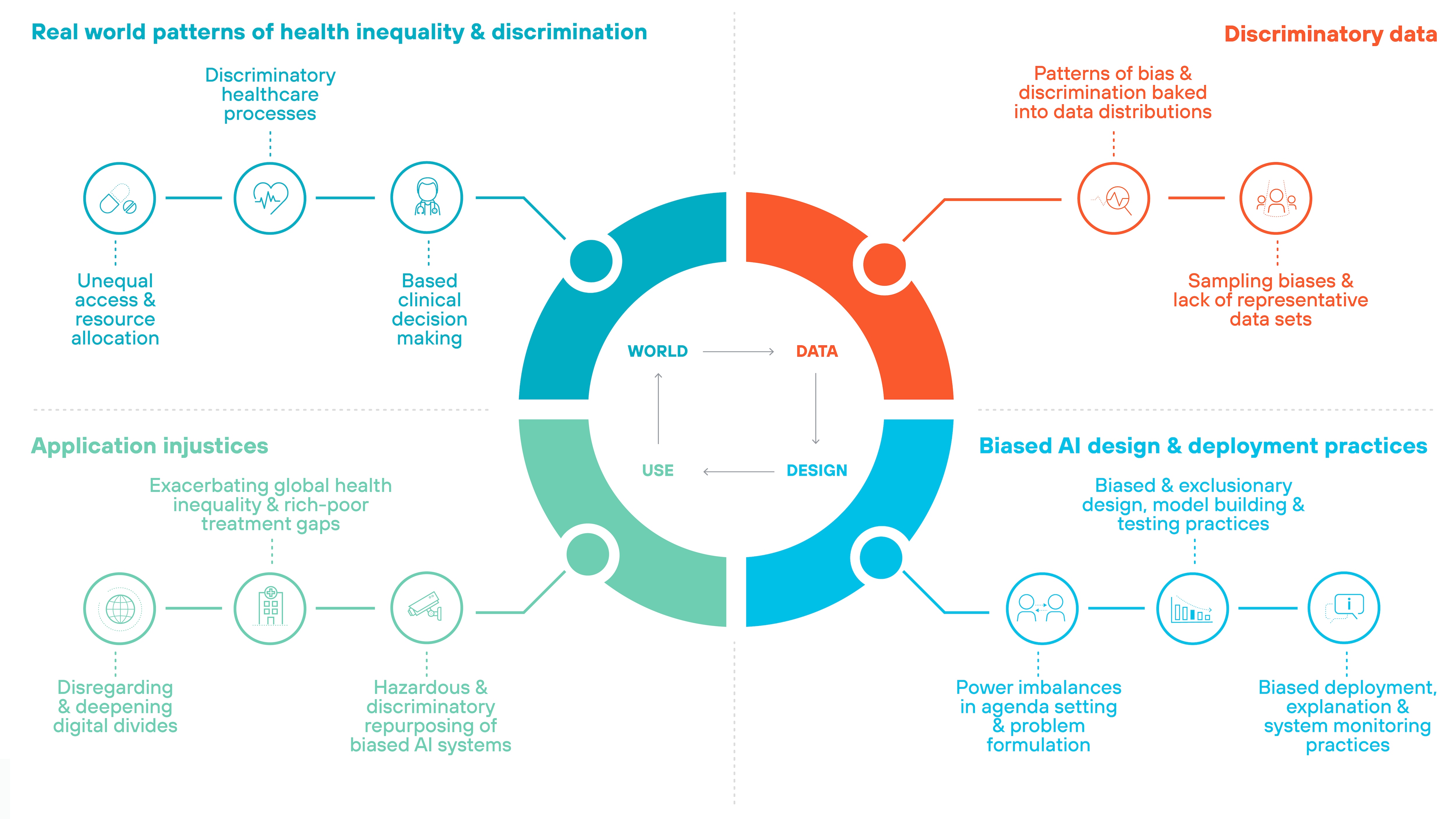

Without transparency, bias is harder to detect. If a model is trained on skewed data, it may reinforce unfair outcomes. But if no one can see inside, there's no way to audit or correct the behavior.

Lack of explainability also makes systems harder to improve. Developers can't debug what they don't understand. And users may stop trusting the system—especially in high-stakes environments.

It's also a privacy concern.

Some models memorize parts of their training data. If that includes sensitive information, it could leak during inference. Without transparency into how the model was trained, those risks may go unnoticed.

In short:

If you can't explain what the model is doing, you can't secure it, govern it, or expect others to trust it.

- Document every model in use: Include its function, training data sources, and known limitations.

- Apply explainability techniques: Use methods like feature importance, saliency maps, or natural language justifications, chosen to fit the use case.

- Design for transparency: Clarify what the model sees, what it can output, and where human oversight is involved.

- Continuously monitor outputs: Watch for bias, drift, or unusual behavior and trigger a review when necessary.

- Align with legal and compliance teams: Ensure transparency practices support regulatory requirements.

- Avoid black-box models in sensitive areas: When you must use them, add guardrails to reduce risk.

- Make explanations meaningful: The goal isn't just to generate an explanation. It's to help people understand what the model is doing.
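As one illustration of the feature importance technique mentioned above, the sketch below implements permutation importance: shuffle one input column at a time and measure how much accuracy drops. It treats the model as an opaque `predict` function, so it applies to black-box systems too. The function names and the toy loan rule in the usage example are hypothetical, and rows are assumed to be tuples of features.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows where the prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[col] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with only this one feature replaced.
            shuffled = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, column)]
            drops.append(base - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Usage: a toy rule that approves loans on income alone and ignores age.
rows = [(30, 25), (80, 40), (45, 60), (90, 33), (20, 51), (70, 29), (55, 47), (35, 38)]
approve = lambda r: r[0] > 50
labels = [approve(r) for r in rows]
income_importance, age_importance = permutation_importance(approve, rows, labels)
```

Here the income score comes out positive while the age score is zero, which matches what the rule actually does. A score like this is only a starting point. It still has to be turned into an explanation that the affected person can understand.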