What Is Generative AI (GenAI)? The Definitive Guide

Generative AI is a class of machine learning models that create new content such as text, images, or code by learning patterns from vast datasets.

These models, often built on transformer or diffusion architectures, generate outputs that resemble human-created data. Unlike traditional AI, which classifies or predicts, generative AI produces original material in response to prompts or contextual inputs.

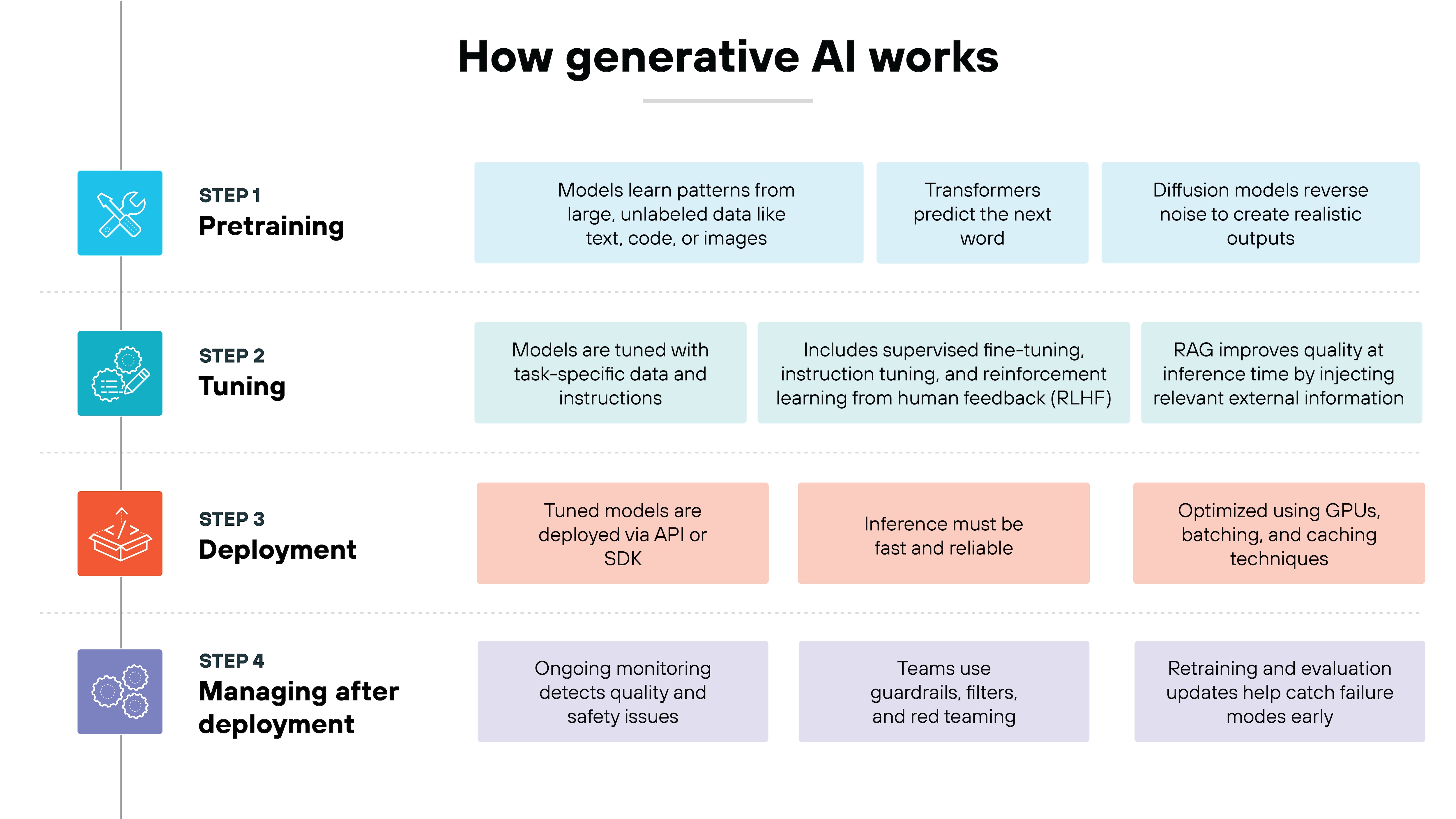

How does generative AI work?

Most generative AI systems follow the same basic path from training to real‑world use.

The details vary across models and domains. But the workflow stays similar.

First a model learns broad patterns. Then it's tuned, deployed, and monitored. Each phase shapes what the model can do—and how reliably it can do it.

1. Pretraining on massive datasets

Most modern generative AI models start with a process called self-supervised learning.

That means they learn patterns without needing labeled examples. Instead, they try to predict missing or corrupted parts of their input—like the next word in a sentence or a blurry patch in an image.

To learn those patterns, models are trained on massive datasets. These might include web pages, code, images, or documents. The data doesn't need to be labeled by humans. Just collected at scale.

What the model learns depends on how it's built:

Transformer models usually predict the next word.

Diffusion models learn how to turn random noise into realistic images.

But the goal is the same: to create a general-purpose model that understands structure and can generate something new.

2. Tuning for specific tasks

Pretrained models aren't well aligned to specific tasks or safety requirements by default.

They have broad knowledge but no instruction. So they need to be tuned.

-

That starts with supervised fine-tuning.

A curated dataset teaches the model how to behave on specific tasks.

-

Then comes instruction tuning.

This step improves how models respond to natural-language instructions, like “summarize this” or “write code for that.”

-

To refine things further, some teams use reinforcement learning from human feedback (RLHF).

Human raters score model outputs. That feedback is then used to optimize the model's behavior.

- What Is RLHF? Reinforcement Learning from Human Feedback

- What Is Retrieval-Augmented Generation (RAG)? An Overview

3. Deploying and serving the model

Once tuned, the model gets deployed, usually via API or SDK. Organizations might host it in the cloud or embed it into applications.

Inference needs to be fast. But generative models are large.

That means they often run on GPUs. Sometimes on clusters of them. Batching, caching, and quantization can help improve performance.

The goal is to serve real-time generations reliably. Whether that's text completions, code, images, or multimodal content.

4. Managing the model after deployment

Post-deployment is where operational risk shows up.

Models may drift over time. Inputs might change. New misuse patterns may emerge.

So teams monitor for quality, safety, and abuse. That includes detecting hallucinations, toxic content, jailbreak attempts, and prompt injection.

Some organizations run red teaming exercises before launch. Others rely more on real-time filters and guardrails in production.

Over time, many also retrain the model or update evaluation pipelines, looking for signs of drift or misuse before they cause harm.

What are the different generative AI model architectures?

Not all generative models work the same way.

Some predict the next word in a sentence. Others denoise random noise into realistic images. Each approach has its own mechanics, strengths, and weaknesses.

Understanding how different model types work can help explain why they're used in different domains, from text to vision to anomaly detection.

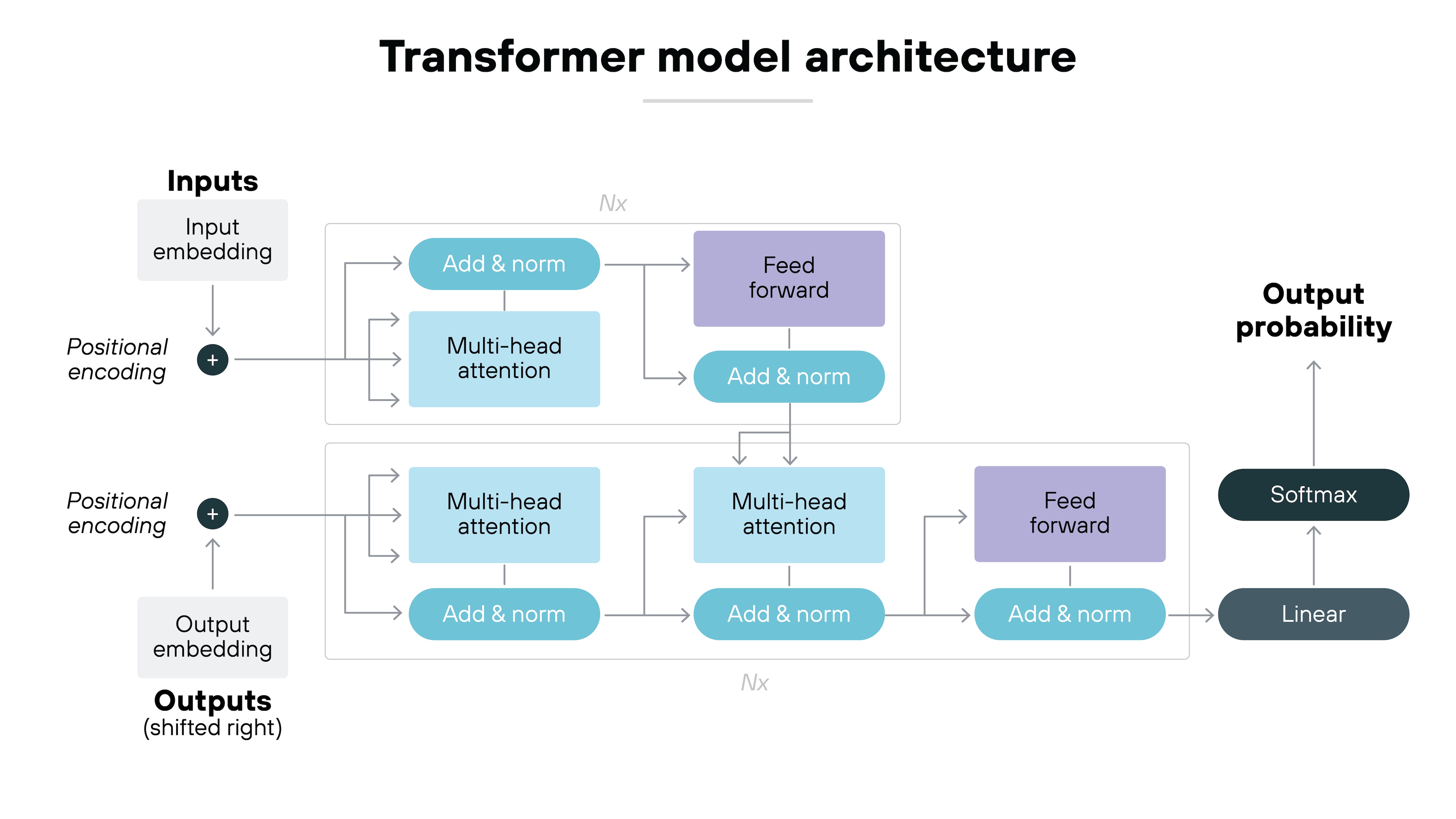

Transformers

Transformers are the backbone of most modern generative AI models.

They work by comparing every part of the input to every other part so the model can understand context and meaning. That applies to text, code, or even image fragments.

They're trained to predict what comes next. For example, the next word in a sentence, based on everything before it. This approach is what powers language models developed by OpenAI, Anthropic, Google, Meta, etc.

Transformers are good at handling long inputs and scaling to very large datasets. That's why they've become the go-to choice for generating text, writing code, and even mixing multiple data types.

Used for: Text generation, code completion, document summarization, chatbots, multimodal interfaces

Generative adversarial networks (GANs)

Generative adversarial networks (GANs) use two models working against each other.

One generates new content. The other tries to tell whether that content is real or fake. As they compete, both models get better—one at generating, the other at detecting.

GANs became popular for creating realistic images.

But they can be hard to train. They don't always produce stable results, and sometimes they get stuck generating only a few types of output.

Today, GANs are still used for things like improving image quality, translating between styles, or generating content without needing matched pairs of data.

Used for: Image synthesis, video generation, super-resolution, style transfer, domain adaptation

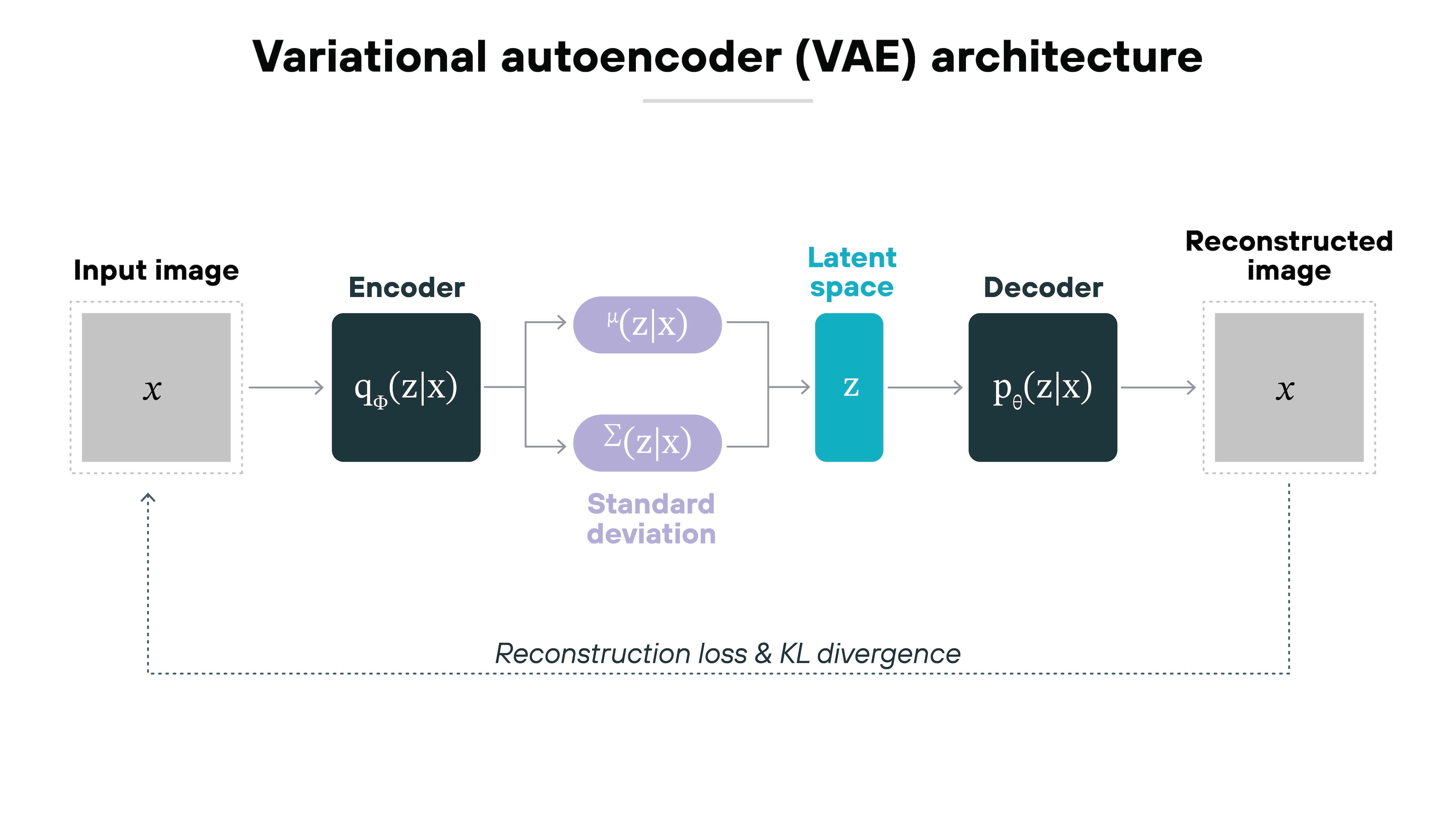

Variational autoencoders (VAEs)

Variational autoencoders (VAEs) work by first compressing the input—like an image or a file—into a simpler version with fewer details. Then they rebuild the original from that compressed version.

This makes them useful for spotting unusual patterns or creating controlled variations of the original input. For example, they can be used to detect errors or generate similar-but-different outputs.

The results are often less sharp than what GANs or diffusion models produce. But VAEs are easier to train and give developers more control over what the model generates.

Used for: Anomaly detection, image reconstruction, controllable generation, representation learning

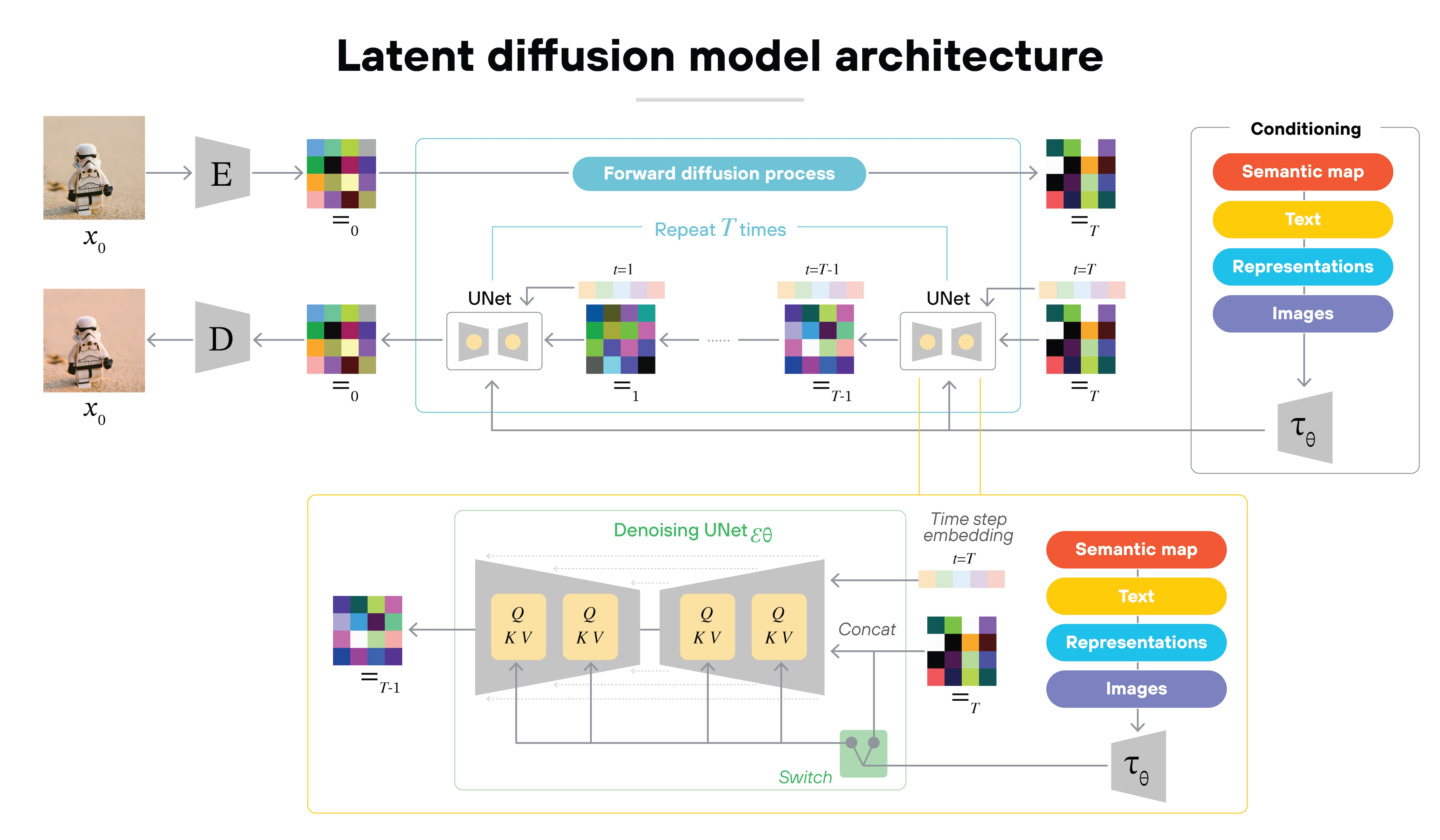

Diffusion models

Diffusion models create images by starting with random noise, like static on a screen.

Then they remove the noise in small steps until a clear image forms. This method is now widely used in image generation tools like DALL·E, Midjourney, and Stable Diffusion.

It takes longer to generate results compared to other models. But the images are often more detailed, and the process tends to be more reliable.

Used for: Text-to-image generation, inpainting, video generation, 3D synthesis

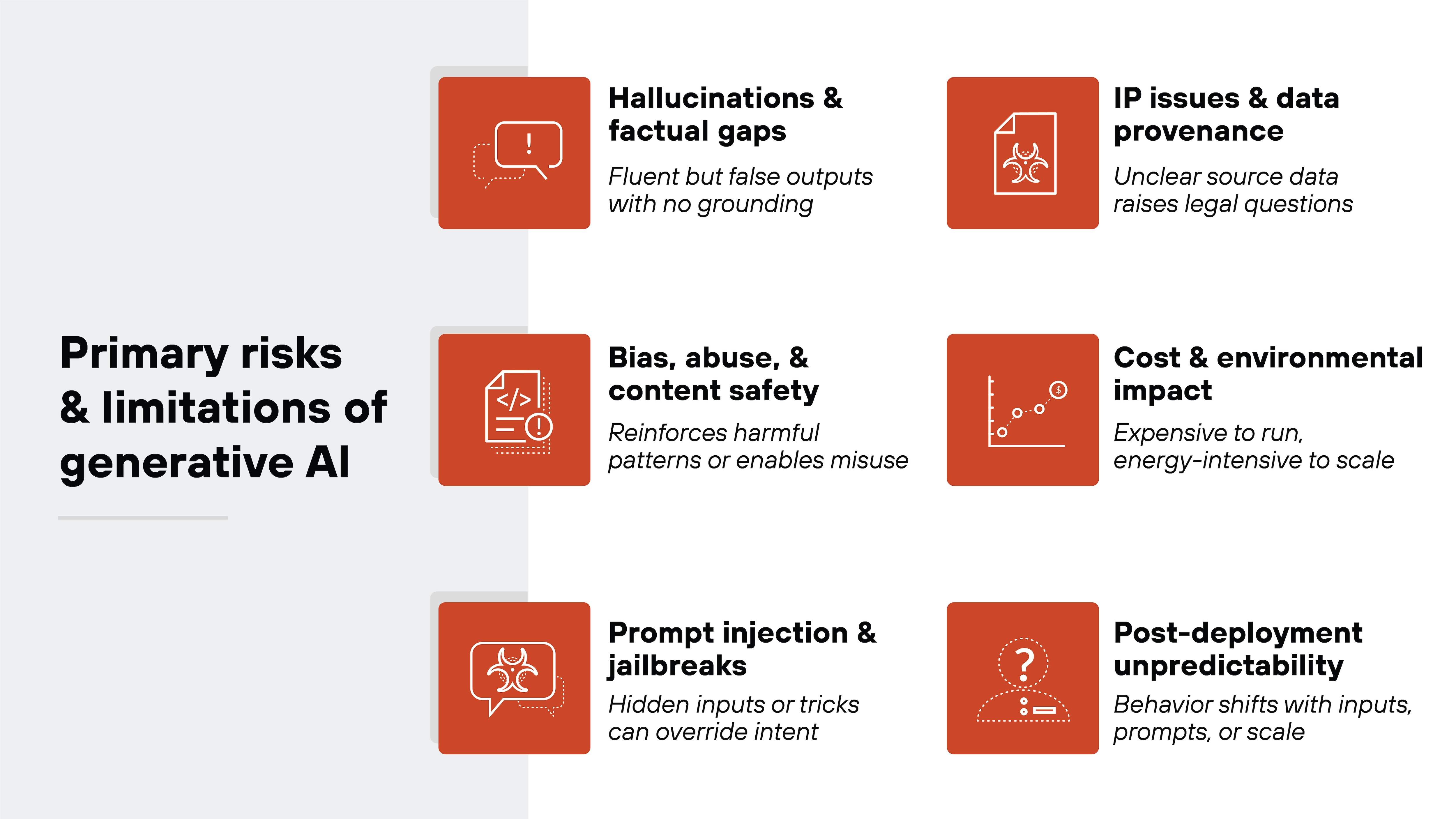

What are the limitations and risks of generative AI?

Generative AI systems are capable but not without tradeoffs. Their outputs may look fluent or convincing. But that doesn't mean they're accurate, fair, or safe.

The underlying models aren't fully predictable. And their behavior can change based on prompts, context, or scale.

Which means: the risks aren't just theoretical. They're already shaping how generative AI is built, deployed, and governed.

Hallucinations and factual gaps

Generative models often produce content that's fluent but false. These are known as hallucinations. They occur when models generate plausible-sounding text that isn't grounded in any real data.

Why? Because many models are trained to predict the next word. Not to verify facts. And without an external source of truth, there's no guarantee their answers are accurate.

This becomes a serious issue in high-stakes domains. Especially when users assume the output is trustworthy.

Bias, abuse, and content safety

Generative models reflect the data they were trained on. That includes social bias, stereotypes, and harmful language. Which means they can amplify existing harms or introduce new ones.

They can also be used maliciously. Attackers might generate misinformation, impersonate individuals, or automate abuse.

And even without malicious intent, generative content can cause offense or harm if left unchecked.

To manage that, many systems use content filters and moderation layers. But the underlying risk remains.

Prompt injection and jailbreaks

Generative models are susceptible to indirect prompt manipulation.

That includes prompt injection—where hidden instructions override user intent. And jailbreaks—where users trick the model into bypassing safety controls.

Why does this happen? Because models aren't reasoning about intent. They're following text patterns. So even minor phrasing tweaks can cause major behavior changes.

These vulnerabilities are hard to detect. And even harder to fully prevent.

IP issues and data provenance

Many widely used generative models are trained on public web data. That includes copyrighted material, proprietary datasets, and content with unclear licensing.

This raises questions about what models are allowed to generate. And what counts as derivative work. It also creates legal risk if outputs are used commercially without knowing where the underlying data came from.

Provenance remains a challenge. Most models don't track which data contributed to which output.

Cost and environmental impact

Training and running generative models is resource-intensive. It requires large GPU clusters, specialized hardware, and ongoing compute for deployment.

That makes them expensive to scale. And it has environmental implications. Especially around energy use, water consumption, and carbon emissions.

The bigger the model, the higher the cost. And not just financially. Also in terms of who has access and who doesn't.

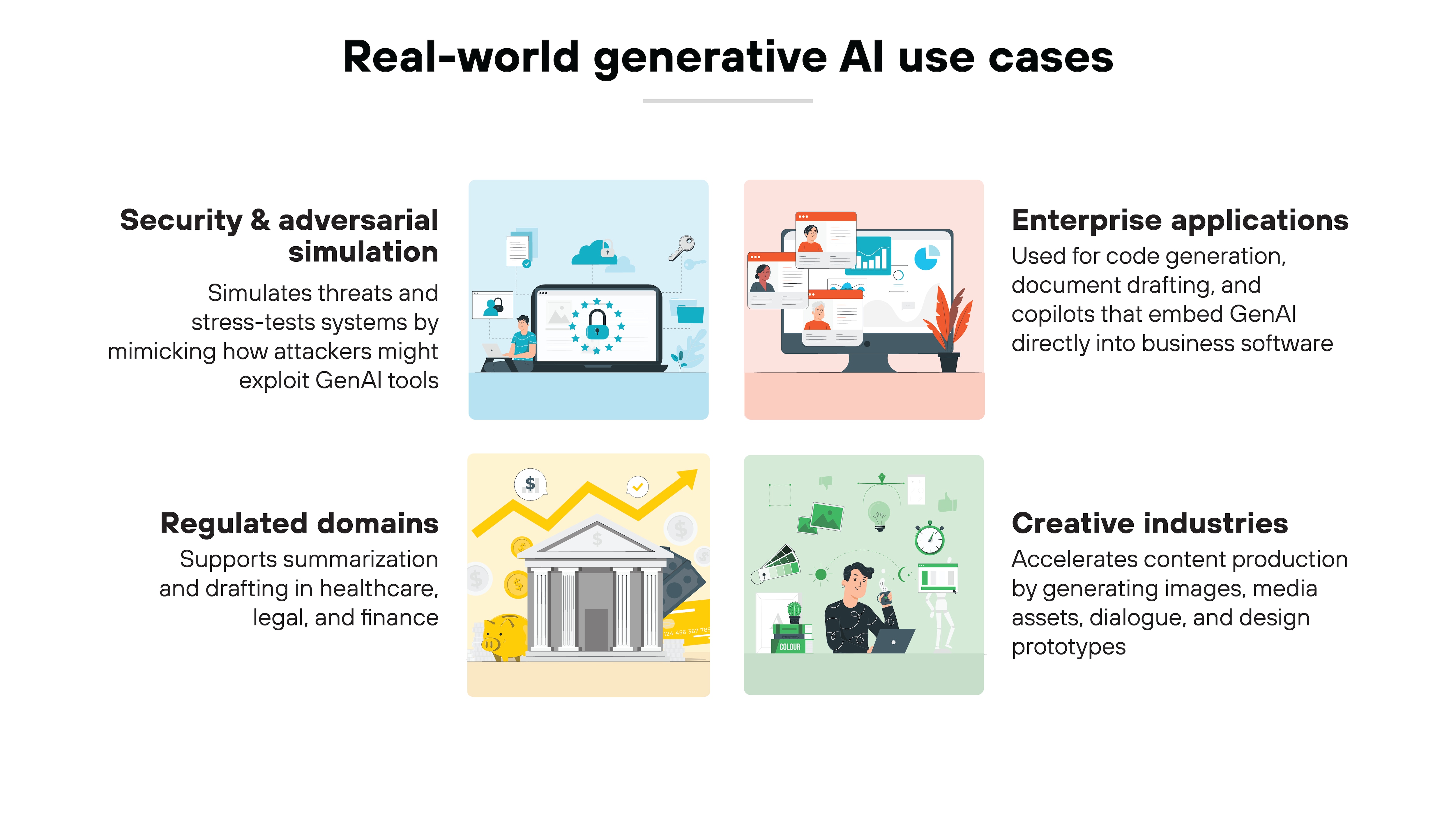

Real-world use cases for generative AI

Generative AI isn't limited to research labs or experimental prototypes. It's already being used across a wide range of industries.

From software development to security testing, generative systems are being embedded into everyday workflows. But the way they're used—and what they're used for—depends on the domain.

Here's how the technology is showing up in practice.

Enterprise applications

In enterprise settings, generative AI is used to automate content generation and streamline internal workflows. That includes generating code, drafting documentation, and summarizing internal reports.

-

One of the most common patterns is embedding models into copilots. These tools help users complete tasks directly inside business applications.

-

Another trend is retrieval-augmented generation. That's where generative models pull in context from internal data sources to produce more accurate answers.

These tools aren't just productivity boosters. They're changing how employees interact with systems and how knowledge moves inside organizations.

Creative industries

Creative teams use generative models to accelerate design and production.

In design and media, models can generate images, storyboard assets, or text-based concept drafts.

In game development, they're used to build environments, populate dialogue trees, and prototype levels.

Some tools use image diffusion models. Others rely on large language models (LLMs) or audio synthesis systems. The goal is to reduce the time between concept and usable output.

It's not about replacing creative work. It's about scaling it.

Regulated domains

Healthcare, finance, and legal fields are exploring GenAI for summarization, drafting, and question-answering.

In healthcare, models are used to summarize clinical notes and assist with documentation.

In legal, they help review contracts and surface relevant clauses.

In finance, they're being tested for risk analysis and reporting.

The stakes are higher in these domains. So most deployments involve strict controls, oversight, and human review. Generative models help. But they don't make decisions on their own.

Security and adversarial simulation

Generative AI is also being used in cybersecurity.

One application is red teaming, where models simulate phishing attempts or generate synthetic threats to test defenses. Another is adversarial simulation. That's where teams test how models respond to indirect or hostile prompts.

Some use cases are offensive. Others are defensive. Either way, generative models are helping teams test systems the way attackers might.

Which means:

They're no longer just targets in the security stack. They're tools too.

- The Role of Generative AI in Cybersecurity

- What Is AI Red Teaming? Why You Need It and How to Implement