What Is Data Discovery?

Data discovery is the process of identifying and exploring data within an organization to better understand the data’s meaning and potential uses. The core processes of data discovery involve analyzing and visualizing data from various sources to identify patterns, trends, and relationships to gain insights and inform decision-making.

As it pertains to data security in the cloud, data discovery focuses on identifying, analyzing, and understanding the data assets within an organization’s cloud infrastructure. As application environments grow more complex, data discovery becomes essential to maintaining security and compliance, because it gives organizations visibility into their data, including its sources, storage locations, and usage patterns. With that visibility, organizations can make informed decisions when establishing proper access controls, enforcing encryption, and ensuring compliance with data protection regulations.

Figure 1: Data discovery

How Data Discovery Works

Data discovery plays a pivotal role in helping organizations grasp the nuances of their expansive datasets. Organizations frequently wrestle with data sprawl, which is exacerbated by ceaseless data accumulation and a lack of visibility into their data repositories. Data discovery counters that sprawl by giving decision-makers an accurate picture of what data exists. The typical data discovery process relies on data profiling, exploration, and visualization. These techniques illuminate the data’s structure, content, and quality, giving users a foundational understanding of it.
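To make the profiling step concrete, here is a minimal Python sketch that profiles a list of records, assuming the data has already been loaded as dictionaries. The function name `profile` and the sample fields are hypothetical; real profiling tools also infer formats, value ranges, and cross-column relationships.

```python
from collections import Counter


def profile(records):
    """Summarize structure, content, and quality for a list of dict records."""
    # Structure: the union of all keys seen across records
    columns = sorted({key for row in records for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in records]
        # Treat absent keys, None, and empty strings as missing
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "types": sorted({type(v).__name__ for v in present}),  # content
            "missing": len(values) - len(present),  # quality
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return report


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "ana@example.com", "country": "US"},
        {"id": 2, "email": "", "country": "US"},
        {"id": 3, "email": "li@example.com", "country": "DE", "phone": "555-0100"},
    ]
    for column, stats in profile(sample).items():
        print(column, stats)
```

Even this small report surfaces a missing email and a sparsely populated `phone` column, the kind of quality signal discovery is meant to expose.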

Data analysts, business analysts, and other stakeholders use data discovery tools to explore data and uncover hidden insights. These tools include data profiling software, visualization tools, and analytics platforms that let users analyze data in real time. Because data never stops flowing, automated tooling helps organizations keep up with the evolving landscape and adapt their security posture as it changes. Without automation, the information gathered would quickly become stale and ineffective, leaving the organization exposed.

Shadow data, meaning data that is created, copied, or stored outside the organization’s sanctioned and monitored repositories, presents a significant concern in this context. Data discovery efforts help uncover these hidden repositories, which typically reside outside official channels and are easily overlooked. The adoption of microservices and the complex tapestry of multicloud architectures exacerbate the challenge.

Data Discovery in the Cloud

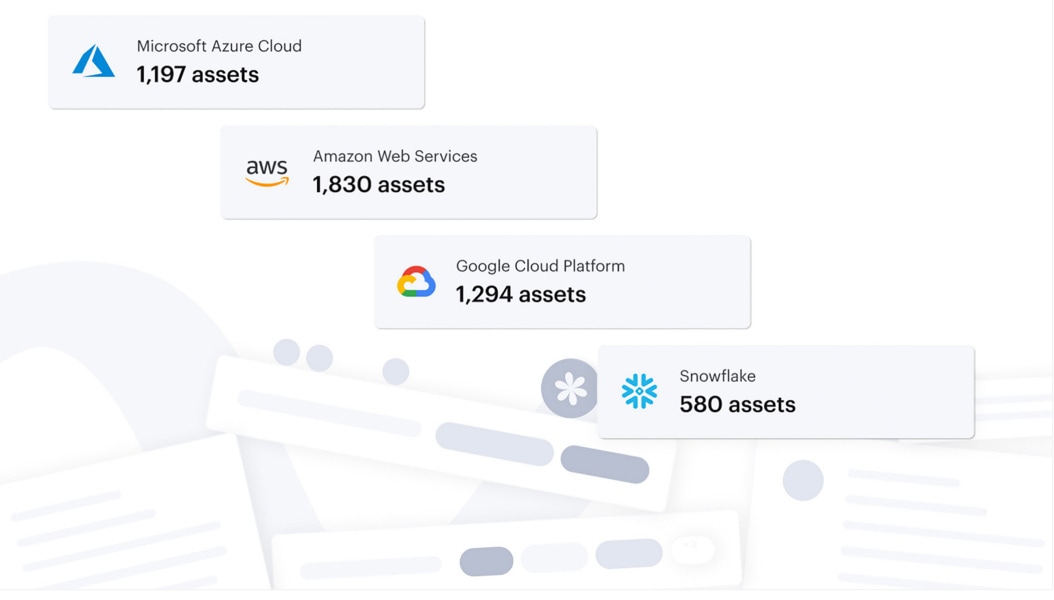

In terms of cloud security, data discovery involves locating and examining data stored across various cloud platforms, including public, private, and hybrid clouds. Modern data security posture management (DSPM) solutions automate this process by continuously scanning across all cloud providers, services, and data stores to discover sensitive data assets. Discovery enables organizations to identify sensitive information, assess potential risks, and implement appropriate security controls. In addition to shadow data, data discovery helps uncover shadow IT resources, which may contain unprotected data or introduce vulnerabilities. Through continuous monitoring and data analysis, security teams can respond to emerging threats, adapt to changes in the threat landscape, and uphold regulatory compliance.
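A heavily simplified sketch of the scanning step follows, assuming each data store’s objects have already been read into text. The store names and the three regular-expression detectors are illustrative assumptions, not how any particular DSPM product works; production classifiers add validation, context, and far broader coverage.

```python
import re

# Illustrative detectors only (assumption); real classifiers are far richer.
DETECTORS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def scan_stores(stores):
    """Map each store name to the sorted sensitive data types found in its objects."""
    findings = {}
    for name, objects in stores.items():
        found = {
            label
            for text in objects
            for label, pattern in DETECTORS.items()
            if pattern.search(text)
        }
        if found:  # only report stores that hold sensitive data
            findings[name] = sorted(found)
    return findings


if __name__ == "__main__":
    # Hypothetical stores across providers, with object contents already read as text
    stores = {
        "s3://hr-exports": ["name,ssn\nAna,123-45-6789", "contact: ana@example.com"],
        "postgres://app/logs": ["GET /health 200"],
        "gcs://tmp-backup": ["key=AKIAABCDEFGHIJKLMNOP"],
    }
    print(scan_stores(stores))
```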

Data Discovery: The Key to Data Classification

Data discovery and data classification are closely intertwined processes, as both contribute to safeguarding data and ensuring compliance with data protection regulations in cloud environments.

By categorizing data based on its sensitivity and regulatory requirements, data classification allows organizations to determine the right security controls for each data asset. Data discovery facilitates classification by first locating and understanding the data. Organizations can then implement tailored security measures for each data category, ensuring that sensitive information is adequately protected.
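A classification policy of this kind can be sketched as a mapping from detected data types to sensitivity tiers, with each tier implying a set of minimum controls. The tier names, type labels, and control names below are hypothetical placeholders for an organization’s own policy.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical policy: which detected data types imply which tier
TYPE_TIERS = {
    "email": Sensitivity.CONFIDENTIAL,
    "us_ssn": Sensitivity.RESTRICTED,
    "card_number": Sensitivity.RESTRICTED,
}

# Hypothetical minimum controls per tier
CONTROLS = {
    Sensitivity.PUBLIC: [],
    Sensitivity.INTERNAL: ["sso_only"],
    Sensitivity.CONFIDENTIAL: ["sso_only", "encryption_at_rest"],
    Sensitivity.RESTRICTED: ["sso_only", "encryption_at_rest", "access_review", "no_public_access"],
}


def classify(found_types, default=Sensitivity.INTERNAL):
    """An asset's tier is the highest tier among its detected data types."""
    return max((TYPE_TIERS.get(t, default) for t in found_types), default=default)


def required_controls(found_types):
    return CONTROLS[classify(found_types)]


if __name__ == "__main__":
    print(classify(["email", "us_ssn"]).name, required_controls(["email", "us_ssn"]))
```

Taking the highest tier among detected types means a single Social Security number in an otherwise mundane export is enough to treat the whole asset as restricted.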

Additionally, data discovery and classification enable organizations to maintain a proactive security posture and achieve regulatory compliance in the cloud. By continuously monitoring and analyzing data, security teams can identify potential vulnerabilities, misconfigurations, or unauthorized access to sensitive data. Teams can then respond to emerging threats and adapt security controls as needed, ultimately ensuring the safety and integrity of their data.
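Continuous monitoring often comes down to correlating classification results with configuration state. This sketch assumes a hypothetical `DataStore` record whose `public` and `encrypted` flags have already been collected from the cloud provider’s configuration. It flags only sensitive stores, so exposed but non-sensitive assets do not generate noise.

```python
from dataclasses import dataclass


@dataclass
class DataStore:
    name: str
    sensitive: bool  # set by discovery and classification
    public: bool  # e.g., a storage policy allows anonymous reads (assumed flag)
    encrypted: bool  # encryption at rest enabled (assumed flag)


def posture_findings(stores):
    """Return (store_name, issue) pairs for sensitive stores with risky configuration."""
    findings = []
    for s in stores:
        if not s.sensitive:
            continue
        if s.public:
            findings.append((s.name, "sensitive data publicly accessible"))
        if not s.encrypted:
            findings.append((s.name, "sensitive data not encrypted at rest"))
    return findings


if __name__ == "__main__":
    inventory = [
        DataStore("hr-exports", sensitive=True, public=True, encrypted=False),
        DataStore("marketing-assets", sensitive=False, public=True, encrypted=False),
        DataStore("billing-db", sensitive=True, public=False, encrypted=True),
    ]
    for finding in posture_findings(inventory):
        print(finding)
```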

Benefits of Data Discovery

Organizations are inundated with data from many sources, heightening the complexity of understanding and accurately classifying it. It’s not merely about distinguishing between diverse data types. Organizations must grasp the intricacies of the data’s origins, interrelationships, and associated risks. Every dataset, from customer details and internal communications to proprietary algorithms, requires security measures matched to its inherent risk and value.

While correct classification is paramount to safeguarding sensitive data, the sheer volume of data and the evolving digital landscape amplify the challenge. Remote work, multicloud strategies, and the proliferation of Internet of Things (IoT) devices further blur the boundaries of where data is stored and how it moves. Without knowing what data exists and how it should be classified, ensuring that it has appropriate protections is virtually impossible.

Better Decision-Making

Data discovery helps organizations make better, data-driven decisions by providing insights into their data. By uncovering patterns, trends, and relationships in the data, organizations can make informed decisions based on accurate and relevant information.

Maintaining Compliance

Data discovery helps identify the various types of sensitive data governed by legal or regulatory frameworks. Such data often carries stringent requirements for how it is protected and shared, with significant consequences if it is mishandled.

Improved Data Quality

Data discovery can help identify data quality issues, such as missing or inconsistent data. By addressing these issues, organizations can improve the overall quality of their data, improving the accuracy of their decisions.

Increased Efficiency

Data discovery can help organizations save time and resources by making the data they need quickly accessible. This reduces the need for time-consuming manual data exploration and analysis and cuts down on errors.

Competitive Advantage

Organizations that can effectively leverage their data through data discovery have a competitive advantage over those that don’t. Organizations can stay ahead of the competition by using data to make informed decisions and identify opportunities.

Reasons for Data Discovery

Modern enterprises collect and process vast amounts of information in the course of doing business. Some of that data originates from external parties such as vendors or customers, and some is generated by the organization’s own operations. Organizations need a complete understanding of where their data resides and what it contains to avoid exposing themselves to risk.

- Data classification: Data discovery can help organizations classify their data based on sensitivity and criticality. Organizations can then apply appropriate security controls and comply with data protection regulations.

- Access control: Data discovery can help organizations identify who has access to what data. With that knowledge, they can ensure that access is appropriate and compliant with regulations.

- Privacy compliance: Data discovery can help organizations identify personal data and ensure its protection aligns with privacy regulations such as GDPR, CCPA, and HIPAA.

- Threat detection: Data discovery can help organizations identify potential security threats by monitoring data access and usage patterns, so they can detect and respond to security incidents before they cause significant damage.

- Audit and compliance reporting: Data discovery can help organizations generate audit reports and compliance documentation to demonstrate compliance with regulations such as PCI DSS, SOX, and FISMA.

- Data retention and disposal: Data discovery can help organizations identify data that has exceeded its retention period and should be disposed of in compliance with regulations.
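The access-control use case can be sketched as a join between access grants and discovery results. The input shapes here (a list of `(principal, dataset)` grants and a per-dataset approval list) are assumptions for illustration; in practice they would be assembled from IAM policies, ACLs, and an access-request system.

```python
def access_review(grants, sensitive_datasets, approved):
    """
    grants: iterable of (principal, dataset) pairs (hypothetical input shape)
    sensitive_datasets: set of datasets that discovery flagged as sensitive
    approved: dict mapping dataset -> set of principals approved to access it
    Returns sorted (principal, dataset) pairs that should be reviewed.
    """
    return sorted(
        (principal, dataset)
        for principal, dataset in grants
        if dataset in sensitive_datasets and principal not in approved.get(dataset, set())
    )


if __name__ == "__main__":
    grants = [("alice", "payroll"), ("bob", "payroll"), ("ci-bot", "payroll"), ("bob", "wiki")]
    print(access_review(grants, {"payroll"}, {"payroll": {"alice"}}))
```

Non-sensitive datasets such as `wiki` are deliberately ignored, which keeps the review focused on the access that matters most.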

Efficient Data Management

Manual processes for data discovery and data analysis are only sufficient for the smallest of organizations. As organizations grow, the volume of data to locate and analyze rapidly outgrows what can be discovered through manual assessment.

Automated data discovery solutions are necessary to analyze the data held by modern enterprises. Basic tools can discover and analyze data in expected storage locations such as databases and shared storage, but discovering everything an organization has stored in the cloud or in shadow IT requires more advanced tooling.

Shadow IT encompasses systems and services unknown to IT and security teams, often created temporarily to accomplish a specific goal but left running well beyond their intended purpose. These systems often house sensitive information yet are poorly maintained.

Cloud resources pose another challenge for automated tools, as many discovery tools were designed for on-premises environments. Advanced discovery tools can analyze every cloud provider an organization uses to find where data resides and classify it by its contents, allowing teams to decide whether it should remain in that location or needs new security controls.
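One way to surface shadow data is to reconcile what scanning actually finds in each cloud account against the inventory of stores the organization already knows about. The function below is a minimal sketch under that assumption; the provider labels and store identifiers are made up.

```python
from collections import defaultdict


def reconcile(discovered, inventory):
    """
    discovered: list of (provider, store_id) pairs returned by scanning each cloud account
    inventory: set of store_ids the organization has registered and secured
    Returns (shadow, missing):
      shadow  -> {provider: [store_id, ...]} found in the cloud but absent from the inventory
      missing -> inventory entries no scan found (possibly deleted or renamed)
    """
    seen = set()
    shadow = defaultdict(list)
    for provider, store_id in discovered:
        seen.add(store_id)
        if store_id not in inventory:
            shadow[provider].append(store_id)
    missing = sorted(inventory - seen)
    return {p: sorted(ids) for p, ids in shadow.items()}, missing


if __name__ == "__main__":
    discovered = [
        ("aws", "s3://prod-data"),
        ("aws", "s3://tmp-export-2023"),
        ("gcp", "bq://analytics.events"),
        ("azure", "blob://old-backups"),
    ]
    inventory = {"s3://prod-data", "bq://analytics.events", "rds://legacy-crm"}
    print(reconcile(discovered, inventory))
```

Stores in `shadow` are candidates for classification and new controls; entries in `missing` suggest the inventory itself has drifted.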

Data Discovery FAQs

Data sprawl refers to the growing volumes of data produced by organizations and the difficulties this creates in managing and monitoring that data. As organizations collect more data and add storage systems and data formats, it can become difficult to know which data is stored where. Lacking this understanding can lead to increased cloud costs, inefficient data operations, and data security risks, as the organization loses track of where sensitive data is stored and consequently fails to apply adequate security measures.

To mitigate the impact of data sprawl, automated data discovery and classification solutions can be used to scan repositories and classify sensitive data. Establishing policies to deal with data access permissions can also help. Data loss prevention (DLP) tools can detect and block sensitive data leaving the organizational perimeter, while data detection and response tools offer similar functionality in public cloud deployments.
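As an illustration of how a DLP check might decide whether to block an outbound message, the sketch below looks for payment card numbers: a regular expression finds candidate runs of 13 to 19 digits, and the Luhn checksum filters out most random digit strings. This is an assumed single-detector example; real DLP engines combine many detectors with contextual signals.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens
CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right, subtracting 9 if over 9."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return checksum % 10 == 0


def should_block(payload: str) -> bool:
    """Block an outbound payload if it contains a Luhn-valid card-like number."""
    for match in CANDIDATE.finditer(payload):
        if luhn_valid(match.group()):
            return True
    return False


if __name__ == "__main__":
    print(should_block("invoice for card 4111 1111 1111 1111"))
    print(should_block("order id 4111111111111112"))
```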

Data in use refers to data that is actively being processed and is therefore held in computer memory, such as RAM, CPU caches, or CPU registers. Rather than sitting passively in a stable location, it moves through various systems, each of which could be vulnerable to attack. Data in use can be a target for exfiltration attempts because it may contain sensitive information such as payment card (PCI) data or personally identifiable information (PII).

To protect data in use, organizations can use encryption techniques such as end-to-end encryption (E2EE) and hardware-based approaches such as confidential computing. On the policy level, organizations should implement user authentication and authorization controls, review user permissions, and monitor file events.

Data loss prevention (DLP) tools can detect attacks on data in use and alert security teams. In public cloud deployments, data detection and response tools are better suited to this task.

Data management refers to the optimal organization, storage, processing, and protection of data. This involves implementing best practices, such as data classification, access control, data quality assurance, and data lifecycle management, as well as leveraging automation, advanced analytics, and modern data management tools.

Efficient data management enables organizations to quickly access and analyze relevant information, streamline decision-making, reduce operational costs, and maintain data security and compliance.

The data lifecycle describes the stages involved in a data project — from generating the data records to interpreting the results. While definitions vary, lifecycle stages typically include:

- Data generation

- Collection

- Processing

- Storage

- Management

- Analysis

- Visualization

- Interpretation

Managing data governance, classification, and retention policies can all be seen as part of a broader data lifecycle management effort.

Data retention and disposal involve defining and implementing policies for the storage, preservation, and deletion of data in accordance with legal, regulatory, and business requirements. Data retention policies specify how long data should be stored based on its type, purpose, and associated regulatory obligations. Disposal policies ensure that data is securely deleted or destroyed when it reaches the end of its retention period or is no longer required.

Proper data retention and disposal practices help organizations reduce the risk of data breaches and maintain compliance while minimizing storage costs.
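A retention policy can be checked mechanically once discovery has tagged records with a category and creation date. In this sketch the categories and retention periods are hypothetical, and records with no matching policy are surfaced for review rather than deleted.

```python
from datetime import date, timedelta

# Hypothetical retention periods per data category, in days
RETENTION_DAYS = {"access_logs": 365, "support_tickets": 3 * 365, "payroll": 7 * 365}


def retention_review(records, today):
    """
    records: iterable of (record_id, category, created_on) tuples, created_on a date.
    Returns (expired, no_policy): ids past their retention period, and ids whose
    category has no defined policy (to be reviewed rather than deleted automatically).
    """
    expired, no_policy = [], []
    for record_id, category, created_on in records:
        days = RETENTION_DAYS.get(category)
        if days is None:
            no_policy.append(record_id)
        elif created_on + timedelta(days=days) < today:
            expired.append(record_id)
    return expired, no_policy


if __name__ == "__main__":
    records = [
        ("log-1", "access_logs", date(2023, 6, 1)),
        ("log-2", "access_logs", date(2024, 6, 1)),
        ("pay-1", "payroll", date(2019, 1, 1)),
        ("misc-1", "scratch", date(2000, 1, 1)),
    ]
    print(retention_review(records, today=date(2025, 1, 1)))
```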

Compliance in data management involves adhering to legal, regulatory, and industry-specific requirements related to the collection, storage, processing, and transfer of data. Compliance measures include implementing security controls, data classification, access control, encryption, and regular audits. Key regulations that organizations may be required to comply with include:

- General Data Protection Regulation (GDPR)

- California Consumer Privacy Act (CCPA)

- Health Insurance Portability and Accountability Act (HIPAA)

- Payment Card Industry Data Security Standard (PCI DSS)

Threat detection in data management involves identifying and analyzing potential security threats and anomalies within an organization's data infrastructure. By monitoring data access, usage patterns, and network traffic, security teams can detect malicious activities, unauthorized access, or data exfiltration attempts.

Advanced techniques, such as machine learning and artificial intelligence, can be employed to automate threat detection, allowing for real-time analysis and response. Implementing robust threat detection mechanisms helps organizations protect sensitive data, mitigate data breaches, and maintain regulatory compliance.
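Machine learning models for this task are beyond a short example, but the underlying idea of comparing current behavior to a baseline can be sketched with a simple statistical z-score rule instead. The function name, the three-standard-deviation threshold, and the input shapes are assumptions for illustration.

```python
from statistics import mean, pstdev


def unusual_access(history, today_counts, threshold=3.0):
    """
    history: {user: [daily read counts over a baseline window]}
    today_counts: {user: read count today}
    Returns (user, z_score) pairs above the threshold; z_score is None for users
    with no baseline and inf when the baseline never varied but today's count rose.
    """
    flagged = []
    for user, count in today_counts.items():
        past = history.get(user)
        if not past:
            flagged.append((user, None))  # new identity touching data: no baseline yet
            continue
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            z = float("inf") if count > mu else 0.0
        else:
            z = (count - mu) / sigma
        if z > threshold:
            flagged.append((user, z))
    return flagged


if __name__ == "__main__":
    history = {"alice": [20, 22, 19, 21, 18], "bob": [5, 5, 5, 5, 5]}
    print(unusual_access(history, {"alice": 23, "bob": 400, "svc-new": 50}))
```

Here a modest rise for `alice` stays within her normal variation, while `bob` reading 400 objects against a flat baseline of 5, and an identity with no history at all, are both surfaced for investigation.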