What Is Cloud Network Security?

Cloud network security is a critical aspect of safeguarding containerized applications and their data in the modern computing landscape. It involves securing network communication and configurations for these applications, regardless of the orchestration platform in use. Cloud network security addresses network segmentation, namespaces, overlay networks, traffic filtering, and encryption for containers. By implementing cloud network security technologies and best practices, organizations can effectively prevent network-based attacks like cryptojacking, ransomware, and botnet command and control (C2) that can impact both public-facing networks and internal networks used by containers to exchange data.

Cloud Network Security Explained

All workloads run on the same network stack and protocols, regardless of whether they run on bare-metal servers, virtual machines, or in containers. In other words, containerized workloads are subject to many of the same network-based attacks as legacy applications, including cryptojacking, ransomware, botnet command and control (C2), and more.

Network-based security threats, however, can impact containers in two ways: via public-facing networks that connect applications to the internet and via internal networks that Kubernetes containers use to exchange data with each other.

Cloud network security focuses on securing network communication and configurations for containerized applications in general, regardless of the orchestration platform. It addresses aspects such as network segmentation, namespaces, overlay networks, traffic filtering, and encryption for containers.

Kubernetes network security targets network security within a Kubernetes cluster and encompasses Kubernetes-specific features, such as network policies, ingress and egress controls, namespace isolation, role-based access control (RBAC), and service mesh implementation.

Detecting signs of malicious activity on both types of networks requires both cloud network security for containers and Kubernetes network security. As these are distinct domains, they warrant separate discussions to cover their unique aspects. In this section, we’ll delve into the various aspects of cloud network security as it pertains to containers and discuss best practices for safeguarding your environment.

Cloud Network Security

Once deployed, containers need to be protected from attempts to steal proprietary data and compute resources. Cloud network security, a key aspect of Kubernetes security, proactively restricts unwanted communication and prevents threats from attacking your applications once deployed.

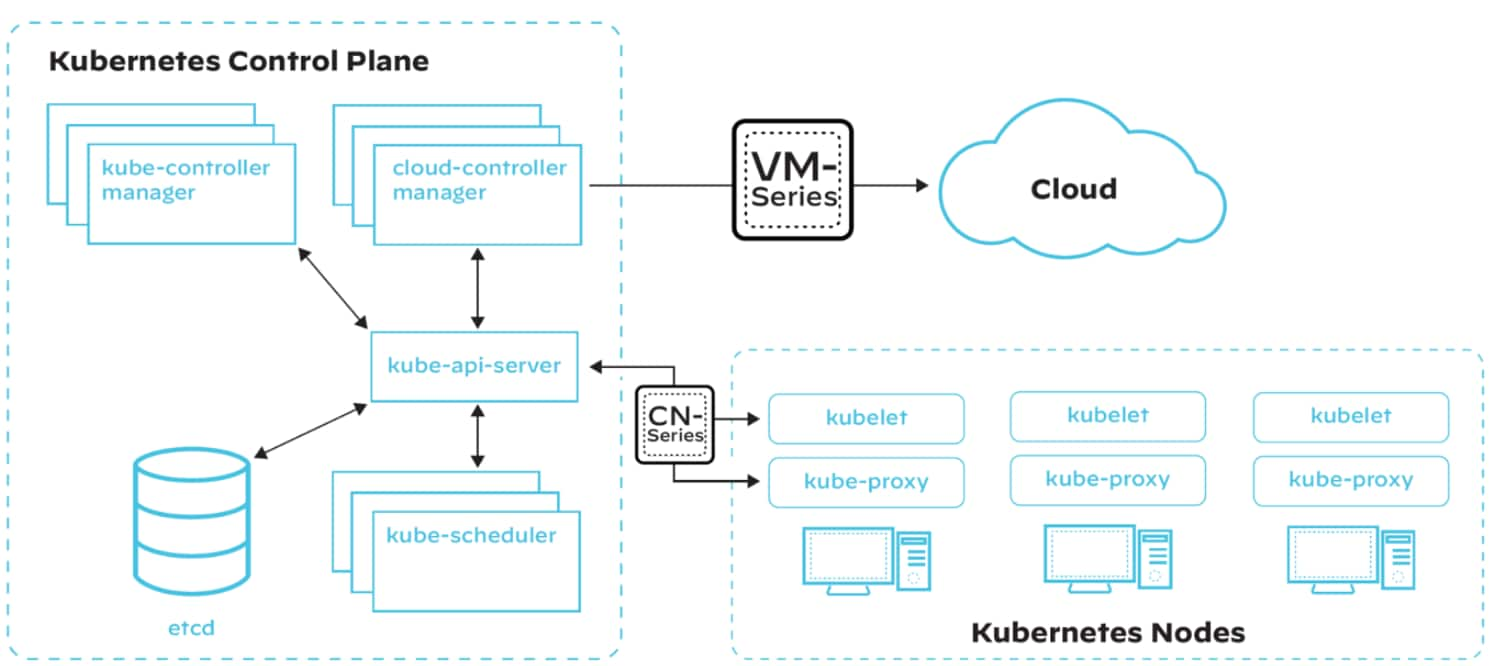

Containerized next-generation firewalls, web application and API security (WAAS), and microsegmentation tools inspect and protect all traffic entering and exiting containers (north-south and east-west), granting full Layer 7 visibility, and control over the Kubernetes environment. Additionally, containerized firewalls dynamically scale with the rapidly changing size and demands on the container infrastructure to provide security and bandwidth for business operations.

Figure 1: A simplified Kubernetes architecture with overlaid VM-Series (virtualized next-generation firewall) and CN-Series (containerized firewall tailored for securing Kubernetes-based containerized applications)

Network Segmentation

Network segmentation is the practice of dividing a network into smaller, isolated segments to limit unauthorized access, contain potential threats, and improve overall network performance. In containerized environments, security teams can achieve network segmentation through a variety of methods.

Network Namespaces

Network namespaces provide isolation between containers by creating a separate network stack for each, including their own network interfaces, routing tables, and firewall rules. By leveraging network namespaces, you can prevent containers from interfering with each other's network configurations and limit their visibility to only the required network resources.

Overlay Networks

Overlay networks create a virtual network layer on top of the existing physical network, which allows containers to communicate across different hosts as if they were on the same network. Popular overlay network solutions for containers include Docker's built-in overlay driver, Flannel, and Weave.

Network Partitions and Security Groups

Network partitions and security groups can further segment container networks by creating logical boundaries and applying specific firewall rules to restrict traffic between segments.

Traffic Filtering and Firewall Rules

Containerized next-generation firewalls stop malware from entering and spreading within the cluster, while also preventing malicious outbound connections used in data exfiltration and command and control (C2) attacks. Although shift-left security tools provide deploy-time protection against known vulnerabilities, containerized next-generation firewalls provide protection against unknown and unpatched vulnerabilities.

Traffic filtering and firewall rules are essential for controlling the flow of traffic between containers, as well as between containers and the host.

Egress and Ingress Filtering

Egress filtering controls the outbound traffic from a container, while ingress filtering controls the inbound traffic to a container. By applying egress and ingress filtering, you can limit the exposure of your containers to external threats and restrict their communication to only the necessary services.

Applying Firewall Rules to Container Traffic

Firewall rules can be applied at various levels, including the host, container, and network level. You can use Linux iptables or firewalld, for instance, to create rules that govern container traffic and protect your infrastructure from unauthorized access and malicious activities.
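As a minimal sketch of how a host-level rule might be assembled, the example below assumes a Docker host, where Docker documents the `DOCKER-USER` chain as the place for user-defined rules that are evaluated before Docker's own. The interface name `eth0` and the range `192.0.2.0/24` are placeholders, and the helper name is hypothetical. The function only builds the command. It does not run it.

```python
def docker_user_drop_rule(external_iface, allowed_cidr):
    """Build an iptables command that drops container-bound traffic arriving
    on `external_iface` unless its source is inside `allowed_cidr`.

    The command is returned as an argument list and is NOT executed here.
    """
    return [
        "iptables", "-I", "DOCKER-USER",  # insert at the top of DOCKER-USER
        "-i", external_iface,             # match packets entering this interface
        "!", "-s", allowed_cidr,          # ...whose source is outside the range
        "-j", "DROP",                     # and drop them
    ]


# Placeholder values: replace with your host's interface and trusted range.
rule = docker_user_drop_rule("eth0", "192.0.2.0/24")
print(" ".join(rule))
```

Returning an argument list rather than a shell string keeps the rule easy to review and to pass to `subprocess.run` later without shell quoting problems.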

Load Balancing and Traffic Routing

Load balancing and traffic routing are important for distributing traffic across multiple containers and ensuring high availability of your applications. Solutions like HAProxy, NGINX, or Kubernetes' built-in services can be used to route traffic to the appropriate container based on predefined rules and health checks.

Encryption and Secure Communication

Encrypting and securing communication between containers, and between containers and the host, is vital for protecting sensitive data and maintaining the integrity of your applications.

Transport Layer Security (TLS) for Container Traffic

TLS provides encryption and authentication for data transmitted over a network. By implementing TLS for container traffic, you can ensure that data transmitted between containers and between containers and the host is encrypted and secure from eavesdropping or tampering. You can achieve this by using tools like OpenSSL or Let's Encrypt to generate and manage TLS certificates for your containers.
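On the application side, a client running in a container can insist on verified, modern TLS. The sketch below uses Python's standard `ssl` module. The function name and the optional `ca_file` path are illustrative assumptions, not part of any particular product.

```python
import ssl


def make_client_context(ca_file=None):
    """Return a client-side TLS context that verifies the server.

    `ca_file` is an optional path to a private CA bundle (hypothetical);
    when omitted, the system trust store is used.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
# The default context already requires a valid certificate and hostname match.
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

The resulting context can be passed to `ctx.wrap_socket(...)` or to HTTP clients that accept an `ssl.SSLContext`.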

Securing Container-to-Container Communication

To secure communication between containers, you can use container-native solutions like Docker's built-in encrypted networks or third-party tools like Cilium, which provides API-aware network security for containers. These solutions enable you to implement encryption, authentication, and authorization for container-to-container traffic.

Securing Container-to-Host Communication

Ensuring secure communication between containers and the host can be achieved by using host-level encryption and authentication mechanisms, such as SSH or TLS-protected APIs, to control access to container management interfaces and data storage systems.

Kubernetes Network Security

Network Policies

Network policies are a key feature of Kubernetes that allows you to control the flow of traffic within your cluster and between your cluster and external networks. Modern tools make it possible for security teams to define policies that essentially determine who and what are allowed to access any given microservice. Organizations need a framework for defining those policies and making sure that they’re consistently maintained across a highly distributed container application environment.

Defining and Enforcing Network Policies

Kubernetes network policies are defined using YAML files, which specify the allowed traffic between components like pods, services, and namespaces. Once defined, these policies are enforced by network plugins that support the Kubernetes NetworkPolicy API, such as Calico or Cilium.
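To make the shape of such a policy concrete, here is a small sketch that builds a `NetworkPolicy` manifest as a Python dictionary mirroring the YAML structure. It is serialized as JSON, which `kubectl apply -f` also accepts. The policy name, namespace, labels, and port are hypothetical.

```python
import json


def allow_ingress_policy(name, namespace, target_labels, client_labels, port):
    """Build a NetworkPolicy that lets pods matching `client_labels` reach
    pods matching `target_labels` on one TCP port. Because the policy selects
    the target pods for Ingress, other inbound traffic to them is not allowed
    unless another policy permits it."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": client_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical example: only the frontend may call the API pods on port 8080.
policy = allow_ingress_policy(
    "api-allow-frontend", "shop", {"app": "api"}, {"app": "frontend"}, 8080
)
print(json.dumps(policy, indent=2))
```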

Whitelisting and Blacklisting Traffic

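Network policies can be used to allow or block traffic between components of your cluster based on criteria that include pod labels, IP addresses, or namespaces. Native Kubernetes network policies work as allowlists: once a policy selects a pod, only the traffic it explicitly permits gets through, so blocking is achieved by leaving traffic unlisted. Some network plugins, such as Calico, add explicit deny rules through their own policy resources. Together, these controls let you decide which services can communicate with each other and prevent unauthorized access to sensitive data or resources.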

Namespace Isolation and Segmentation

By applying network policies at the namespace level, you can isolate and segment applications or environments within your cluster, restricting traffic to only the necessary components and preventing potential security risks.

Ingress and Egress Controls

Controlling ingress and egress traffic is critical for managing the flow of data into and out of your Kubernetes cluster and protecting it from external threats.

Ingress Controllers and Load Balancing

Ingress controllers in Kubernetes manage the routing of external traffic to the appropriate services within your cluster based on predefined rules. Load balancing can be achieved through built-in Kubernetes services or third-party solutions like NGINX and HAProxy. These solutions allow you to route traffic based on criteria such as path, host, or headers. They also allow you to provide TLS termination and other security features.
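A host-based routing rule with TLS termination might look like the following sketch, which builds an `Ingress` manifest as a Python dictionary. The hostname, Secret name, and backend Service are hypothetical, and a real cluster also needs an ingress controller installed to act on the resource.

```python
import json


def tls_ingress(name, namespace, host, tls_secret, service, port):
    """Build an Ingress that terminates TLS for `host` using the certificate
    stored in the Secret `tls_secret` and forwards all paths to `service`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {
                        "service": {"name": service, "port": {"number": port}}
                    },
                }]},
            }],
        },
    }


# Hypothetical values for illustration only.
ingress = tls_ingress("shop-web", "shop", "shop.example.com", "shop-tls", "web", 80)
print(json.dumps(ingress, indent=2))
```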

Ingress Access Best Practices

- Correct the default "any-any-any allow" Kubernetes policy by applying a deny-all policy for every namespace.

- Prevent services from accepting incoming traffic directly from external IPs unless a load-balancer or ingress is attached. Only allow incoming traffic from load-balancers or ingresses.

- Limit traffic to specific protocols and ports according to the service's requirements (e.g., HTTP/HTTPS for web services, UDP and TCP 53 for DNS service).

- Accept traffic only from other services (pods) that consume them, whether in the same namespace or from another.

- To create an ingress policy from a pod in another namespace, add a label to the namespace.
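The first and last practices in this list can be sketched as two small manifest builders: a deny-all ingress policy generated for every namespace, and an allow rule that admits traffic from a namespace carrying a chosen label. The namespace names, the `team: checkout` label, and the port are hypothetical.

```python
def default_deny_ingress(namespace):
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector matches all pods; no ingress rules means deny.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_from_namespace(namespace, target_labels, source_ns_labels, port):
    """Allow pods in namespaces labeled `source_ns_labels` to reach the
    target pods on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-from-labeled-ns", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"namespaceSelector": {"matchLabels": source_ns_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


# Hypothetical namespaces: deny by default everywhere, then open one path.
policies = [default_deny_ingress(ns) for ns in ("shop", "payments")]
policies.append(
    allow_from_namespace("payments", {"app": "ledger"}, {"team": "checkout"}, 8443)
)
print([p["metadata"]["name"] for p in policies])
```

Because network policies are additive, the allow rule opens only the listed path while the deny-all policy continues to cover everything else in the namespace.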

Egress Traffic Management

Controlling egress traffic from your Kubernetes cluster is essential for preventing data leakage and ensuring that outbound connections are restricted to only the required destinations. You can achieve this by using egress network policies, which allow you to define rules for outbound traffic from your pods or namespaces. Additionally, egress gateways or proxy solutions like Squid can be used to control and monitor outbound traffic from your cluster.

Egress Access Best Practices

- Understand the need for each external service used by your microservices. Products like Cortex Cloud Compute Defender can help identify external flows engaged by microservices.

- If a pod needs to connect to an external service by DNS name (FQDN) that has no fixed IP address, use an external firewall or proxy, as native Kubernetes network policies match external destinations only by IP address range.

- Prevent outbound traffic from pods that don't need external connections to reduce the risk of data exfiltration or downloading malicious binaries.

- Apply a block egress policy if you have no external dependencies, but ensure essential services like Kubernetes DNS Service remain connected when enforcing egress policies.
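The last practice above, blocking egress while keeping cluster DNS reachable, could be expressed roughly as follows. This sketch assumes the DNS pods run in `kube-system` and carry the conventional `k8s-app: kube-dns` label, which is common but should be checked against your cluster. The `kubernetes.io/metadata.name` namespace label is set automatically by recent Kubernetes versions.

```python
def deny_egress_except_dns(namespace):
    """Block all outbound traffic from pods in `namespace` except DNS
    lookups to the cluster DNS pods (assumed labels, see comments)."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "deny-egress-except-dns", "namespace": namespace},
        "spec": {
            "podSelector": {},  # applies to every pod in the namespace
            "policyTypes": ["Egress"],
            "egress": [{
                "to": [{
                    # Both selectors in one entry must match (logical AND).
                    "namespaceSelector": {
                        "matchLabels": {"kubernetes.io/metadata.name": "kube-system"}
                    },
                    # Assumption: cluster DNS pods use this conventional label.
                    "podSelector": {"matchLabels": {"k8s-app": "kube-dns"}},
                }],
                "ports": [
                    {"protocol": "UDP", "port": 53},
                    {"protocol": "TCP", "port": 53},
                ],
            }],
        },
    }


policy = deny_egress_except_dns("payments")  # hypothetical namespace
print(policy["spec"]["egress"][0]["ports"])
```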

Identity-based microsegmentation helps restrict the communication between applications at Layer 3 and Layer 4 while containerized next-gen firewalls perform Layer 7 deep packet inspection and scan all allowed traffic to identify and prevent known and unknown threats.

DNS Policies and Security

DNS is integral to Kubernetes networking, as it provides name resolution for services and other components within your cluster. Ensure the security and integrity of your DNS infrastructure to prevent attacks like DNS spoofing or cache poisoning.

Kubernetes provides built-in DNS policies for controlling the behavior of DNS resolution within your cluster, and you can also use external DNS providers or DNS security solutions like DNSSEC to enhance the security of your DNS infrastructure.

Service Mesh and Network Encryption

Service mesh is a dedicated infrastructure layer that provides advanced networking features, such as traffic routing, load balancing, and security for your microservices and containerized applications.

Implementing Service Mesh

Service mesh solutions like Istio and Linkerd can be integrated with your Kubernetes cluster to provide advanced networking capabilities and enhance the security of your containerized applications. These solutions offer features like mutual TLS, access control, and traffic encryption, which can help protect your applications, particularly microservices, from various security threats.

Mutual TLS (mTLS) for Secure Communication

Mutual TLS (mTLS) is a security protocol where both the client and server authenticate each other's identities before establishing a secure connection. Unlike traditional TLS, where only the server is authenticated by the client, mTLS adds an additional layer of security by requiring the client to present a certificate. The added requirement verifies that both parties are who they claim, which can help prevent unauthorized access, data leakage, and man-in-the-middle attacks.
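In a service mesh, sidecar proxies usually handle this handshake on the application's behalf. To show what the extra requirement means at the socket level, the sketch below configures a server-side context with Python's standard `ssl` module so that clients without a valid certificate are rejected. The function name and file path parameters are hypothetical, and the paths are optional so the example runs without certificate files.

```python
import ssl


def make_mtls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Return a server-side TLS context that also demands a client certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # One-way TLS would leave this at CERT_NONE; mTLS requires a client cert.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        # The server's own identity, presented to clients.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # The CA that must have signed any client certificate.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


ctx = make_mtls_server_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)
```

A real deployment would pass all three paths. Without a server certificate and a client CA, no handshake can succeed.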

Observability and Control of Network Traffic

Service mesh solutions also provide observability and control over your network traffic, allowing you to monitor the performance and security of your applications in near-real time. Early identification of unauthorized access, unusual traffic patterns, and other potential security issues means you can take early corrective actions to mitigate the risks.

Encrypting Traffic and Sensitive Data

To ensure the confidentiality and integrity of data within your cluster, it’s essential to implement encryption techniques for both internal and external communications.

IPsec for Encrypting Communication Between Hosts

IPsec safeguards cluster traffic by encrypting communications between all master and node hosts. Remain mindful of the IPsec overhead and refer to your container orchestration documentation for enabling IPsec communications within the cluster. Import the necessary certificates into the relevant certificate database and create a policy to secure communication between hosts in your cluster.

Configuring Maximum Transmission Unit (MTU) for IPsec Overhead

Adjust the route or switching MTU to accommodate the IPsec header overhead. For instance, if the cluster operates on an Ethernet network with a maximum transmission unit (MTU) of 1500 bytes, modify the SDN MTU value to account for IPsec and SDN encapsulation overhead.
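The adjustment is simple subtraction, as the sketch below shows. The overhead figures are illustrative assumptions: 50 bytes is typical for VXLAN encapsulation over IPv4, while IPsec overhead varies with cipher and mode, so check your platform's documentation for the actual values.

```python
def sdn_mtu(link_mtu, *overheads):
    """Return the MTU left for pod traffic after subtracting each
    encapsulation or encryption overhead (all values in bytes)."""
    total = sum(overheads)
    if total >= link_mtu:
        raise ValueError("overheads consume the entire link MTU")
    return link_mtu - total


VXLAN_OVERHEAD = 50  # typical for VXLAN over IPv4
IPSEC_OVERHEAD = 62  # assumption: depends on cipher and mode

print(sdn_mtu(1500, VXLAN_OVERHEAD, IPSEC_OVERHEAD))  # 1388
```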

Enabling TLS for API Communication in the Cluster

Kubernetes assumes API communication within the cluster is encrypted by default using TLS. Most installation methods create and distribute the required certificates to cluster components. Be aware, though, that some components and installation methods may enable local ports over HTTP. Administrators should stay informed about each component's settings to identify and address potentially insecure traffic.

Kubernetes Control Plane Security

Control planes, particularly in Kubernetes clusters, are prime targets for attacks. To enhance security, harden the following components through inspection and proper configuration:

- Nodes and their perimeters

- Master nodes

- Core components

- APIs

- Public-facing pods

While Kubernetes' default configuration provides a certain level of security, adopting best practices can strengthen the cluster for workloads and runtime communication.

Network Policies (Firewall Rules)

Kubernetes' flat network allows all deployments to reach other deployments by default, even across namespaces. This lack of isolation between pods means a compromised workload could initiate an attack on other network components. Implementing network policies can provide isolation and security.

Pod Security Policy

Kubernetes permits pods to run with various insecure configurations by default. For example, running privileged containers with root permissions on the host is high risk, as is using the host's namespaces and file system or sharing the host's networking. Pod security policies enable administrators to limit a pod's privileges and permissions before allowing deployment into the cluster. Note that the PodSecurityPolicy API was deprecated and then removed in Kubernetes v1.25; newer clusters enforce the same kind of restrictions with the built-in Pod Security Admission controller or an external policy engine. Isolating non-dependent pods from talking to one another using network policies will help prevent lateral movement across containers in the event of a breach.
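The risky settings named above can be spotted with a small manifest check. The following sketch is illustrative only and is not a replacement for admission control in the cluster. The function name and the sample pod are hypothetical, while the field names (`hostNetwork`, `hostPID`, `hostIPC`, `hostPath`, `securityContext.privileged`) come from the Kubernetes pod specification.

```python
def insecure_pod_settings(pod):
    """Return a list of risky settings found in a pod manifest (a dict)."""
    spec = pod.get("spec", {})
    findings = []
    # Sharing the host's network, process, or IPC namespaces.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            findings.append(field)
    # Mounting the host's file system.
    for volume in spec.get("volumes", []):
        if "hostPath" in volume:
            findings.append(f"hostPath volume: {volume.get('name')}")
    # Privileged containers.
    for container in spec.get("containers", []):
        if container.get("securityContext", {}).get("privileged"):
            findings.append(f"privileged container: {container.get('name')}")
    return findings


# Hypothetical pod manifest used only to exercise the check.
pod = {
    "spec": {
        "hostNetwork": True,
        "containers": [
            {"name": "app", "securityContext": {"privileged": False}},
            {"name": "debug", "securityContext": {"privileged": True}},
        ],
    }
}
print(insecure_pod_settings(pod))
```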

Secrets Encryption

Base distributions of Kubernetes don’t encrypt secrets at rest by default (though managed services like GKE do). If an attacker gains access to the key-value store (typically etcd), they can access everything in the cluster, including unencrypted secrets. Encrypting the cluster state store protects the cluster against data-at-rest exfiltration.
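On self-managed clusters, encryption at rest is typically switched on by handing the API server an `EncryptionConfiguration` file through its `--encryption-provider-config` flag. The sketch below generates one such configuration with a fresh random 32-byte key for the `aescbc` provider. The key name `key1` is arbitrary, the choice of provider is an assumption for illustration, and real key material should be stored and rotated according to your own procedures.

```python
import base64
import json
import secrets


def encryption_config(key_name="key1"):
    """Build an EncryptionConfiguration that encrypts Secrets with AES-CBC
    using a newly generated 32-byte key."""
    key = base64.b64encode(secrets.token_bytes(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [{
            "resources": ["secrets"],
            "providers": [
                # The first provider is used for writing new data.
                {"aescbc": {"keys": [{"name": key_name, "secret": key}]}},
                # Fallback so existing unencrypted data can still be read.
                {"identity": {}},
            ],
        }],
    }


config = encryption_config()
# Avoid printing the key itself; show only the structure.
print(config["kind"], list(config["resources"][0]["providers"][0]))
```

The dictionary can be written out with `json.dumps`, since YAML parsers accept JSON. Secrets that already exist stay unencrypted until they are rewritten.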

Role-Based Access Control

While RBAC is not exclusive to Kubernetes, it must be configured correctly to prevent cluster compromise. RBAC allows for granular control over the components in the cluster that a pod or user can access. By restricting what users and pods can view, update, delete, and create within the cluster, RBAC helps limit the potential damage of a compromise.
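As an illustration of that granularity, the sketch below builds a namespaced `Role` that can only read pods and a `RoleBinding` that grants it to a single service account. The namespace, role name, and service account name are hypothetical.

```python
def read_only_pod_role(namespace, role_name="pod-reader"):
    """Role that can view pods in one namespace but not change them."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": role_name},
        "rules": [{
            "apiGroups": [""],  # "" is the core API group
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],
        }],
    }


def bind_role_to_service_account(namespace, role_name, service_account):
    """RoleBinding that grants `role_name` to one service account."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {
            "namespace": namespace,
            "name": f"{role_name}-{service_account}",
        },
        "subjects": [{
            "kind": "ServiceAccount",
            "name": service_account,
            "namespace": namespace,
        }],
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Role",
            "name": role_name,
        },
    }


# Hypothetical names for illustration.
role = read_only_pod_role("shop")
binding = bind_role_to_service_account("shop", "pod-reader", "metrics-agent")
print(role["rules"][0]["verbs"], binding["metadata"]["name"])
```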

Addressing Control Plane Security Through Virtual Patching

Minimizing admin-level access to control planes and ensuring your API server isn’t publicly exposed are the most important security basics.

DevOps and SecOps teams can identify vulnerabilities in application packages, but mitigating these risks takes time. Vulnerable packages must be replaced or patched and tested before deployment, which leaves the environment exposed until the issue is resolved. Solutions like Cortex Cloud automate vulnerability mapping for each workload to provide virtual patching for known vulnerabilities. By utilizing its WAAS component, the solution adjusts traffic inspection policies to detect and block remote HTTP-based exploits.

Network Security Best Practices for Containers and Kubernetes

To summarize the areas discussed, the following best practices serve as a checklist to ensure your teams are equipped to safeguard your containerized applications and data from network-based threats.

Monitoring and Logging Network Traffic

Keeping a close eye on your network traffic is paramount for detecting and responding to security incidents and maintaining the overall health of your containerized environment.

Centralized Logging and Monitoring Solutions

Implementing a centralized logging and monitoring solution for your container and Kubernetes environment, such as ELK Stack, Prometheus, or Cortex Cloud, can help you collect, analyze, and visualize network traffic data from across your infrastructure. Easy access to centralized data will enable you to identify trends, detect anomalies, and gain insights into the performance and security of your infrastructure.

Detecting and Responding to Security Incidents

Monitoring network traffic and establishing alerts for unusual or suspicious activities allows for quick detection and response to incidents involving unauthorized access, data exfiltration, and other malicious activities. Security teams are equipped to take appropriate measures such as isolating affected components, blocking malicious IPs, or updating firewall rules in a timely manner.
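One simple form of such an alert is flagging pods that connect to destinations outside the address ranges they are expected to use. The sketch below assumes flow records have already been collected as `(source_pod, destination_ip)` pairs. The record format, pod names, and addresses are hypothetical, and a production system would draw on real flow logs and richer detection logic.

```python
from collections import Counter
from ipaddress import ip_address, ip_network


def flag_unexpected_egress(flows, allowed_networks, threshold=1):
    """Count, per pod, connections to destinations outside `allowed_networks`
    and return the pods that reach `threshold` or more."""
    allowed = [ip_network(net) for net in allowed_networks]
    counts = Counter()
    for pod, dest in flows:
        addr = ip_address(dest)
        if not any(addr in net for net in allowed):
            counts[pod] += 1
    return {pod: n for pod, n in counts.items() if n >= threshold}


# Hypothetical flow records: (source pod, destination IP).
flows = [
    ("web", "10.0.1.5"),
    ("web", "203.0.113.9"),
    ("batch", "10.0.2.7"),
    ("web", "203.0.113.10"),
]
alerts = flag_unexpected_egress(flows, ["10.0.0.0/8"])
print(alerts)  # {'web': 2}
```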

Network Traffic Visualization and Analysis

Visualizing and analyzing your network traffic data can help you identify patterns and trends that may indicate potential security risks. Tools like Kibana, Grafana, or custom-built dashboards can be used to create visual representations of your network traffic, allowing you to spot anomalies and investigate security incidents more effectively.

Secure Network Configurations

Hardening your network configurations and implementing strong access controls are vital for protecting your container and Kubernetes environment from security threats.

Hardening Host and Cloud Network Settings

Maintaining the security of your environment requires ensuring secure network configurations for both your container host and individual containers. Essential measures involve disabling unused network services, limiting network access to only the necessary components, and applying security patches and updates to your host operating system and container runtime.

Network Access Controls and Authentication

To prevent unauthorized access and maintain the integrity of your container and Kubernetes environment, it’s essential to implement strong access controls and authentication mechanisms. Key measures involve utilizing role-based access control (RBAC) for managing user permissions in Kubernetes, incorporating multifactor authentication (MFA), and employing network security solutions, such as VPNs or firewalls, to limit access to your environment.

Regular Network Security Assessments

Regular network security assessments — vulnerability scans, penetration tests, and security audits — are a must when it comes to identifying potential weaknesses in your container and Kubernetes environment. Key aspects of these assessments involve examining network configurations, firewall rules, and security policies to ensure adherence to industry best practices and compliance requirements.

By following these best practices and implementing effective network security measures, you can fortify your container and Kubernetes environment from potential network-based threats and ensure the safety and integrity of your applications and data.

Cloud Network Security FAQs

MTU, or maximum transmission unit, refers to the largest size of a data packet that can be transmitted over a network link. It’s a key parameter in determining the efficiency and performance of data communication across networks. Transmission Control Protocol (TCP) derives its maximum segment size from the MTU, which determines how much data each packet carries in internet transmissions.

To accommodate the additional header overhead introduced by encryption and encapsulation, it’s necessary to adjust the MTU value when using IPsec for secure communication. For example, if the Kubernetes cluster operates on an Ethernet network with a default MTU of 1500 bytes, the MTU value should be reduced to account for the IPsec and SDN encapsulation overhead. The adjustment prevents packet fragmentation and ensures more efficient and secure data transmission.