What Is a Workload?

A workload is a computational task, process or data transaction. Workloads encompass the computing power, memory, storage and network resources required for the execution and management of applications and data. Within the cloud framework, a workload is a service, function or application that uses computing power hosted on cloud servers. Cloud workloads rely on technologies such as virtual machines (VMs), containers, serverless functions, microservices, storage buckets, software as a service (SaaS), infrastructure as a service (IaaS) and more.

Workloads Explained

A workload comprises all tasks that a computer system or software is processing. These tasks can range from the completion of a minor computational action to managing complex data analysis or running intensive business-critical applications. Workloads, in essence, define the demands placed on IT resources, which include servers, virtual machines (VMs) and containers.

We can also categorize workloads at the application level with consideration to operations such as data processing, database management and rendering tasks. The level and type of workloads can influence the performance of a system. In some cases, without effective management, the intensity of the load can cause interruptions or slowdowns of the system.

Types of Workloads

Unique requirements for computing, storage and networking resources define each type of workload.

- Compute workloads are applications or services that require processing power and memory to perform their functions. These can include VMs, containers and serverless functions.

- Storage workloads refer to services requiring large amounts of data storage, such as content management systems and databases.

- Network workloads, such as video streaming and online gaming, require high network bandwidth and low latency.

- Big-data workloads, such as machine learning (ML) and artificial intelligence (AI), require processing and analysis of large datasets.

- Web workloads are applications or services accessed via the internet. These include e-commerce sites, social media platforms and web-based applications.

- High-performance computing workloads refer to services that need high processing power. Examples include weather modeling and financial modeling.

- Internet of things (IoT) workloads require processing and analysis of data from sensors and other devices, such as those used in smart homes, industrial automation and connected vehicles.

Workloads Then and Now

In the early days of shared-use mainframe computers, workloads were defined by their use. Transactional workloads executed jobs one at a time to ensure data integrity, while batch workloads represented a batch of commands or programs that ran without user intervention. Real-time workloads processed incoming data in real time.

But with the rise of cloud adoption, the concept of workloads has evolved, shifting from traditional on-premises data centers to cloud-based environments. This transformation involves migrating workloads to infrastructure as a service (IaaS), platform as a service (PaaS) or software as a service (SaaS) cloud environments.

Today, in the context of cloud computing, a workload is a cloud-native or non-cloud-native application or capability that can run on a cloud resource. VMs, databases, containers, Hadoop nodes and applications are all considered cloud workloads.

The hybrid multicloud network is vastly more complicated than legacy, on-premises data centers. Organizations must now ensure container security and integrity in private and public clouds, often hosted by multiple cloud service providers (CSPs). But cloud services accommodate fluctuating workloads without significant upfront capital, which can make them cost-effective.

Cloud Workload Characteristics

Because they run on common architectures and cloud infrastructure, cloud workloads share distinct characteristics. These include:

- Scalability: Cloud workloads offer the ability to increase or decrease resources based on demand, which enhances resource management efficiency.

- Elasticity: Cloud workloads adjust to changes in demand through autonomous provisioning and deprovisioning of resources, a capacity that is pivotal to modern enterprises.

- Resource Pooling: Cloud workloads share a pool of configurable computing resources, promoting efficient resource utilization.

- Measured Service: Cloud systems automatically control and optimize resource use by applying a metering capability suitable to the type of service, whether IaaS, PaaS or SaaS.

- On-Demand Self-Service: Cloud users can provision computing capabilities, such as server time and network storage, without human interaction with service providers.

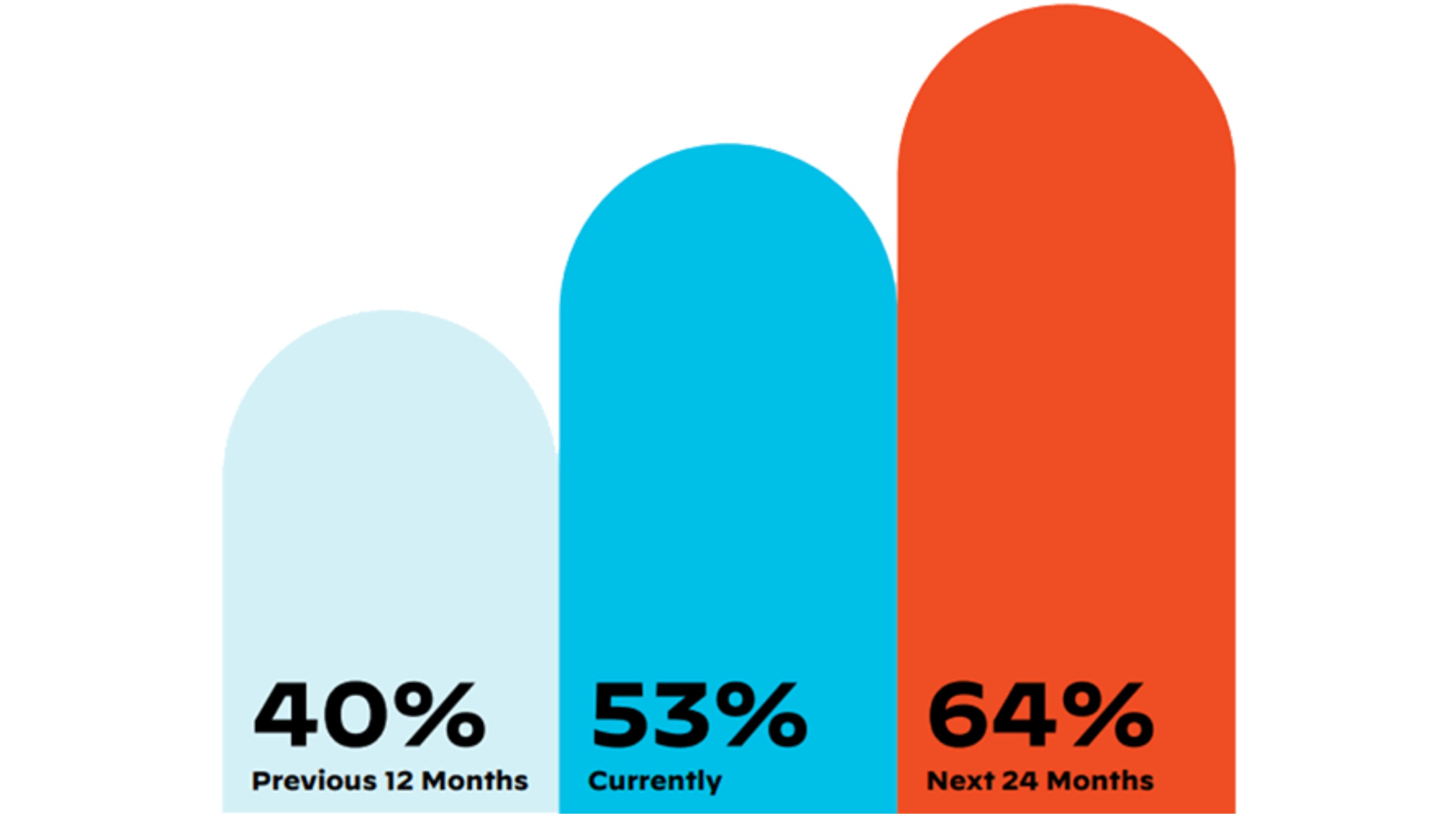

Figure 1: Percentage of workloads moved to the cloud this year, according to the State of Cloud-Native Security Report

Cloud or On-Premises?

The decision of where to run workloads will depend on variables specific to your organization and workloads. Organizations should evaluate options and consider performance, security, compliance and cost to determine the best environment.

Some workloads may require specific hardware or network configurations, which underscores the need to identify operating systems, software dependencies and other infrastructure requirements. Performance and scalability are also important considerations. Your workloads may require high performance and low latency or the ability to quickly scale up and down.

Factor in security and compliance when choosing where to run workloads. Regulations may restrict certain workloads to on-premises or private cloud environments. Cost is another consideration. Public cloud services can provide a flexible and cost-effective way to run workloads, especially those with variable demand. Other workloads, though, may prove more cost-effective running on-premises or in a private cloud environment.

Deploying Workloads in the Cloud

The cloud suits a wide variety of workloads, and some are particularly well suited to it:

- Web Applications: Cloud platforms offer the scalability and availability needed to handle high volumes of requests for web applications.

- Big-Data and Analytics: Cloud providers offer big data and analytics tools to help manage and process large amounts of data.

- DevOps and CI/CD: Cloud platforms can provide the infrastructure to support automated software development, testing and deployment processes.

- Disaster Recovery and Backup: Cloud platforms can be used for offsite backups of data and systems, as well as to provide failover support.

- Machine Learning and AI: Cloud providers offer tools for training ML models and scaling them in production environments.

- IoT and Edge Computing: Cloud platforms provide services to support IoT devices and edge computing applications, such as data processing, storage and analytics.

But the cloud does not suit all workloads. Organizations should base their platform choice on analysis of the requirements and characteristics of each workload.

Deploying Workloads On-Premises

Factors to weigh when determining which workloads to deploy on-premises include:

- Security Requirements: On-premises deployment of workloads in highly regulated industries may be the best option to ensure data security and regulatory compliance.

- Data-Intensive Workloads: Workloads that process and store large volumes of data may benefit from on-premises deployment because data transfer and cloud storage can be costly at that scale.

- Latency-Sensitive Workloads: Applications requiring low latency, such as real-time data processing or gaming, may benefit from on-premises deployment.

- Customized Workloads: Applications needing customized hardware may be better served by on-premises deployment, which gives control over the underlying infrastructure.

- Cost Considerations: Running workloads on-premises can be cost-effective, depending on resource usage, storage requirements and usage patterns.

Hybrid Cloud Deployments

A hybrid cloud is a computing environment that combines on-premises infrastructure with cloud services from one or more private or public cloud providers. This type of cloud architecture allows organizations to benefit from the offerings of both on-premises and cloud infrastructure.

With a hybrid cloud, organizations can deploy workloads across multiple environments to accommodate requirements of the application or workload. They can choose to keep sensitive workloads on-premises to meet regulatory requirements, while opting to deploy other workloads requiring scalability and flexibility on a public cloud.

To enable a hybrid cloud environment, organizations must have the necessary infrastructure, such as networking and connectivity between on-premises infrastructure and cloud services. They will also need a cloud management platform, automation tools and security solutions to manage workloads across multiple environments.

Cloud-Agnostic Workloads

Many organizations prioritize cloud-agnostic strategies, preferring the freedom of cloud-agnostic infrastructure, application architecture and development. Workloads designed to run on any cloud platform offer several benefits:

- Avoiding Vendor Lock-In: By designing cloud-agnostic applications, organizations can switch cloud providers without a costly overhaul of workloads and tech stacks.

- Portability: Cloud-agnostic workloads can be deployed on any cloud platform, which provides greater flexibility and agility.

- Cost Savings: The option to deploy workloads on the platform offering the most cost-effective resources allows organizations to take advantage of cost fluctuations or use spot instances.

- Avoiding Single Points of Failure: Organizations can avoid relying on a single cloud provider for critical applications, which reduces risks of downtime or data loss.

To enable cloud-agnostic workloads, organizations typically use standard technologies and interfaces supported by multiple cloud providers, such as Kubernetes for container orchestration and Terraform for infrastructure as code.

Workload Management

Workload management refers to the continuous cycle of monitoring, controlling and allocating resources to workloads. It comprises the processes required to optimize and balance the distribution of computing resources so that workloads execute with minimal disruption or downtime.

In a cloud environment, workload management is critical because multiple users and applications share resources. The workload manager must ensure that each workload has access to the resources it needs without impacting the performance of other workloads.

Workload management can become especially complex in multicloud environments where workloads are distributed across multiple cloud platforms. Effective multicloud workload management requires a clear understanding of each cloud platform's capabilities and the specific requirements of each workload.

Resource Allocation

Workload management involves allocating computing resources, such as CPU, memory and storage, to different workloads based on their needs and priorities. Effective allocation requires monitoring resource usage, predicting future demand and adjusting resource allocation as needed.
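
The monitor, predict and adjust loop described above can be sketched in a few lines. This is a minimal illustration, not a production forecaster: it assumes a simple moving average as the prediction method, and the function names, the three-sample window and the 20% headroom are all hypothetical choices made for the example.

```python
from statistics import mean

def forecast_demand(samples, window=3):
    """Predict next-interval demand as the mean of the most recent samples.

    `samples` is a list of observed usage values (for example, CPU cores in use).
    The moving-average method and window size are assumptions for illustration.
    """
    return mean(samples[-window:])

def target_allocation(samples, headroom=0.2, window=3):
    """Turn the forecast into an allocation by adding a safety margin."""
    return forecast_demand(samples, window) * (1 + headroom)

# Observed CPU usage (cores) over the last four monitoring intervals.
usage = [20, 40, 50, 60]
print(forecast_demand(usage))    # mean of the last three samples
print(target_allocation(usage))  # forecast plus 20% headroom
```

Real workload managers typically use richer signals (seasonality, queue depth, request rates), but the shape of the loop is the same: observe, forecast, then size the allocation with some margin.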

Load Balancing

Workload management also involves load balancing, or distributing workloads across multiple computing resources to optimize resource utilization and prevent bottlenecks. Organizations typically rely on techniques such as round-robin, least connections and IP hash to achieve well-balanced loads.
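
To make the three techniques concrete, the sketch below implements each one as a small Python class. It is illustrative only: the class names, the `pick` and `release` methods and the server labels are hypothetical, and the IP hash variant assumes SHA-256 as the hash function, where real load balancers may use other schemes.

```python
import hashlib
from itertools import cycle

class RoundRobin:
    """Hand out servers in a fixed rotation."""
    def __init__(self, servers):
        self._rotation = cycle(servers)

    def pick(self, client_ip=None):
        return next(self._rotation)

class LeastConnections:
    """Send each request to the server with the fewest active connections."""
    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection closes so the count stays accurate.
        self.active[server] -= 1

class IPHash:
    """Map a client IP to the same server every time (session affinity)."""
    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]

servers = ["app-1", "app-2", "app-3"]
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # rotation wraps back to the first server
```

Round-robin is the simplest choice when requests are uniform, least connections adapts better to long-lived or uneven requests, and IP hash trades even distribution for keeping a given client on the same server.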

Prioritizing Workloads

To manage workloads, DevOps teams need to prioritize their workloads based on criticality, performance requirements and service level agreements. Proper prioritization ensures mission-critical workloads receive the resources needed to run optimally, even during peak demand.
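
One simple way to express prioritization is to grant resources in priority order until capacity runs out. The sketch below assumes a single resource (CPU cores) and a convention where a lower number means a more critical workload; the `Workload` type, the `allocate` function and the workload names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    priority: int       # assumed convention: 1 is most critical
    cpu_request: float  # CPU cores requested

def allocate(workloads, capacity):
    """Grant CPU in priority order and return {workload name: cores granted}."""
    grants = {}
    remaining = capacity
    for workload in sorted(workloads, key=lambda w: w.priority):
        granted = min(workload.cpu_request, remaining)
        grants[workload.name] = granted
        remaining -= granted
    return grants

workloads = [
    Workload("batch-report", priority=3, cpu_request=4.0),
    Workload("checkout-api", priority=1, cpu_request=6.0),
    Workload("search", priority=2, cpu_request=4.0),
]
# With 12 cores available, the lowest-priority workload absorbs the shortfall.
print(allocate(workloads, capacity=12.0))
```

Under peak demand, the mission-critical workload receives its full request while the batch job is throttled, which is the behavior the prioritization step is meant to guarantee.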

Monitoring and Optimization

Monitoring the performance of workloads and adjusting resource allocation to optimize performance and minimize costs is central to workload management. This may involve automatic scaling, autotuning and other optimization techniques.
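
As an illustration of automatic scaling, the sketch below applies a proportional rule: scale the replica count by the ratio of observed utilization to target utilization, then clamp the result to configured bounds. The Kubernetes Horizontal Pod Autoscaler documents a rule of this general shape, but the function name, parameters and default bounds here are assumptions made for the example.

```python
import math

def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas=1, max_replicas=10):
    """Return the replica count that would bring utilization back to target.

    Utilization values are percentages (for example, average CPU across replicas).
    """
    raw = math.ceil(current_replicas * current_util / target_util)
    return max(min_replicas, min(max_replicas, raw))

print(desired_replicas(4, current_util=90, target_util=60))  # scale out
print(desired_replicas(4, current_util=30, target_util=60))  # scale in
```

The upper bound caps cost when demand spikes, and the lower bound keeps a minimum level of availability when demand falls.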

Workload Automation

Commonly used by enterprises with complex IT infrastructures, workload automation streamlines IT processes by automating the scheduling, execution and monitoring of workloads. With the growth of digital transformation, workload automation has become essential to functional IT operations. Benefits of workload automation include:

Reduced Errors

By automating repetitive and manual tasks, workload automation eliminates the need for human intervention in those tasks, which reduces the risk of error and potential adverse events, such as data loss.

Improved Efficiency

Automating repetitive and time-consuming tasks frees teams to focus on critical work. Rather than having staff manually check logs, for example, workload automation can identify errors and alert IT staff, who can then concentrate on resolving issues.

Optimized Resource Utilization

Workload automation can optimize resource utilization by ensuring that tasks and processes are scheduled and executed at optimal times. By scheduling resource-intensive tasks to run during off-peak hours, for example, teams reduce the potential for resource contention.
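
Off-peak scheduling can be sketched as a small helper that decides when a resource-intensive job should start. The off-peak window used here (22:00 to 06:00) and the function names are hypothetical; a real scheduler would read the window from configuration and account for time zones.

```python
from datetime import datetime, time

# Assumed off-peak window: 22:00 until 06:00 the next morning.
OFF_PEAK_START = time(22, 0)
OFF_PEAK_END = time(6, 0)

def is_off_peak(moment):
    """True if `moment` falls inside the overnight off-peak window."""
    t = moment.time()
    return t >= OFF_PEAK_START or t < OFF_PEAK_END

def next_run(now):
    """Run immediately if off-peak; otherwise defer to tonight's window start."""
    if is_off_peak(now):
        return now
    return now.replace(hour=OFF_PEAK_START.hour, minute=OFF_PEAK_START.minute,
                       second=0, microsecond=0)

# A job submitted mid-afternoon is deferred to 22:00 the same day.
print(next_run(datetime(2024, 1, 15, 14, 30)))
```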

Increased Agility

By automating the provisioning and deployment of applications and services, workload automation cuts the time and effort required to bring new services online. This enables IT teams to respond to business needs more quickly and efficiently.

Enhanced Compliance

By executing IT processes in a consistent and auditable manner through workload automation, organizations reinforce compliance with regulatory standards, ultimately reducing the risk of compliance violations.

Reduced Costs

By removing repetitive and manual tasks from the equation, workload automation optimizes resource utilization and reduces the need for additional hardware and software resources. It also reduces the cost of IT operations while allowing IT staff to focus on higher-value tasks.

Workload automation tools on the market range from open-source solutions (e.g., Jenkins and Ansible) to enterprise-grade platforms (e.g., BMC Control-M and IBM Workload Automation). These tools typically provide a range of features and functionality, including job scheduling, event-driven automation, workload monitoring and reporting, and integration with other IT systems and applications.

Cloud Workload Protection

The cloud migration of workloads offers organizations numerous benefits, while also introducing security challenges. The attack surface expands in the cloud. Even with security controls in place, a zero-day vulnerability or misconfigured server or storage bucket can pose significant risks to workloads.

The following cloud workload security strategies can help secure your organization:

- Implement Access Management Controls: Implementing a least-privilege access policy can limit the potential damage of a security breach.

- Automate Security Controls: Automation can ensure that security controls are consistently applied across workloads — and speed up response times in the event of a security incident.

- Monitor and Manage Vulnerabilities: Regularly scanning for vulnerabilities and promptly applying patches is crucial to protecting cloud workloads.

- Secure Containers and Serverless Workloads: Scan container images for vulnerabilities and implement appropriate isolation policies for serverless functions.

- Encrypt Sensitive Data: Data encryption can protect sensitive data even if other security controls fail. Remember to encrypt data at rest and in transit.
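
Several of these strategies lend themselves to automated checks. As one example, the sketch below flags access policy statements that grant wildcard permissions, a common violation of least privilege. The policy structure (a `statements` list with `id`, `effect`, `actions` and `resources` fields) is a hypothetical schema invented for this illustration and does not correspond to any specific cloud provider's format.

```python
def overly_broad(policy):
    """Return (statement id, field) pairs where an allow statement uses a wildcard."""
    findings = []
    for statement in policy["statements"]:
        if statement.get("effect") != "allow":
            continue  # deny statements cannot over-grant access
        for field in ("actions", "resources"):
            if "*" in statement.get(field, []):
                findings.append((statement["id"], field))
    return findings

# Hypothetical policy: the first statement is scoped, the second is not.
policy = {
    "statements": [
        {"id": "read-logs", "effect": "allow",
         "actions": ["logs:read"], "resources": ["bucket/app-logs"]},
        {"id": "admin-all", "effect": "allow",
         "actions": ["*"], "resources": ["*"]},
    ]
}
print(overly_broad(policy))
```

Running a check like this in a deployment pipeline is one way to apply access controls consistently rather than relying on manual review.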

Implement a CWPP

Designed for scalability, a cloud workload protection platform (CWPP) can adapt to protect an increasing number of workloads, providing consistent security regardless of the size of the cloud environment. CWPPs are workload-centric, meaning they protect the workload regardless of where it resides: on-premises, in the cloud or in a hybrid environment. Given that workloads can move rapidly between platforms and infrastructures, this type of workload protection is essential.

CWPPs give organizations a much-needed platform alternative to tool sprawl, solving issues with complexity while maximizing security with centralized visibility and control, vulnerability management, access management, antimalware protection and more.